By Michele Campa

Overview

In this blog post we take a look at a race condition we found in Microsoft Windows Cloud Minifilter (i.e. cldflt.sys) in March 2024. This vulnerability was patched in October 2025 and assigned CVE-2025-55680.

The vulnerability occurs within the HsmpOpCreatePlaceholders() function, which is reached when a user calls the CfCreatePlaceholders() API to create one or more new placeholder files or directories under a sync root directory tree.

Before the placeholder file is created, the HsmpOpCreatePlaceholders() function validates the filename by checking whether the userspace buffer holding it contains the \ or the : character. This validation was introduced when the CVE-2020-17136 vulnerability was patched. After the user-provided filename is checked, the function calls FltCreateFileEx2() to create the file. Because both the check and the file creation read the filename from memory that the user can still modify, there is a time window between the filename validation and the file creation in which the user can alter the filename. This allows the user to create a file or directory anywhere on the system, leading to privilege escalation.

Background

Microsoft Cloud API

The sync engine is a service to sync files and directories between the local host and a remote host, such as OneDrive. It uses the Cloud API implementation in cldapi.dll.[1] The sync root folder is the root folder registered as a synced folder, where all the nested files and directories are synced. All the files contained in the sync root directory exist in one of the following states:

- Pinned full file – The file is hydrated, i.e. the content is downloaded from the cloud explicitly by the user (e.g. from the explorer context menu).

- Full file – The file is constantly hydrated, i.e. the content is constantly downloaded from the cloud keeping the file up to date. If space is needed the download can be stopped.

- Placeholder – The file is automatically hydrated by the sync provider when the file is accessed by the user. The file state is influenced by the hydration policy set on the sync root folder.

The sync root folder and all its subfolders follow different behaviors according to the population policy set on the sync root folder. The sync provider is responsible for initializing a directory as a sync root folder, using the CfRegisterSyncRoot() API implementation in cldapi.dll.

```c
HRESULT CfRegisterSyncRoot(
  [in] LPCWSTR                    SyncRootPath,
  [in] const CF_SYNC_REGISTRATION *Registration,
  [in] const CF_SYNC_POLICIES     *Policies,
  [in] CF_REGISTER_FLAGS          RegisterFlags
);
```

The provider’s information, such as its name and version, is supplied in the Registration parameter, while the Policies parameter specifies the policies that apply to the folder and its nested files. The sync behavior strongly depends on the policies that are set.[2]

Some of the policies are listed below:

- CF_HYDRATION_POLICY: The hydration policy defines when a file is hydrated, i.e. filled with cloud data. For example, if the policy is set to CF_HYDRATION_POLICY_FULL, the file or directory is fully downloaded when requested (even if only one byte has been requested). With CF_HYDRATION_POLICY_ALWAYS_FULL, dehydration operations (i.e. discarding the file content) fail.

- CF_POPULATION_POLICY: The population policy defines how directories are populated, i.e. when the files they contain should be downloaded. For example, if it is set to CF_POPULATION_POLICY_ALWAYS_FULL, the directory is kept up to date with the cloud directory contents. With CF_POPULATION_POLICY_FULL, the directory is fully populated when the user navigates to it. Finally, with CF_POPULATION_POLICY_PARTIAL, the directory is populated by downloading only the information the user requests while navigating.

cldapi.dll is backed by the Cloud Files Minifilter driver, i.e. cldflt.sys, which implements all of this functionality using file system filter features.

Cloud Files Minifilter Driver

The Cloud Files Minifilter driver (cldflt.sys) is provided by Microsoft to expose file system functionality to cloud applications such as OneDrive. It is a file system filter driver and is registered with the Filter Manager by default. User-mode components interact with it through I/O control requests and the IRP major functions it filters. It registers callbacks for the following MajorFunction codes:

- IRP_MJ_CREATE – File or directory create/open.

- IRP_MJ_CLEANUP – File or directory closed, i.e. the last handle to the file object has been closed.

- IRP_MJ_DIRECTORY_CONTROL – Used when a userspace application enumerates a directory or requests change notifications through APIs like ReadDirectoryChangesW().

- IRP_MJ_FILE_SYSTEM_CONTROL – Used when a userspace application issues a file system control (FSCTL) request, e.g. through NtFsControlFile().

- IRP_MJ_LOCK_CONTROL – Issued to acquire or release byte-range locks on a file.

- IRP_MJ_READ – Request to read a file or directory.

- IRP_MJ_WRITE – Request to write a file or directory.

- IRP_MJ_QUERY_INFORMATION – Request to get file or directory information.

- IRP_MJ_SET_INFORMATION – Request to set file or directory information.

Cloud Files Minifilter Driver – IOCTLs

For the NtDeviceIoControlFile() and the NtFsControlFile() userspace APIs, the Cloud Files Minifilter Driver defines two callbacks:

- HsmFltPreFILE_SYSTEM_CONTROL(): callback executed before the actual operation.

- HsmFltPostFILE_SYSTEM_CONTROL(): callback executed after the actual operation.

Requests issued through NtDeviceIoControlFile() and NtFsControlFile() are therefore handled by the HsmFltPreFILE_SYSTEM_CONTROL() callback before the operation is executed, and by HsmFltPostFILE_SYSTEM_CONTROL() after it has been executed.

The HsmFltPreFILE_SYSTEM_CONTROL() callback filters multiple I/O control code requests. Some of them are listed below:

- FSCTL_DELETE_REPARSE_POINT – This I/O control request is denied by default.

- FSCTL_GET_REPARSE_POINT – Used to get the reparse point set on a file or directory.

- FSCTL_SET_REPARSE_POINT – Used to set a reparse point on a file or directory.

- FSCTL_SET_REPARSE_POINT_EX – Also used to set a reparse point on a file or directory.

- 0x903BC – A custom I/O control code used by cldapi.dll to execute operations such as CfpRegisterSyncRoot(), CfConvertToPlaceholder() and CfCreatePlaceholders().

Cloud Files Minifilter Driver – IOCTL 0x903bc Operation 0xC0000001

When the I/O control code is 0x903BC, Tag is 0x9000001A and OpType is 0xC0000001, the request is a CfCreatePlaceholders() request. The CfCreatePlaceholders() API is used by the sync provider to create one or more placeholder files and directories.[3]

The prototype is shown below:

```c
HRESULT CfCreatePlaceholders(
  [in]      LPCWSTR                    BaseDirectoryPath,
  [in, out] CF_PLACEHOLDER_CREATE_INFO *PlaceholderArray,
  [in]      DWORD                      PlaceholderCount,
  [in]      CF_CREATE_FLAGS            CreateFlags,
  [out]     PDWORD                     EntriesProcessed
);
```

The following listing shows the CF_PLACEHOLDER_CREATE_INFO data structure.

```c
typedef struct CF_PLACEHOLDER_CREATE_INFO {
  LPCWSTR                     RelativeFileName;
  CF_FS_METADATA              FsMetadata;
  LPCVOID                     FileIdentity;
  DWORD                       FileIdentityLength;
  CF_PLACEHOLDER_CREATE_FLAGS Flags;
  HRESULT                     Result;
  USN                         CreateUsn;
} CF_PLACEHOLDER_CREATE_INFO;

typedef struct CF_FS_METADATA {
  FILE_BASIC_INFO BasicInfo;
  LARGE_INTEGER   FileSize;
} CF_FS_METADATA;
```

The CfCreatePlaceholders() API opens the BaseDirectoryPath directory and sends it an I/O control request with the 0x903BC code. The input buffer contains all the information that was passed as arguments to CfCreatePlaceholders(); we refer to its layout as the ioctl_0x903BC data structure. The relevant fields of this structure, sent as the input buffer to BaseDirectoryPath, are shown below. The BaseDirectoryPath must be a directory in the sync root folder; otherwise the request fails.

```
Offset  Length  Field                Description
------  ------  -------------------  ---------------------------------------------
0x00    4       Tag                  Set to 0x9000001A.
0x04    4       OpType               Set to 0xC0000001.
...
0x0C    4       size                 Size of the 'create_placeholder_t'.
...
0x10    8       placeholder_payload  Pointer to the 'create_placeholder_t'
                                     data structure.
...
```

The input buffer built by the CfCreatePlaceholders() API implemented in cldapi.dll contains a pointer to the create_placeholder_t data structure in the placeholder_payload field.
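To make the header layout concrete, the following C sketch decodes the documented fields of an ioctl_0x903BC buffer from raw bytes and checks whether it would be treated as a CfCreatePlaceholders() request. It is a portable illustration, not driver code: the ioctl_0x903BC_view type, the helper names and the macro names are hypothetical, the fields are read as little-endian values as on x64, and the undocumented bytes (0x08 to 0x0B and everything after 0x17) are simply skipped.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Values taken from the text: IO_REPARSE_TAG_CLOUD and the create-placeholders OpType. */
#define TAG_CLOUD_SKETCH        0x9000001Au
#define OP_CREATE_PLACEHOLDERS  0xC0000001u

/* Hypothetical decoded view of the documented ioctl_0x903BC fields. */
typedef struct {
    uint32_t tag;                 /* offset 0x00 */
    uint32_t op_type;             /* offset 0x04 */
    uint32_t size;                /* offset 0x0C: size of the create_placeholder_t data */
    uint64_t placeholder_payload; /* offset 0x10: user-mode pointer, kept as a number */
} ioctl_0x903BC_view;

/* Little-endian reads that do not assume any alignment of the buffer. */
static uint32_t rd32(const uint8_t *p) {
    return (uint32_t)p[0] | (uint32_t)p[1] << 8 | (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
}

static uint64_t rd64(const uint8_t *p) {
    return (uint64_t)rd32(p) | (uint64_t)rd32(p + 4) << 32;
}

/* Decodes the header fields; returns 0 if buf is too short to hold them. */
int decode_ioctl_0x903BC(const uint8_t *buf, size_t len, ioctl_0x903BC_view *out) {
    if (len < 0x18)
        return 0;
    out->tag = rd32(buf + 0x00);
    out->op_type = rd32(buf + 0x04);
    out->size = rd32(buf + 0x0C);
    out->placeholder_payload = rd64(buf + 0x10);
    return 1;
}

/* Nonzero when Tag and OpType select the CfCreatePlaceholders() operation. */
int is_create_placeholders(const ioctl_0x903BC_view *v) {
    return v->tag == TAG_CLOUD_SKETCH && v->op_type == OP_CREATE_PLACEHOLDERS;
}
```

Reading through byte-wise helpers rather than casting the buffer to a struct keeps the sketch independent of compiler padding and of the host's alignment rules.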

The create_placeholder_t data structure is shown below.

```
Offset  Length  Field                Description
------  ------  -------------------  ---------------------------------------------
0x08    2       relativeName_offset  The offset to the 'relName' start.
0x0A    2       relativeName_len     The 'relName' size.
0x0C    2       fileidentity_offset  The offset to the 'fileid' start.
0x0E    2       fileidentity_len     The 'fileid' size.
...
0x2E    4       fileAttributes       The file attributes to apply
                                     to the created file.
...
0x50    VAR     relName              The relative file name content.
VAR     VAR     fileid               The file identity content.
```

When PlaceholderCount is greater than one, placeholder_payload is an array of create_placeholder_t structures. The PlaceholderCount value itself is not part of the ioctl_0x903BC data structure, because the kernel simply keeps reading entries from the placeholder_payload buffer until it reaches the end.
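The walk over the placeholder_payload array can be sketched as follows. This is a hypothetical, portable re-implementation for illustration only. It assumes, based on the v53 update from LODWORD(unknown_0) in the decompiled loop of HsmpOpCreatePlaceholders(), that the DWORD at offset 0x00 of each entry holds the offset of the next entry relative to the current one, with 0 ending the list. It also assumes every entry starts with the 0x50-byte fixed header that the driver copies to the stack. The function name is our own.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

static uint16_t rd16(const uint8_t *p) { return (uint16_t)(p[0] | p[1] << 8); }
static uint32_t rd32(const uint8_t *p) { return (uint32_t)rd16(p) | (uint32_t)rd16(p + 2) << 16; }

#define ENTRY_HEADER_SIZE 0x50 /* fixed part of each entry (assumption, see lead-in) */

/* Counts the entries in a placeholder_payload buffer of `size` bytes.
 * Returns -1 if an entry header or its relName would fall outside the buffer.
 * ASSUMPTION: the DWORD at offset 0x00 is the offset of the next entry,
 * relative to the current one; 0 terminates the list. */
int count_placeholder_entries(const uint8_t *payload, size_t size) {
    size_t pos = 0;
    int count = 0;
    for (;;) {
        if (size < ENTRY_HEADER_SIZE || pos > size - ENTRY_HEADER_SIZE)
            return -1;
        const uint8_t *entry = payload + pos;
        size_t name_off = rd16(entry + 0x08); /* relativeName_offset, from entry start */
        size_t name_len = rd16(entry + 0x0A); /* relativeName_len, in bytes */
        if (name_off + name_len > size - pos)
            return -1;
        count++;
        uint32_t next = rd32(entry + 0x00);
        if (next == 0)
            return count;
        pos += next; /* pos strictly grows, so the loop always terminates */
    }
}
```

The bounds checks are our own addition for a safe walker; the point of the sketch is only the "keep reading until the chain ends" structure described above.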

Vulnerability

The vulnerability is in the HsmpOpCreatePlaceholders() function, implemented in the cldflt.sys driver, when handling an IOCTL with the following characteristics:

- I/O control code is 0x903BC.

- The input buffer has the ioctl_0x903BC format.

- Tag is set to IO_REPARSE_TAG_CLOUD.

- Size must be greater than or equal to 0x98.

- Operation is equal to 0xC0000001, i.e. creating a placeholder.

When creating the placeholder, the driver checks the accessibility and permissions of the sync root directory by invoking the HsmiOpPrepareOperation() function, which in turn leads to a call to the HsmFltProcessCreatePlaceholders() function.

```c
// cldflt.sys
__int64 __fastcall HsmFltProcessCreatePlaceholders(
        __int64 a1,
        PFLT_INSTANCE *a2,
        PFILE_OBJECT baseDirObj,
        __int64 a4,
        __int64 a5,
        PVOID Object,
        __int128 *a7,
        PFLT_CALLBACK_DATA CallbackData,
        ioctl_0x903BC *inputBuffer,
        unsigned int inputBufferSize,
        char a11)
{
  // [Truncated]

  // [1]
  if ( inputBufferSize < 0x20 || (v14 = inputBuffer, inputBuffer->size < 0x50) )
  {
    // [Truncated]
  }

  // [2]
  SyncPolicy = HsmpRelativeStreamOpen(
                 (__int64)a2,
                 baseDirObj,
                 0i64,
                 0x100000u,
                 1u,
                 32,
                 v26,
                 &a7,
                 (PFILE_OBJECT *)&Object);
  HsmDbgBreakOnStatus((unsigned int)SyncPolicy);
  if ( SyncPolicy < 0 )
  {
    // [Truncated]
  }

  // [Truncated]

  // [3]
  SyncPolicy = HsmpOpCreatePlaceholders(a2, a7, syncPolicy, inputBuffer->placeholder_payload, inputBuffer->size, &v27);

  // [Truncated]
}
```

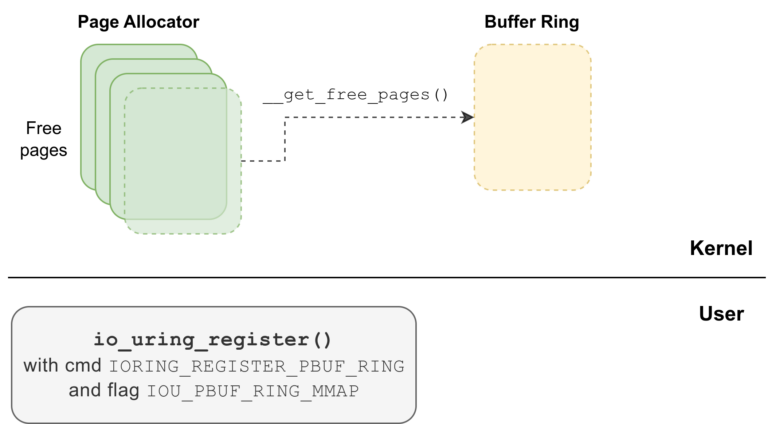

At [1], it checks that the input buffer size, i.e. the nInBufferSize parameter of the DeviceIoControl() function, is at least 0x20 bytes. It also checks that ioctl_0x903BC.size is greater than or equal to 0x50, which means that the placeholder_payload buffer must be at least 0x50 bytes long. If either constraint is not satisfied, it bails out with an error.

Then at [2], it invokes the HsmpRelativeStreamOpen() function to open the TargetFileObject stored in the PFLT_CALLBACK_DATA structure, which is the file that received the I/O control request 0x903BC (i.e. BaseDirectoryPath). The file handle is stored in the a7 variable. The handle is opened by internally invoking the FltCreateFileEx() function. If any error is returned then it bails out.

At [3], it invokes the HsmpOpCreatePlaceholders() function that will finally process the request. It is invoked by passing the placeholder_payload pointer, its size, and the handle to the directory that received the I/O control request (i.e. BaseDirectoryPath) as arguments.

```c
// cldflt.sys
__int64 __fastcall HsmpOpCreatePlaceholders(
        PFLT_INSTANCE *a1,
        __int128 *DirHandle,
        int syncPolicy,
        create_placeholder_t *placeholderPayload,
        ULONG placeholderPayload_size,
        int *out)
{
  syncPolicy_cp = syncPolicy;
  dirHandle_cp = DirHandle;
  P = a1;
  v51 = DirHandle;
  placeholderPayload_size_cp = placeholderPayload_size;
  v66 = out;
  // [Truncated]
  v53 = 0;
  // [Truncated]

  // [4]
  MemoryDescriptorList = IoAllocateMdl(placeholderPayload, placeholderPayload_size, 0, 0, 0i64);
  if ( !MemoryDescriptorList )
  {
    // [Truncated]
  }

  // [5]
  ProbeForRead(placeholderPayload, placeholderPayload_size, 4u);
  v10 = MemoryDescriptorList;
  MmProbeAndLockPages(MemoryDescriptorList, 1, IoReadAccess);
  if ( (MemoryDescriptorList->MdlFlags & 5) != 0 ) // non paged pool | SYSTEM_VA_MAPPED
    MappedSystemVa = (create_placeholder_t *)MemoryDescriptorList->MappedSystemVa;
  else
    MappedSystemVa = (create_placeholder_t *)MmMapLockedPagesSpecifyCache(
                                               MemoryDescriptorList,
                                               0,
                                               MmCached,
                                               0i64,
                                               0,
                                               0x40000010u);
  mmapped_userspace_region = MappedSystemVa;
  if ( !MappedSystemVa )
  {
    // [Truncated]
  }
  // [Truncated]

  // [6]
  while ( 1 )
  {
    memset(&placeholderPayload_stack, 0, sizeof(placeholderPayload_stack));
    v16 = (create_placeholder_t *)((char *)mmapped_userspace_region + v53);
    v51 = v16;
    v65 = 0i64;
    memset(&ObjectAttributes, 0, 44);
    // [Truncated]

    // [7]
    *(_OWORD *)&placeholderPayload_stack.unknown_0 = *(_OWORD *)&v16->unknown_0;
    *(_OWORD *)placeholderPayload_stack.unknown_1 = *(_OWORD *)v16->unknown_1;
    *(_OWORD *)&placeholderPayload_stack.unknown_1[16] = *(_OWORD *)&v16->unknown_1[16];
    *(_OWORD *)&placeholderPayload_stack.fileAttributes = *(_OWORD *)&v16->fileAttributes;
    *(_OWORD *)&placeholderPayload_stack.minus_1 = *(_OWORD *)&v16->minus_1;
    // [Truncated]

    // [8]
    v21 = (int)v51;
    v22 = 0;
    if ( HIWORD(relName_sz) >> 1 )
    {
      while ( 1 )
      {
        v23 = *(_WORD *)((char *)v51 + 2 * v22 + relName_offset);
        if ( v23 == '\\' || v23 == ':' )
          break;
        if ( ++v22 >= (unsigned __int16)(HIWORD(relName_sz) >> 1) )
          goto LABEL_51;
      }
      LODWORD(v24) = 0xC000CF0B;
      HsmDbgBreakOnStatus(0xC000CF0Bi64);
      v27 = WPP_GLOBAL_Control;
      if ( WPP_GLOBAL_Control == (PDEVICE_OBJECT)&WPP_GLOBAL_Control
        || (HIDWORD(WPP_GLOBAL_Control->Timer) & 1) == 0
        || BYTE1(WPP_GLOBAL_Control->Timer) < 2u )
      {
        goto LABEL_162;
      }
      v28 = 107i64;
      goto continue;
      // [Truncated]
    }
    // [Truncated]

    // [9]
    *((_QWORD *)&v65 + 1) = (char *)v51 + placeholderPayload_stack.relativeName_offset;
    LOWORD(v65) = placeholderPayload_stack.relativeName_len;
    WORD1(v65) = placeholderPayload_stack.relativeName_len;
    ObjectAttributes.Length = 48;
    ObjectAttributes.RootDirectory = dirHandle_cp;
    ObjectAttributes.Attributes = 576;
    ObjectAttributes.ObjectName = (PUNICODE_STRING)&v65;
    *(_OWORD *)&ObjectAttributes.SecurityDescriptor = 0i64;
    CreateOptions = (v33 != 0) | 0x208020;

    // [10]
    LODWORD(v24) = FltCreateFileEx2(
                     Filter,
                     Instance,
                     &FileHandle,
                     &FileObject,
                     0x100180u,
                     &ObjectAttributes,
                     &IoStatusBlock,
                     &AllocationSize,
                     FileAttributes,
                     0,
                     2u,
                     CreateOptions,
                     0i64,
                     0,
                     0x800u,
                     &DriverContext);
    // [Truncated]

continue:
    v29 = (PFILE_OBJECT)dirHandle_cp;
    // [Truncated]
    if ( (int)v24 >= 0 || (syncPolicy_cp & 1) == 0 )
    {
      v38 = LODWORD(placeholderPayload_stack.unknown_0) ? LODWORD(placeholderPayload_stack.unknown_0) + v53 : 0;
      v53 = v38;
      if ( v38 )
        continue;
    }
    // [Truncated]
```

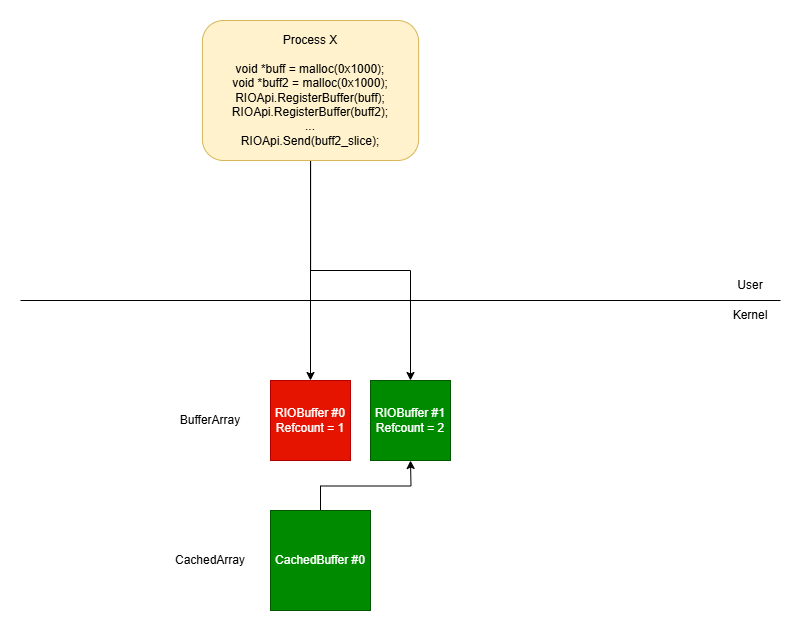

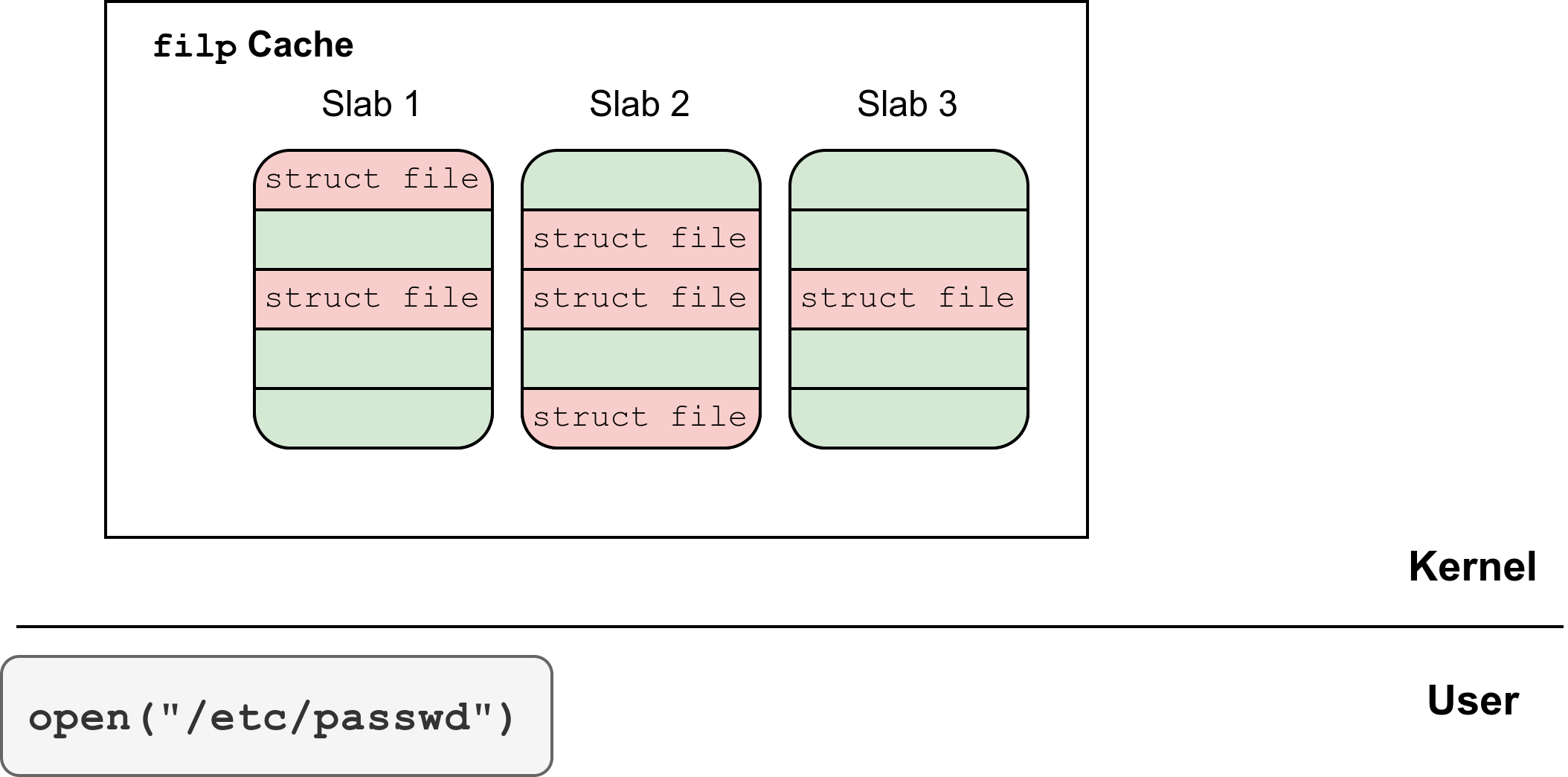

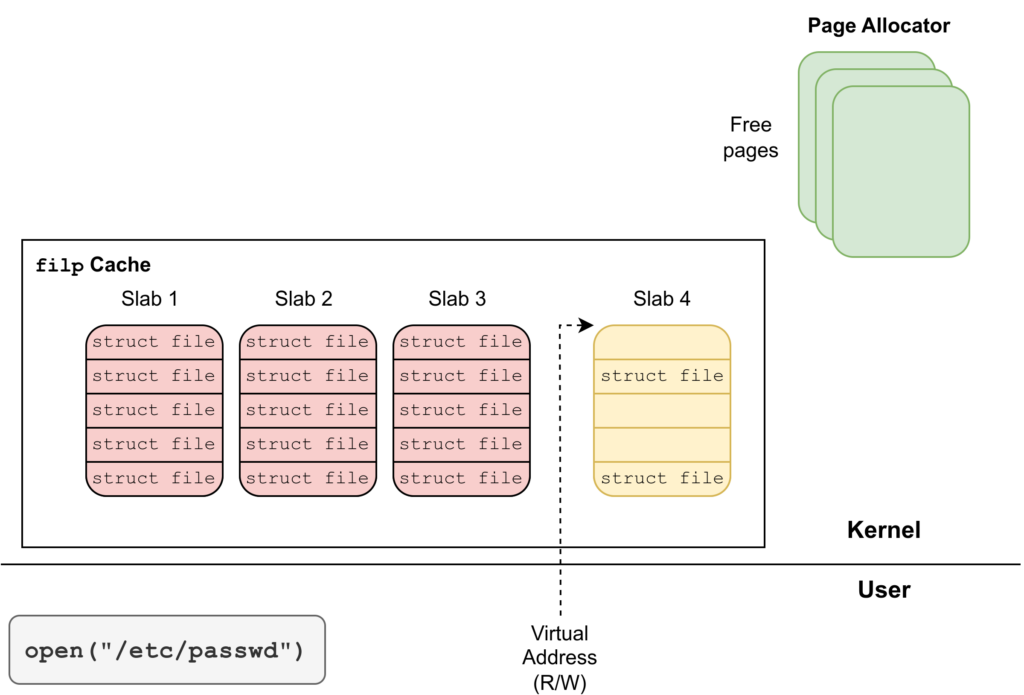

At [4], the HsmpOpCreatePlaceholders() function invokes IoAllocateMdl() to allocate a memory descriptor list (MDL) describing the userspace buffer (i.e. placeholderPayload). At [5], it calls ProbeForRead() to verify that the buffer lies in user address space, then locks its pages in memory with MmProbeAndLockPages(). Since the buffer neither comes from non-paged pool nor is already mapped into the kernel virtual address space, it is mapped by invoking the MmMapLockedPagesSpecifyCache() function.

The MmMapLockedPagesSpecifyCache() function maps the locked userspace pages into the kernel virtual address space, so the userspace buffer and MappedSystemVa are two virtual views of the same physical pages. As a result, any modification of the userspace buffer is immediately visible through MappedSystemVa.
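The double-mapping effect can be reproduced in user mode on a POSIX system, which may help build intuition. The sketch below is an analogy rather than Windows code: it maps the same page of a temporary file twice with mmap(MAP_SHARED), standing in for the userspace buffer and MappedSystemVa, writes through one view and reads the change back through the other. The function name and the test string are our own choices.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Maps one page of a temporary file twice. Returns 1 if a write through the
 * writable "user" view is visible through the separate read-only "kernel"
 * view, 0 if it is not, and -1 if the setup fails. */
int shared_page_demo(void) {
    FILE *f = tmpfile();
    if (f == NULL)
        return -1;
    int fd = fileno(f);
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    if (ftruncate(fd, (off_t)page) != 0) {
        fclose(f);
        return -1;
    }

    char *user_view = mmap(NULL, page, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    char *kernel_view = mmap(NULL, page, PROT_READ, MAP_SHARED, fd, 0);

    int result = -1;
    if (user_view != MAP_FAILED && kernel_view != MAP_FAILED) {
        memcpy(user_view, "JUSTASTRINGD", 13); /* name as it looked when checked */
        user_view[11] = '\\';                  /* later change through the user view */
        result = (user_view != kernel_view && kernel_view[11] == '\\') ? 1 : 0;
    }

    if (user_view != MAP_FAILED)
        munmap(user_view, page);
    if (kernel_view != MAP_FAILED)
        munmap(kernel_view, page);
    fclose(f);
    return result;
}
```

The two views live at different virtual addresses, yet the read-only view observes the write, which is exactly why reading relName through MappedSystemVa gives no protection against concurrent changes from userspace.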

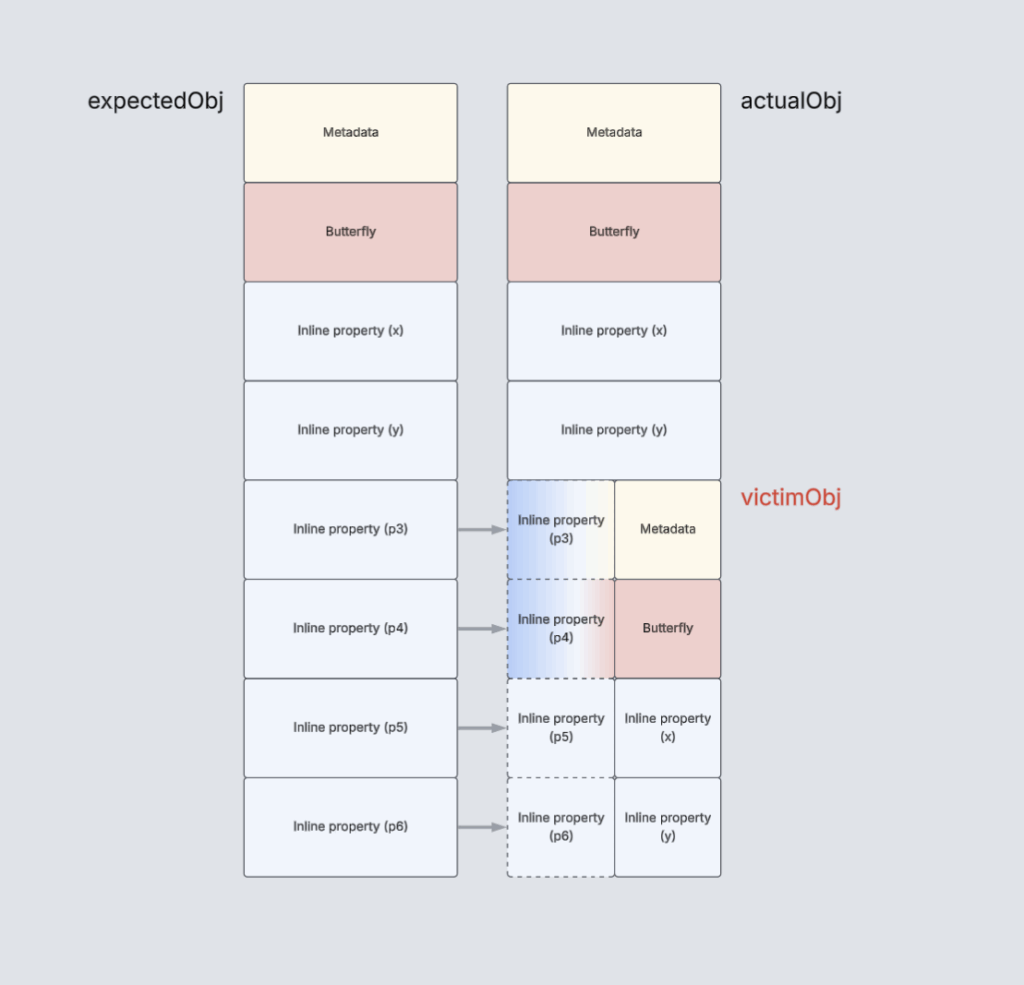

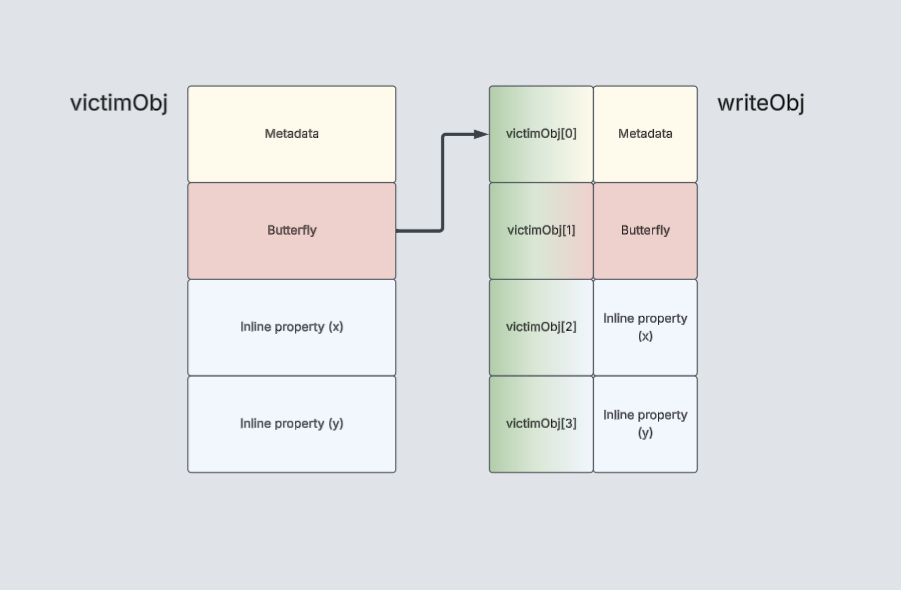

At [6], the function starts parsing the placeholderPayload buffer, creating each of the requested placeholder files and directories in turn. First, at [7], it copies the fixed-size part of the current entry into a stack variable, i.e. placeholderPayload_stack. All the fields except relName and fileid are copied into placeholderPayload_stack; the relName in particular is still read directly from the mapped userspace buffer, as shown at [8] and [9].

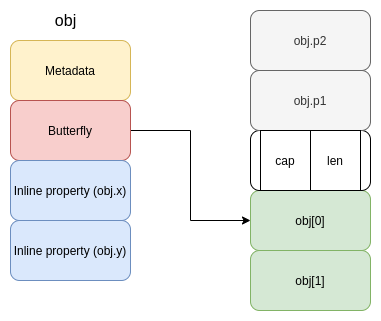

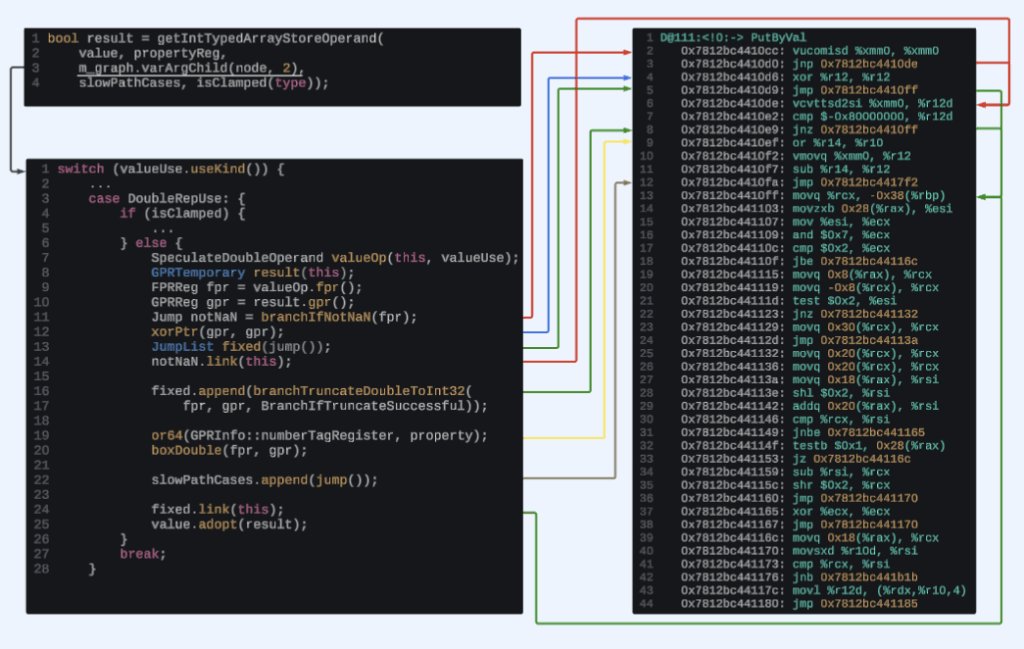

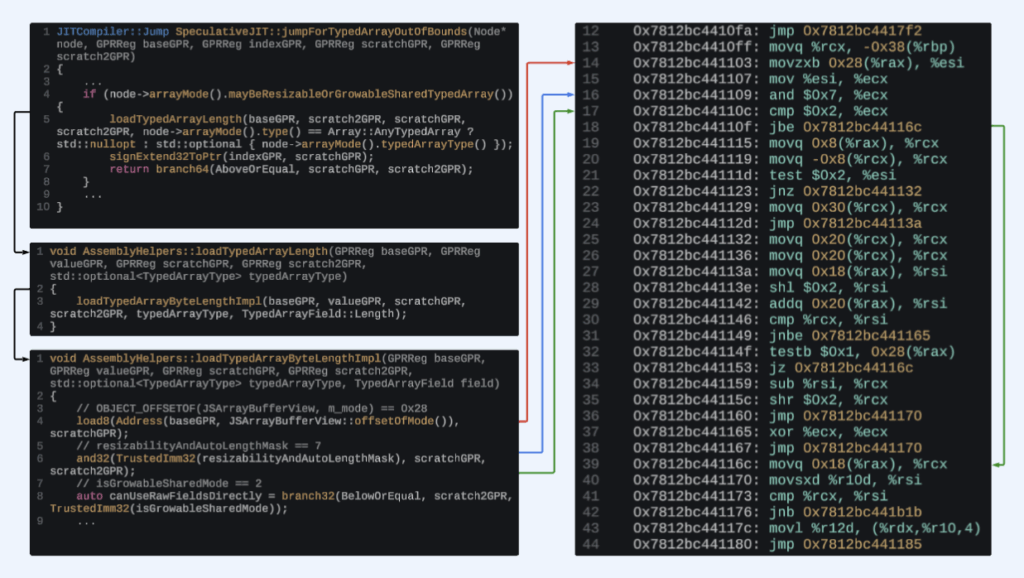

At [8], it validates the filename contained in the relName field of the current entry by checking each wide character. If any character is equal to \ or :, it stops processing the current relName (setting the status to 0xC000CF0B) and continues by updating the v53 variable, which holds the offset of the next entry of the placeholderPayload buffer to process. Finally, at [9], if the relName is considered valid, the ObjectAttributes structure is filled in: RootDirectory is set to the handle of the directory targeted by the I/O control request 0x903BC (i.e. BaseDirectoryPath), and ObjectName points to the relName bytes inside mmapped_userspace_region.
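For reference, the check at [8] behaves like the following portable C function. It is a sketch reconstructed from the decompiled listing, not Microsoft's source: the function and macro names are hypothetical, relName is modelled as an array of 16-bit UTF-16 code units, and the length is taken in bytes, as stored in relativeName_len, then halved.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_STATUS_SUCCESS  0x00000000u
#define SKETCH_STATUS_BAD_NAME 0xC000CF0Bu /* status the driver sets at [8] */

/* Mirrors the loop at [8]: rejects relName if any code unit is a backslash or ':'. */
uint32_t validate_rel_name(const uint16_t *rel_name, uint16_t rel_name_len_bytes) {
    uint16_t chars = (uint16_t)(rel_name_len_bytes >> 1); /* bytes -> wide characters */
    for (uint16_t i = 0; i < chars; i++) {
        if (rel_name[i] == '\\' || rel_name[i] == ':')
            return SKETCH_STATUS_BAD_NAME;
    }
    return SKETCH_STATUS_SUCCESS;
}

/* Test helper: widens an ASCII string into UTF-16 code units; returns the count. */
size_t widen_ascii(const char *s, uint16_t *out, size_t cap) {
    size_t n = 0;
    while (n < cap && s[n] != '\0') {
        out[n] = (uint16_t)(unsigned char)s[n];
        n++;
    }
    return n;
}
```

On its own the check is sound; the problem is only that the buffer it inspects can change after it returns.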

Since mmapped_userspace_region is a kernel mapping of a userspace buffer, the user can change the file name stored in mmapped_userspace_region.relName after the check at [8] but before FltCreateFileEx2() is executed at [10]. FltCreateFileEx2() is invoked with IO_IGNORE_SHARE_ACCESS_CHECK (0x800) as the Flags argument, and OBJECT_ATTRIBUTES.Attributes is set to 576 (0x240), i.e. OBJ_KERNEL_HANDLE | OBJ_CASE_INSENSITIVE.

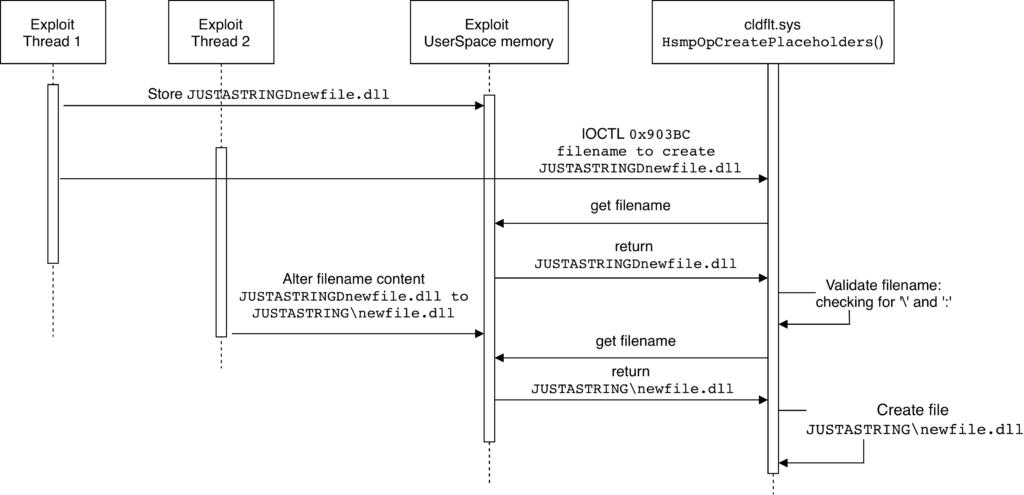

Between [8] and [10] there is a time window in which an attacker can alter the placeholderPayload.relName string by inserting a \ character, achieving arbitrary file or directory creation. Since FltCreateFileEx2() is not invoked with any flag that makes it fail when a symbolic link or junction is encountered in the path, an attacker can request the creation of a placeholder with relName set to JUSTASTRINGDnewfile.dll. If the attacker wins the race, the relName content changes from JUSTASTRINGDnewfile.dll to JUSTASTRING\newfile.dll after the check. If the JUSTASTRING directory exists and is a junction to a directory that is not writable by the user, FltCreateFileEx2() follows the junction and creates newfile.dll in that non-writable directory.
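The following single-threaded C sketch models the bug deterministically. A callback stands in for the attacker thread and runs between the check and the use, so the outcome does not depend on timing. create_vulnerable() validates and then re-reads the shared buffer, like the driver. create_captured() shows the usual mitigation for this class of double-fetch bug: copy the name into private memory once, then validate and use only that copy. We have not analyzed the October 2025 patch, so the second function should not be read as a description of Microsoft's fix. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAX_NAME_CHARS 256

/* Stand-in for the attacker thread: runs inside the check-to-use window. */
typedef void (*race_window_fn)(uint16_t *shared, size_t chars);

static int has_forbidden_char(const uint16_t *s, size_t chars) {
    for (size_t i = 0; i < chars; i++)
        if (s[i] == '\\' || s[i] == ':')
            return 1;
    return 0;
}

/* Simulated attacker write: turns the first 'D' into a backslash. */
void flip_first_D(uint16_t *shared, size_t chars) {
    for (size_t i = 0; i < chars; i++) {
        if (shared[i] == 'D') {
            shared[i] = '\\';
            return;
        }
    }
}

/* Driver-like pattern: check and use both read the shared buffer (double fetch).
 * On success, copies the name the create call would see into used_name. */
int create_vulnerable(uint16_t *shared, size_t chars, race_window_fn window, uint16_t *used_name) {
    if (has_forbidden_char(shared, chars))             /* time of check */
        return 0;
    if (window)
        window(shared, chars);                         /* attacker modifies the buffer */
    memcpy(used_name, shared, chars * sizeof *shared); /* time of use */
    return 1;
}

/* Capture-first pattern: one read into private memory, then check and use the copy. */
int create_captured(uint16_t *shared, size_t chars, race_window_fn window, uint16_t *used_name) {
    uint16_t local[MAX_NAME_CHARS];
    if (chars > MAX_NAME_CHARS)
        return 0;
    memcpy(local, shared, chars * sizeof *shared);     /* single fetch */
    if (has_forbidden_char(local, chars))
        return 0;
    if (window)
        window(shared, chars);                         /* change no longer matters */
    memcpy(used_name, local, chars * sizeof *local);
    return 1;
}

/* Test helper: widens an ASCII string into UTF-16 code units; returns the count. */
size_t widen_ascii(const char *s, uint16_t *out, size_t cap) {
    size_t n = 0;
    while (n < cap && s[n] != '\0') {
        out[n] = (uint16_t)(unsigned char)s[n];
        n++;
    }
    return n;
}
```

With the vulnerable variant, a name that passed validation as JUSTASTRINGDnewfile.dll reaches the "create" step as JUSTASTRING\newfile.dll; with the capture-first variant, the create step still sees the validated name even though the shared buffer was modified.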

Exploitation

In order to exploit this vulnerability, the following steps must be performed:

- Set up the environment – register a sync root directory and create the junction.

- Trigger the vulnerability by spawning multiple threads, where some execute CfCreatePlaceholders() and others try to change the relName between the relName validation and the FltCreateFileEx2() invocation.

- Use DLL side-loading to perform privilege escalation.

- Clean up post-exploitation.

These steps are detailed below:

Step 1 – Setup Environment

The exploit must register a directory as a sync root directory, which can be done by invoking the CfRegisterSyncRoot() API. It then creates a new directory, e.g. JUSTASTRING, inside the sync root directory and turns it into a junction pointing to a directory that is not writable by the user, such as C:\Windows\System32.

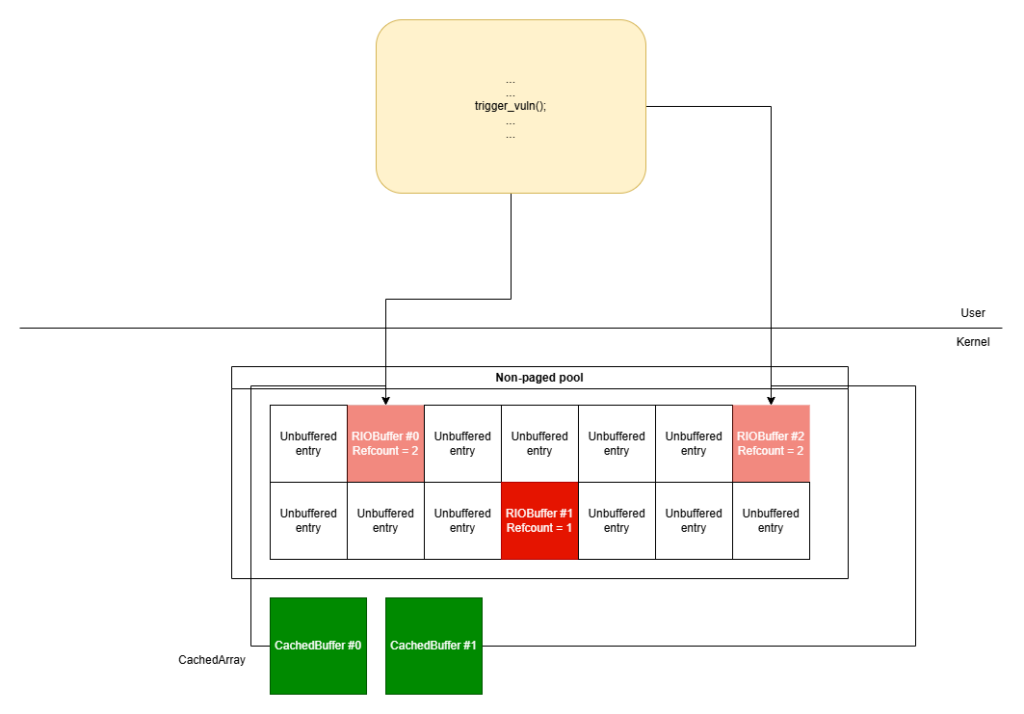

Step 2 – Trigger the vulnerability

The vulnerability can be exploited by running multiple threads in parallel: one of them monitors whether the file has been created, some others run an endless loop issuing the create placeholder operation, and the rest change bytes in the userspace buffer sent with that operation (i.e. relName). The threads are as follows:

- Monitor thread – the thread responsible for monitoring whether the file has been created.

- Create placeholder threads – the threads that execute the create placeholder operation.

- FileName Changer threads – the threads responsible for changing the filename to exploit the time-of-check to time-of-use vulnerability in the HsmpOpCreatePlaceholders() function.

Assuming the exploit wants to create the C:\Windows\System32\newfile.dll DLL, the monitor thread stops the race once the C:\Windows\System32\newfile.dll file has been created.

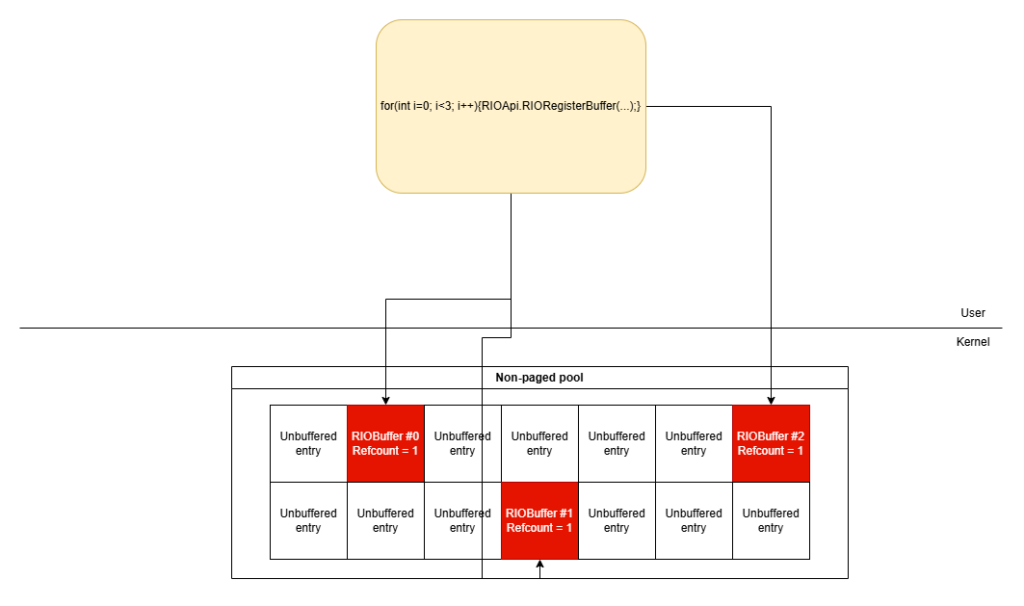

The create placeholder threads will continuously emit create placeholder operations by opening the sync root directory and issuing the 0x903BC I/O control code to it. All these threads send the same input buffer formatted as the ioctl_0x903BC data structure. The placeholder filename ioctl_0x903BC.placeholder_payload.relName is set to JUSTASTRINGDnewfile.dll and the ioctl_0x903BC.placeholder_payload.fileAttributes field is set to FILE_ATTRIBUTE_NORMAL.

The FileName Changer threads continuously change the D character (i.e. the twelfth wide character of the JUSTASTRINGDnewfile.dll string, at byte offset 22) from D to \ and back, with a small delay between the two changes.
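Because relName is stored as UTF-16, each character occupies two bytes, so the byte offset of a character is twice its index. The small helper below (hypothetical names, plain C) makes that arithmetic explicit for the JUSTASTRINGDnewfile.dll string used above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Byte offset of the first occurrence of ch in a UTF-16 string of n code
 * units, or -1 if it does not occur. Each code unit takes two bytes. */
long utf16_byte_offset_of(const uint16_t *s, size_t n, uint16_t ch) {
    for (size_t i = 0; i < n; i++)
        if (s[i] == ch)
            return (long)(i * sizeof *s);
    return -1;
}

/* Test helper: widens an ASCII string into UTF-16 code units; returns the count. */
size_t widen_ascii(const char *s, uint16_t *out, size_t cap) {
    size_t n = 0;
    while (n < cap && s[n] != '\0') {
        out[n] = (uint16_t)(unsigned char)s[n];
        n++;
    }
    return n;
}
```

For JUSTASTRINGDnewfile.dll, the uppercase D sits at character index 11 (after the eleven characters of JUSTASTRING), i.e. at byte offset 22 from the start of relName.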

In this way it is possible to bypass the relName validation, i.e. the check that relName does not contain any \ character, and reach the FltCreateFileEx2() function with relName set to JUSTASTRING\newfile.dll. If the change happens just after the relName validation, FltCreateFileEx2() follows the junction created on the JUSTASTRING directory and creates newfile.dll in C:\Windows\System32.

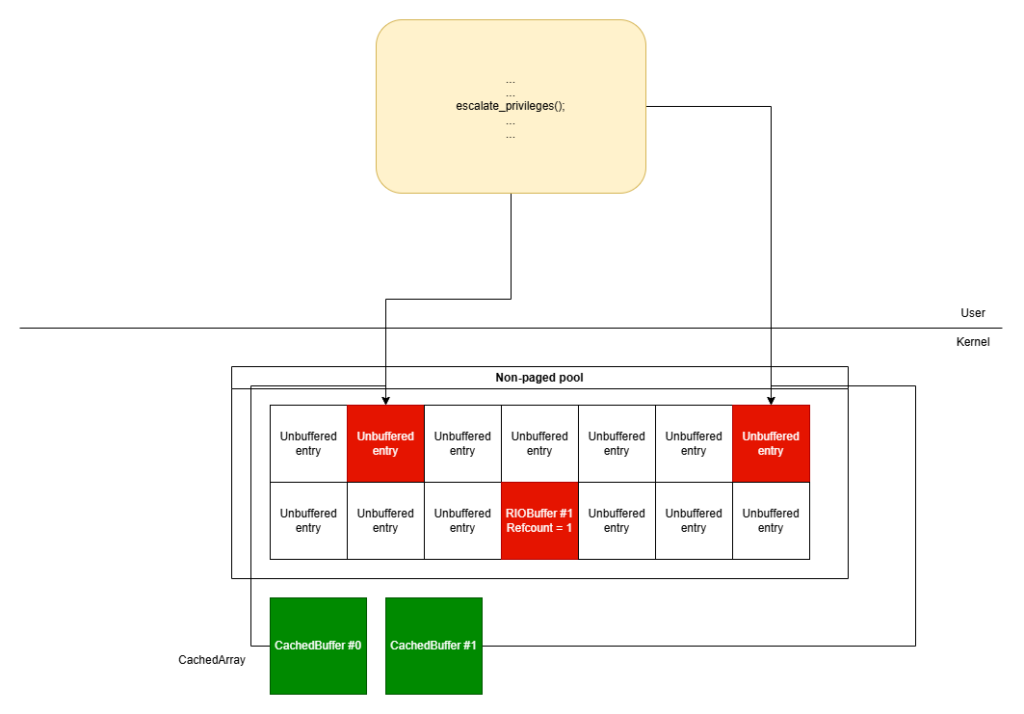

Once the monitor thread detects that C:\Windows\System32\newfile.dll has been created, it writes the malicious content into the file and starts the privilege escalation phase.

Step 3 – Privilege Escalation

Once the malicious file has been created, it is possible to escalate the privileges on the system by exploiting DLL side-loading.

About Exodus Intelligence

Our world class team of vulnerability researchers discover hundreds of exclusive Zero-Day vulnerabilities, providing our clients with proprietary knowledge before the adversaries find them. We also conduct N-Day research, where we select critical N-Day vulnerabilities and complete research to prove whether these vulnerabilities are truly exploitable in the wild.

For more information on our products and how we can help your vulnerability efforts, visit www.exodusintel.com or contact info@exodusintel.com for further discussion.